In the rapidly evolving landscape of artificial intelligence, where tools promise enhanced safety and efficiency, the line between protection and intrusion has never been thinner. A recent federal lawsuit filed by students against the Lawrence Public Schools district in Kansas underscores this precarious balance, serving as a cautionary tale for any business deploying AI-driven surveillance technologies. At the heart of the case is Gaggle Safety Management, an AI-powered monitoring system designed to scan student communications and files for signs of potential harm. What began as a well-intentioned effort to safeguard young lives has escalated into allegations of widespread privacy violations, erroneous flagging of innocuous content, and a profound chilling effect on creative expression. For business leaders, particularly those in education but also in any sector reliant on data-heavy operations, this incident highlights the urgent need for robust AI governance frameworks to mitigate legal, operational, and reputational risks.

As law firms advising clients on data privacy compliance, you are uniquely positioned to guide organizations through these turbulent waters. The Gaggle saga is not an isolated anomaly but a harbinger of the regulatory scrutiny that awaits businesses ignoring the foundational principles of consent, transparency, and accountability in AI deployments.

The Incident: From Artistic Expression to Algorithmic Overreach

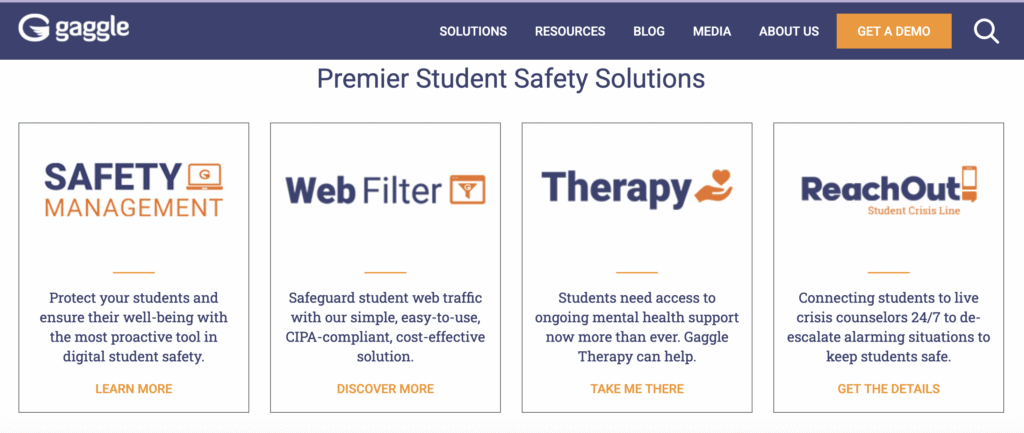

The controversy erupted at Lawrence High School and Free State High School in Lawrence, Kansas, where the district implemented Gaggle in 2023 under a three-year, $160,000 contract. Marketed as a proactive shield against threats like self-harm, substance abuse, cyberbullying, and violence, Gaggle integrates with school-issued Google Workspace accounts, scanning emails, documents, shared drives, and even calendar entries in real time. The system’s AI algorithms flag potentially concerning content, which is then reviewed by “trained safety professionals” (allegedly outsourced third-party contractors) before school administrators are alerted.

What should have been a routine artistic endeavor turned into a flashpoint. An art student’s digital portfolio, featuring works exploring themes of identity and emotion, was flagged by Gaggle as containing “pornographic” material. The AI’s misclassification led to the partial deletion of the portfolio without the student’s knowledge or consent, prompting immediate backlash. This was far from an isolated error; records obtained by a student journalist revealed that Gaggle flagged over 1,200 instances of student content in a single year, encompassing discussions on mental health, violence, drugs, and sex—many of which were benign or contextually appropriate.

Compounding the issue, the tool has been accused of deleting entire emails and documents preemptively, severing lines of communication between students and trusted adults like teachers or counselors. Student journalists, in particular, reported that their investigative notes on Gaggle itself were scanned and flagged, potentially exposing confidential sources in violation of journalistic protections under Kansas law. One former student, Natasha Torkzaban, articulated the pervasive anxiety: “Who else, other than me, is reading this document?” This sentiment echoes across the plaintiff group—comprising current and former students, including artists, photographers, and editors from the school newspaper—who filed the lawsuit in August 2025.

The district defends Gaggle vigorously, citing instances where the tool enabled life-saving interventions, such as alerting staff to credible suicide risks. Former Superintendent Anthony Lewis emphasized in a statement that the technology has “allowed our staff to intervene and save lives.” Gaggle, used by over 1,500 school districts nationwide, touts its “staunch commitment to supporting student safety without compromising privacy” on its website. Yet, the company has not publicly responded to the lawsuit’s specifics, leaving a void filled by mounting evidence of systemic flaws.

Legal Liabilities: Navigating a Minefield of Privacy Regulations

For business owners, the Gaggle lawsuit crystallizes the legal perils of deploying AI surveillance without ironclad compliance safeguards. The plaintiffs invoke violations of the First and Fourth Amendments, arguing that the program constitutes a “sweeping, suspicionless monitoring program” that chills free speech and enables unreasonable searches and seizures. Specifically, the seizure of student artwork without due process is framed as an overreach, transforming a safety net into a dragnet that undermines the very mental health objectives it purports to serve—by intercepting pleas for help directed at educators.

Beyond constitutional claims, the case implicates federal statutes like the Family Educational Rights and Privacy Act (FERPA), which generally requires written parental consent before personally identifiable information from education records is disclosed and protects student data from unauthorized disclosure. Gaggle’s outsourcing of reviews to external contractors raises red flags under FERPA’s third-party disclosure rules, potentially exposing districts to federal enforcement, up to and including the loss of federal education funding. State laws add further layers; Kansas’ shield laws for journalists, for instance, were allegedly circumvented when Gaggle scanned reporting notes, risking contempt charges or civil penalties for the district. Pending children’s privacy legislation, such as the Kids Online Safety Act (KOSA), may complicate compliance further for future ed-tech platforms.

Emerging AI-specific regulations amplify these risks. The European Union’s AI Act, effective from 2024, classifies high-risk AI systems like surveillance tools under stringent transparency and accountability requirements, including mandatory impact assessments and human oversight. In the U.S., while federal AI legislation lags, states like California (via the California Privacy Rights Act) and Colorado (with its AI accountability bill) demand consent mechanisms for automated decision-making that processes personal data. Non-compliance isn’t merely a slap on the wrist; it invites class-action suits, regulatory audits, and damages that can cripple mid-sized operations. The Gaggle plaintiffs seek a permanent injunction, compensatory and punitive damages, and attorney’s fees—remedies that could set precedents forcing businesses to overhaul their tech stacks overnight.

Law firms counseling clients must emphasize that these liabilities extend beyond education. Retailers using AI for employee monitoring, healthcare providers scanning patient communications, or even corporate firms tracking vendor interactions face analogous exposures under the Gramm-Leach-Bliley Act or HIPAA. A single erroneous flag can trigger discrimination claims if biases in AI training data disproportionately affect protected groups, as seen in broader probes revealing Gaggle’s inadvertent outing of LGBTQ+ students through flagged discussions of sexuality.

Operational and Reputational Fallout: The Hidden Costs of Surveillance Creep

The legal threats are overt, but the operational disruptions are insidious. In Lawrence, students report self-censoring in essays, emails, and collaborative projects, fostering an environment of perpetual caution that stifles innovation and engagement. For schools as businesses, this translates to diminished educational outcomes—lower participation in creative curricula, reduced trust in digital tools, and heightened administrative burdens from reviewing false positives. Over 1,200 flags annually demand significant staff time, diverting resources from core missions like teaching and curriculum development.

Reputational damage compounds these issues. The lawsuit’s publicity has painted the Lawrence district as intrusive and tone-deaf, eroding community trust and enrollment appeal. A joint investigation by the Seattle Times and Associated Press uncovered parallel data security lapses with Gaggle, where flagged content was temporarily exposed online in unsecured formats, inviting breaches under laws like the Children’s Online Privacy Protection Act (COPPA). Businesses ignoring such vulnerabilities risk not just lawsuits but boycotts, talent flight, and investor pullback in an era where ESG (Environmental, Social, and Governance) criteria increasingly scrutinize data ethics.

The chilling effect on creativity warrants particular scrutiny for business owners. In knowledge-driven sectors, where ideation fuels growth, algorithmic oversight can suppress divergent thinking. Employees or stakeholders may withhold bold ideas fearing misinterpretation, mirroring how Lawrence artists now second-guess their portfolios. This isn’t hyperbole; studies from the Brookings Institution highlight how pervasive monitoring correlates with 15-20% drops in creative output, directly impacting productivity metrics that boards and shareholders track closely.

The Rising Tide of AI Governance: Consent as the Cornerstone of Compliance

Against this backdrop, AI governance tools are surging as a critical trend, bridging the gap between innovation and accountability. Regulators and industry bodies, from the NIST AI Risk Management Framework to the OECD’s AI Principles, advocate for “human-centric” approaches that prioritize consent, explainability, and minimal data processing. Consent-based tools, in particular, are gaining traction: they embed user notifications, granular opt-ins, and audit trails into AI workflows, ensuring deployments align with privacy-by-design mandates.
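To make the idea of granular opt-ins and audit trails concrete, here is a minimal Python sketch of a consent gate placed in front of a content scanner. It is an illustration under stated assumptions, not a description of how Gaggle or any vendor product works: the names `ConsentRegistry`, `AuditEvent`, and `scan_if_consented`, and the scope labels such as "email", are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List, Optional, Set


@dataclass
class AuditEvent:
    """One audit-trail entry for a consent change or a consent check."""
    timestamp: str
    user_id: str
    action: str    # "grant", "revoke", or "check"
    scope: str     # hypothetical scope label, e.g. "email" or "documents"
    allowed: bool  # consent state after the action


@dataclass
class ConsentRegistry:
    """Hypothetical registry: per-user, per-scope opt-ins plus an audit log."""
    _grants: Dict[str, Set[str]] = field(default_factory=dict)
    audit_log: List[AuditEvent] = field(default_factory=list)

    def _record(self, user_id: str, action: str, scope: str, allowed: bool) -> None:
        # Append-only by convention; a real system would use tamper-evident storage.
        self.audit_log.append(AuditEvent(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user_id=user_id, action=action, scope=scope, allowed=allowed,
        ))

    def grant(self, user_id: str, scope: str) -> None:
        """Record an opt-in for a single scope."""
        self._grants.setdefault(user_id, set()).add(scope)
        self._record(user_id, "grant", scope, True)

    def revoke(self, user_id: str, scope: str) -> None:
        """Withdraw a previously granted opt-in (no error if none exists)."""
        self._grants.get(user_id, set()).discard(scope)
        self._record(user_id, "revoke", scope, False)

    def is_allowed(self, user_id: str, scope: str) -> bool:
        """Check consent and log the check itself."""
        allowed = scope in self._grants.get(user_id, set())
        self._record(user_id, "check", scope, allowed)
        return allowed


def scan_if_consented(
    registry: ConsentRegistry,
    user_id: str,
    scope: str,
    content: str,
    scanner: Callable[[str], Any],
) -> Optional[Any]:
    """Run `scanner` only when the user has opted in for this scope."""
    if not registry.is_allowed(user_id, scope):
        return None  # no consent: the content is never passed to the scanner
    return scanner(content)
```

In use, a caller would create a `ConsentRegistry`, call `grant("student-1", "email")` once the user opts in, and route every scan through `scan_if_consented`. Content in scopes without an opt-in is never handed to the scanner, and each grant, revocation, and check leaves an entry in `audit_log` that can be produced later to show what was processed and on what basis.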

Captain Compliance stands at the forefront of this movement, empowering businesses with industry-leading solutions in data privacy management. Our cookie consent software ensures seamless compliance with GDPR, CCPA, and ePrivacy Directive requirements, while our cookie policy generators and privacy notice builders automate the creation of tailored, legally vetted documents. Extending this expertise to AI governance, our platform integrates consent mechanisms for surveillance tools: flagging data collection points, securing user agreements, and generating dynamic privacy notices that adapt to new AI regulations and privacy laws as they take effect.

If you’re a lawyer reading this, your clients turn to you for counsel on AI rollouts and privacy compliance. Those clients need partners who can operationalize compliance, not just theorize it. Captain Compliance’s suite transforms abstract regulations into actionable workflows, from automated policy updates to real-time consent dashboards that preempt litigation.

Charting a Compliant Future: Why Action Now Matters

The Gaggle lawsuit, now unfolding in federal court, may resolve in injunctions or settlements, but its lessons endure. Businesses that treat AI surveillance as a plug-and-play solution invite chaos; those embedding governance from the outset thrive. The risks (legal entanglements, operational drag, and reputational scars) are too grave to gamble with, especially as privacy laws evolve to address AI’s unique threats.

We are committed to equipping forward-thinking organizations with the tools to lead ethically in this space. We invite law firms, compliance leads, agencies, and business owners to explore how our solutions can safeguard your clients’ AI initiatives. Book a demo below to see firsthand how consent-based governance turns compliance from a burden into a competitive edge. In an era where data is the new oil, let’s ensure it’s handled with the precision it demands before the next headline hits too close to home.