AI governance is not just a compliance exercise.

Artificial intelligence governance can provide businesses with the certainty they need to continue to innovate with AI at scale, building faster, better, more reliable products that are trusted by both consumers and enterprise partners alike. AI enables global businesses to compete. It makes them faster, more efficient and more competitive. In order to adopt AI confidently, businesses need certainty. The field of work we are in focuses on how to give enterprises the certainty that their AI systems are accountable, trustworthy and safe, removing the barriers to their AI adoption so they can compete with enterprises that are already using AI to win business. Enterprises have adopted AI, and to stay competitive and continue using it, they now need to manage the risks at scale. Managing AI risk has truly become a reality for enterprises that must ensure compliance with hard regulations that have already come into force, such as the EU AI Act, as well as additional regulations that are already emerging in 2025, such as South Korea’s AI Act. The first penalties for noncompliance with AI-specific laws will begin to set a global precedent, forcing businesses to prioritize governance or face steep consequences.

Introduction: Why AI Governance Matters in 2025

As artificial intelligence continues its relentless march into every corner of business and society, the stakes for effective governance have skyrocketed. The AI Governance in Practice Report 2025, released by the International Association of Privacy Professionals (IAPP) in April 2025, serves as a vital compass in this turbulent terrain. This 63-page tome, authored by a team including Richard Sentinella, Joe Jones, Ashley Casovan, Lynsey Burke, and Evi Fuelle, synthesizes data from a spring 2024 survey of over 670 professionals across 45 countries and territories, enriched by seven in-depth case studies from pioneering organizations. It charts the evolution of AI governance from a nascent compliance afterthought to a strategic imperative, one that not only shields against risks but propels innovation in an era defined by the EU AI Act, South Korea’s impending AI Act, and a cascade of global regulations.

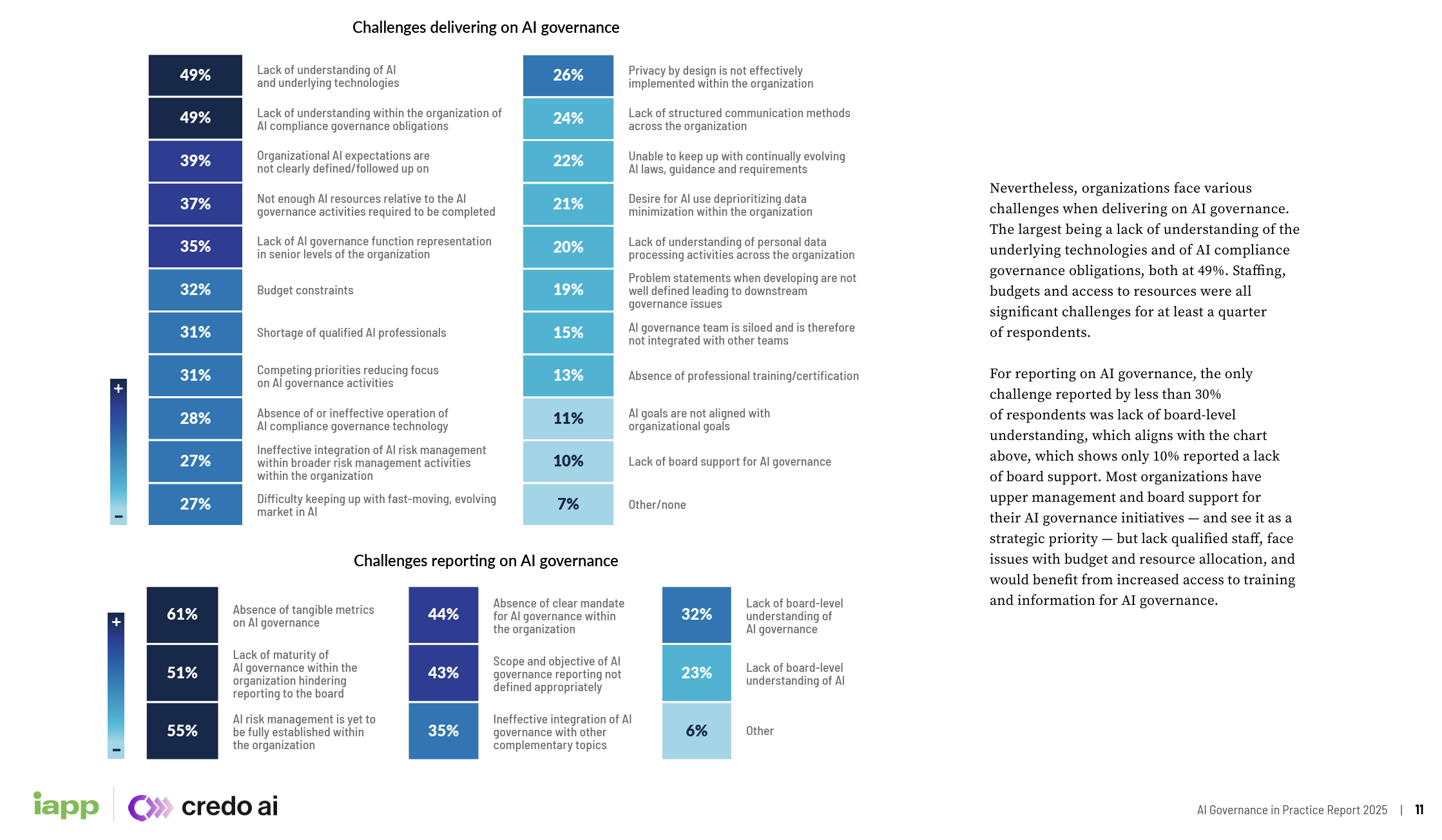

Challenges in delivering AI governance, from the IAPP report:

The report’s Foreword, co-authored by IAPP’s Joe Jones and Credo AI’s Evi Fuelle, strikes an urgent yet optimistic chord. “AI governance is not just a compliance exercise,” Jones and Fuelle declare. “Artificial intelligence governance can provide businesses with the certainty they need to continue to innovate with AI at scale, building faster, better, more reliable products that are trusted by both consumers and enterprise partners alike.” This isn’t hyperbole; it’s a recognition that AI’s promise (greater efficiency, sharper insights, personalized experiences) hinges on trust. Without governance, enterprises risk not just fines but obsolescence. The foreword positions 2025 as a watershed year, in which governance draws lessons from the maturation of cybersecurity and privacy but carves out AI-specific paths, such as managing model drift or socio-technical harms.

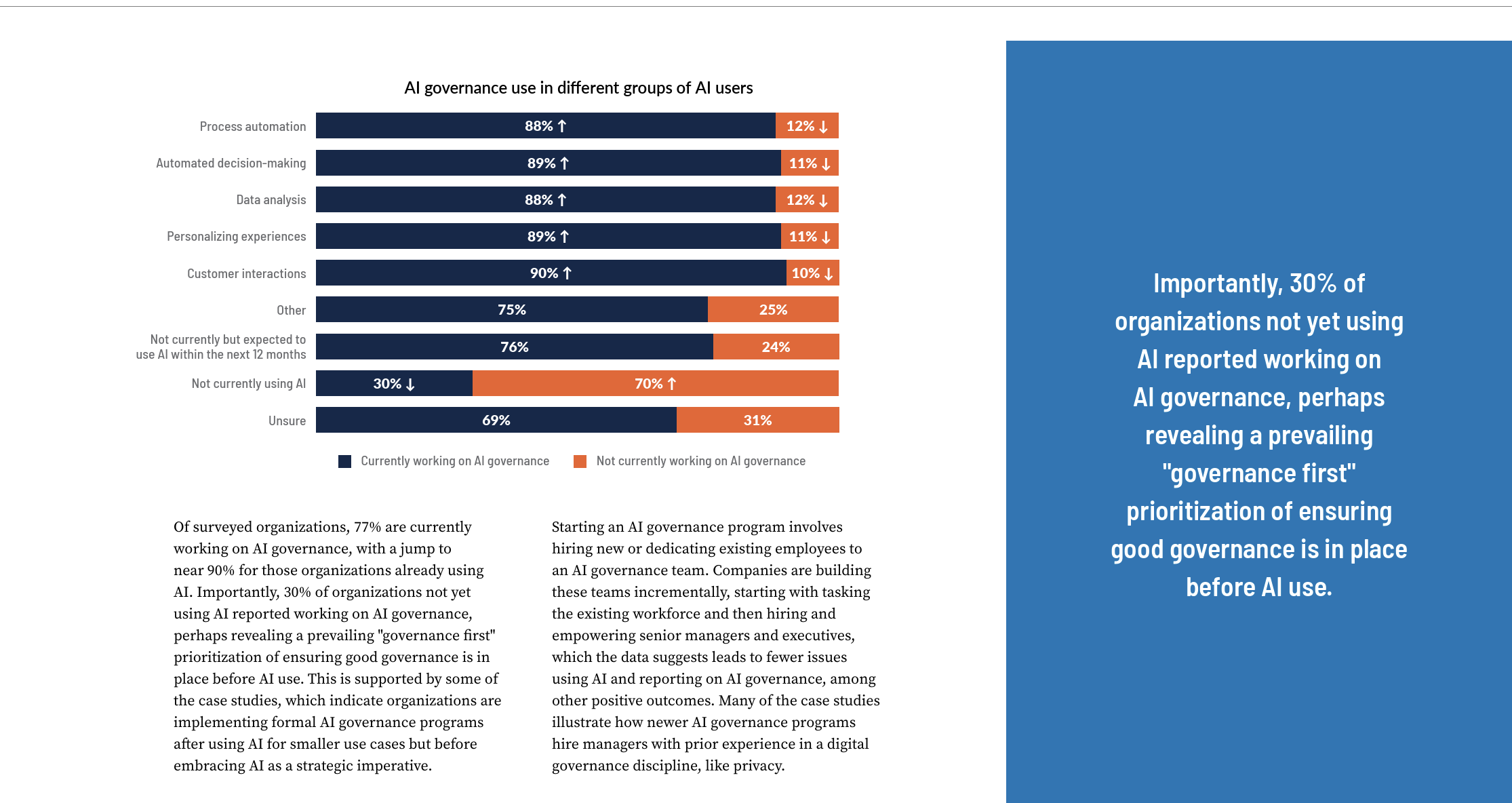

Diving into the Executive Summary (pages 5-9), the data paints a portrait of momentum tempered by hurdles. A staggering 77% of organizations are actively developing AI governance initiatives, a number that climbs to nearly 90% among those already deploying AI systems. Remarkably, even 30% of non-AI users are laying groundwork—a “governance first” strategy that anticipates adoption within 12 months (76% of non-users expect to integrate AI soon). This proactive stance is fueled by sociotechnical pressures and regulatory tsunamis, yet challenges loom: 23.5% cite a dearth of qualified talent, and 64% decry insufficient budgets. AI’s applications are ubiquitous—88% for process automation, 89% for automated decision-making, 88% for data analysis, 89% for personalized customer experiences, and 90% for customer interactions—yet only 18% feel their budgets suffice.

The survey’s methodology adds rigor: 25 AI-specific questions probed confidence levels (e.g., EU AI Act compliance), maturity indicators, and barriers, with statistical significance at 95% confidence. Case studies from North American and European firms—Mastercard, TELUS, BCG, Kroll, IBM, Randstad, and Cohere—complement this, revealing no one-size-fits-all model but shared threads like cross-functional “villages” of experts. As Navrina Singh, Founder & CEO of Credo AI, reflects: “AI governance is still evolving, and mature AI governance programs continue to find room to innovate.” This report isn’t just descriptive; it’s prescriptive, urging organizations to treat governance as an enabler, much like privacy by design unlocked compliant innovation a decade ago.

In this article, we unpack the report’s tripartite structure: building programs (Part I, pages 10-24), professionalizing the field (Part II, pages 25-36), and ensuring leadership and accountability (Part III, pages 37-61). We’ll weave in granular survey insights, data visualizations, direct quotes, and case study narratives to offer a blueprint for 2025 and beyond.

Part I: Building an AI Governance Program – Foundations for Responsible Innovation

Part I lays the groundwork, illustrating how organizations build AI governance amid hype and hazard. AI isn’t siloed; it’s woven into operations, and 47% of organizations rank governance as a top-five strategic priority, rising to 58% among those actively building it. Priorities skew toward frameworks (27%), assessments (13%), and teams (8%), yet barriers persist: 49% struggle to understand AI technology and the compliance landscape, 39% face unclear expectations, 37% report resource shortfalls, and 35% face budget constraints. Reporting problems compound this: 61% lack board-ready metrics, 51% cite program immaturity, 55% point to undefined scopes, and 32% to a lack of AI literacy on the board.

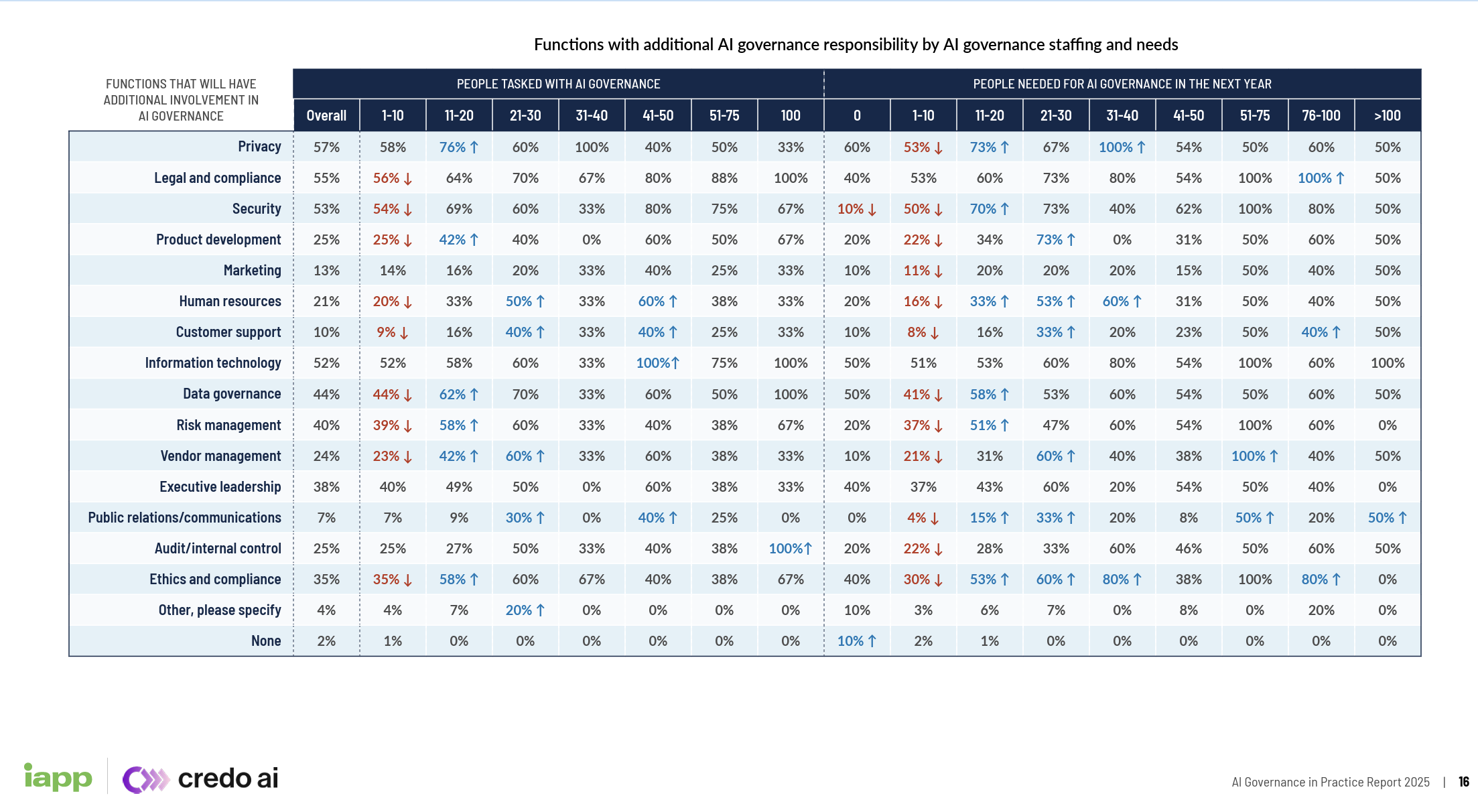

Many functions are taking on additional AI governance responsibilities: privacy (57%), legal/compliance (55%), security (53%), IT (52%), data governance (44%), risk (40%), and executives (38%). Larger teams amplify this: privacy involvement rises from 76% in teams of 11-20 staff to 100% in teams of more than 100. Committees, present in 39% of organizations, correlate strongly with AI use (51% of organizations using AI for automation have one, versus 9% of non-users) and are associated with a 41% reduction in mandate-clarity issues and a 37-40% reduction in board-reporting gaps. Budgets are the weak point: only 18% call theirs sufficient and 64% call it inadequate. Dissatisfaction reaches 66% in the under-$100K tier, while 27% of organizations spending $1-4.9M consider their budget sufficient. Organizations with more than 80,000 employees tend to spend more.

Tackling Challenges: A Granular Breakdown

The report breaks down challenges by function, revealing collaboration as an antidote. Lacking AI understanding? Security (57%), IT (53%), and privacy (60%) step up. No senior buy-in? Security (63%) and legal (56%). Fuzzy expectations? Privacy (65%) and legal (57%). Compliance gaps? Security (60%) and IT (57%). Talent searches engage HR (23%) and IT (52%). Budget constraints pull in risk (40%) and data governance (44%). Resource shortfalls? Vendor management (24%) and audit (25%). Clashing priorities? Executives (38%) and ethics (35%).

Best Practices: Incremental, Collaborative Builds

There is no single blueprint, but patterns emerge: inventory AI systems, triage them by risk and benefit, and embed review in existing workflows (e.g., adapt privacy impact assessments to cover bias and data leakage). “It takes a village,” the foreword echoes: leverage privacy, cybersecurity, IT, ethics, and legal. Upskill everyone through AI literacy, and specialists through “purple teaming,” which blends red-team (adversarial) and blue-team (defensive) exercises. Committees spread decision-making across functions, and as budgets grow they can fund tools for model-drift monitoring. As the report puts it: “Organizations see collaboration… as a way to shore up areas of challenges.” Markers of maturity include oversight bodies, clear communication, and AI training. Programs must also stay flexible as regulation evolves; South Korea’s AI Act will test this.
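The inventory-and-triage pattern above can be made concrete with a short Python sketch. It is illustrative only: the `AISystem` fields, the equal-weight risk score, and the routing thresholds are hypothetical assumptions, not criteria from the IAPP report; a real program would calibrate them to its own risk appetite and to applicable laws such as the EU AI Act.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    """One entry in a hypothetical AI inventory (fields are illustrative)."""
    name: str
    uses_personal_data: bool
    affects_decisions_about_people: bool
    customer_facing: bool
    expected_benefit: int  # assumed scale: 1 (low) to 5 (high)


def risk_score(system: AISystem) -> int:
    """Count how many of three assumed risk factors apply (0-3)."""
    return sum([
        system.uses_personal_data,
        system.affects_decisions_about_people,
        system.customer_facing,
    ])


def triage(system: AISystem) -> str:
    """Route a system to a review path; the thresholds are placeholders."""
    score = risk_score(system)
    if score >= 2:
        return "escalate to governance committee"
    if score == 1:
        # Reuse an existing workflow, e.g. a privacy impact assessment
        # extended to cover bias and data leakage.
        return "adapted impact assessment"
    return "standard approval"


def review_queue(inventory: list[AISystem]) -> list[AISystem]:
    """Order systems for review: highest risk first, then highest benefit."""
    return sorted(inventory, key=lambda s: (-risk_score(s), -s.expected_benefit))


# Hypothetical inventory entries for demonstration.
inventory = [
    AISystem("marketing copy assistant", False, False, True, 3),
    AISystem("resume screening model", True, True, False, 4),
    AISystem("internal log summarizer", False, False, False, 2),
]

for system in review_queue(inventory):
    print(f"{system.name}: {triage(system)}")
```

Sorting on risk first and benefit second puts high-stakes systems at the front of the queue and, within each risk level, reviews the most valuable projects first.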

Case Studies: Blueprints from the Vanguard

Mastercard: Centralized, Risk-Integrated Mastery

Mastercard’s AI governance program, launched in 2022, integrated AI into its privacy and data strategies and is run by a lean team of lawyers, compliance officers, policy specialists, and data scientists. Reviews funnel through enterprise risk frameworks; high-impact cases (those involving personal data or reputational risk) escalate to the AI and Data Council, co-chaired by the chief privacy officer and the chief AI and data officer. Ad hoc experts (e.g., IP lawyers) fill gaps. Policies front-load screening, and training instills Mastercard’s Data & Tech Responsibility Principles. Outcome: 62% EU AI Act confidence for automation. “We have embedded responsible AI as part of our innovation process,” says Caroline Louveaux. Streamlined and scalable, the small team punches above its weight.

TELUS: Safety-First, Certified Innovation

TELUS’s Data & Trust Office champions “safety first,” layering AI governance on top of its Trust Model and ethics principles. A vetting platform scans external models, and vendor agreements enforce data safeguards. A milestone: TELUS’s first generative AI tool earned ISO privacy-by-design certification. Its upskilling arsenal includes AI literacy courses, data stewards for assessments, Purple Teams for robustness testing, and a Responsible AI Squad for audits. “To innovate well, you need safety,” captures the ethos. Aligned budgets yield high satisfaction, suggesting that integration beats isolation.

Boston Consulting Group (BCG): Bespoke Digital Risk Orchestration

BCG’s custom digital tool catalogs AI use cases from ideation onward, first mapping a “risk landscape” and then assessing full impacts across business, technology, security, data protection, legal, and responsible AI dimensions. High-risk cases (e.g., those involving children) go to senior executives through BCG’s Responsible AI Policy and Council. Inputs are collaborative: engineers specify the technology and legal flags compliance issues, and the outputs become “system cards.” “By having multiple teams interact… BCG has been able to find a common language around how risks are defined and actioned.” Designed to hold up as regulations change, the process keeps teams aligned in real time.

These exemplars affirm a common lesson: start small, integrate deeply, collaborate widely. By the end of Part I (page 24), the report has equipped builders with tools to reduce risk without stifling innovation.

Part II: Professionalizing AI Governance – Cultivating Expertise in an Emerging Field

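Part II shifts to talent, where demand outstrips supply in this evolving discipline. Only 10% of organizations hire for dedicated AI governance roles, but 78% plan to add 1-10 people next year (an average of 9.8 hires), with North American organizations eyeing 11-20 (79%). Governance’s status as a strategic priority (47%) fuels this, rising to 50-100% among organizations planning large hiring rounds. Senior leads report to the C-suite in smaller or more mature programs and to SVPs or VPs in scaled ones. Revenue shapes plans: organizations under $100M favor 1-10 hires, while those above $1B plan more than 21. By headcount, organizations with more than 80,000 employees anticipate 51-75 hires.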

Recruitment rises with budget, from 6% of organizations with budgets under $100K to 38% of those above $5M, drawing on product development (36%) and ethics/compliance (21%). Talent gaps (23.5%) call for hybrid profiles: AI expertise plus governance, risk, and compliance (GRC), policy translation, and red teaming. Structures vary: AI governance is embedded in compliance or ethics (50%), stands alone, or is dispersed across functions, which yields inconsistent assessments. Over half foresee expansions in privacy (57%), legal (55%), security (53%), and IT (52%). Professionals come from diverse fields, and privacy professionals often pivot into AI governance.

Hiring Trends: Scaling Amid Scarcity

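There is no 2025 hiring boom, but specialization is growing: large organizations formalize dedicated roles, while smaller ones rely on generalists. Overall, 8% are recruiting, a share that rises with maturity. The ideal candidate pairs digital governance experience (often from privacy) with AI upskilling. In-demand skills are evolving toward model auditing and adversarial testing, with demand up 20% year over year. There is no standard reporting hierarchy; embed the function where existing strengths lie.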

Structures and Training: From Generalist to Specialist

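Embed AI governance in ethics, privacy, or legal for synergy, or split it across functions for coverage. Committees (39% overall) thrive in collaborative structures, reaching 71% where ethics is involved. Training options include certifications (such as IAPP’s AIGP), literacy programs, and deep dives into regulations and risks. Hire from adjacent fields, then specialize, bridging law and technology.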

Case Studies: Talent Forges Ahead

Kroll: Cross-Functional Risk Fusion

Kroll’s AI Risk practice mirrors its client work: a working group of lawyers, product and sales staff, data scientists, forensic technologists, and privacy professionals. It tackles legal and technical threats, from red teaming LLMs to IP purges, and its tools quantify and mitigate risk for audits. Committees balance competing stakes. “Formalize cross-functional structures… integrate technical and legal skills.” Kroll navigates the talent crunch through an internal advisory function.

IBM: Privacy-Ethics Synergy

IBM’s Office of Privacy and Responsible Technology evolved from its Chief Privacy Office, folding AI into the GDPR tooling it already used for assessments. A mosaic team of technical maintainers, legal, managers, and compliance staff works to mitigate bias. The AI Ethics Board sets policy, and compliance within business units speeds central oversight. Red teaming blends human and AI-driven testing, and global tools harmonize practices. “Leverage existing privacy infrastructure… build multidisciplinary teams.” This foundation underpins high confidence across use cases.

The lesson for professionalization: upskill rather than overhaul, and build scaffolding that lasts.

Part III: Leadership and Accountability – Steering the AI Ship

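Part III elevates governance to strategy, examining where AI governance is seated (IT 17%, privacy and legal 22% each, data 10%, ethics 6%, security 5%) and what difference the seat makes. Privacy-seated programs report 67% EU AI Act confidence, versus 36% for IT-seated ones, and ethics-seated programs reach 74%. Legal-seated programs peak in decision-making uses (26%), IT-seated ones in data uses (28%). IT lags on mandates (21% vs. 17%) and on data governance integration.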

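AI governance leads most often report to the general counsel (23%), the CEO (17%), or the CIO (14%). Privacy-seated programs report to counsel in 34% of cases, legal-seated programs report within legal in 56%, and IT-seated programs report to the CIO in 43%. Where an SVP leads, confidence reaches 71%, 20% above average, and SVPs collaborate more (with privacy in 20% of cases, with product in 25%). Challenges include a lack of board AI literacy (privacy, 57-66%), metrics (legal, 55-61%), and scope definition (IT, 52-59%, rising).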

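Additional duties are interdisciplinary; privacy, for example, takes on added AI governance work in 57% of organizations. Cross-functional collaboration is high (83% between privacy and executives), and committees (39-71%, depending on structure) help mitigate the gaps.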

Randstad: Ethical, Cross-Border Navigation

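Randstad seats AI governance in legal and data protection alongside ethics: a working group handles operations, a steering committee sets policy, and an advisory board addresses ethics. The EU AI Act classifies AI used in recruitment as high-risk, and Colorado’s AI law takes a similar line. This “unique approach” provides agility in a regulated sector, with its governing bodies balancing speed and culture.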

Cohere: Embedded Responsible ML

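Cohere’s Responsible ML team combines safety modelers, security specialists, and data preprocessing experts with legal, policy, product, and compliance staff. It aligns with NIST and CSAI standards, and on-premises deployment secures downstream use. Multilingual work helps combat bias and misinformation, and Cohere’s Safety Framework aligns with commitments in Canada, the U.K., and Seoul. Building “practical AI solutions” with safety designed in upstream builds trust.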

The leadership lesson: empower senior leaders, let committees oversee, and have functions execute. Accountability rests on transparency.

Deeper Dive: Cross-Cutting Themes and Data Visualizations

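The report’s visuals (bar charts, stacked bars, and trend arrows) demystify these trends. For example, the chart “Functions with additional AI governance responsibility by challenges” shows privacy at 57-66% (rising) and security at 53-61% (rising). Revenue breakdowns show privacy at 33% among larger organizations ($1-8.9B) and “none” at 44% among small ones; headcount breakdowns show privacy at 29% for organizations with 1,000-5,000 employees and “none” at 11% for those under 100. On EU AI Act confidence, product reaches 66% and HR 68%, while 22% of the “none” group are not confident (14% overall).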

The reporting-challenges chart shows privacy at 57-66% for board gaps and legal at 55-61% for metrics. These figures are not static; they point to a clear lesson: collaborate to shore up weaknesses.

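Several themes cut across the report. There is no monolith: programs are tailored to size (smaller organizations start slowly, larger ones formalize) and to motive (compliance versus efficiency). Organizations leverage privacy foundations while addressing AI-specific risks, such as hallucinations, with cybersecurity expertise. Mature programs share oversight bodies, clear communication, and AI literacy. “AI governance is not a one-size-fits-all approach… each organization ends up with a structure that fits its unique challenges.”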

Conclusion: Charting the Course Ahead

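Synthesizing its findings (pages 61-62), the report identifies clear patterns: integrated teams, structures adapted to organizational size, and flexibility for regulation. There is no universal model; organizations leverage privacy and compliance foundations and address AI-specific risks with cybersecurity and model experts. Hallmarks of maturity: oversight bodies, reporting, and training.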

Key figures: 39% have AI governance committees, and 52% are confident about AI Act compliance. Recommendations: inventory and prioritize risks, adapt existing processes, upskill across disciplines, and stay flexible as guidance evolves. “As more legislation… organizations will likely feel more of a push… AI governance can also be a catalyst for enterprise growth, enhancing brand credibility.”

Looking ahead to 2025-2026, regulations will proliferate, but governance both reduces risk and catalyzes growth. With 78% of organizations staffing up, benchmarking and sharing practices will matter. “Businesses that are unable to adopt AI are left behind.” Yet with responsible AI by design, scale awaits.