A privacy warning about Nuance Communications, and how your AI startup can avoid expensive regulatory actions and lawsuits by deploying the Captain Compliance privacy software to protect your business.

Imagine this: You’re calling your bank to check your balance, your voice a hurried murmur over a crackling line. “Yes, it’s me,” you say, authenticating yourself with a few simple words. The system hums approvingly, and you hang up, none the wiser that those fleeting syllables have been captured, digitized, and archived, your unique vocal fingerprint now a permanent resident in a corporate vault, potentially forever. This isn’t dystopian fiction; it’s the reality at the heart of a simmering privacy battle unfolding in America’s courtrooms, one that pits everyday consumers against the invisible machinery of AI-driven surveillance. At the epicenter stands Nuance Communications, a once-obscure tech giant whose voice-recognition wizardry powers everything from bank verifications to hospital dictations. But as of November 2025, Nuance’s story is no longer just about innovation; it’s a cautionary tale of unchecked data hunger, cascading breaches, and the fragile line between convenience and consent.

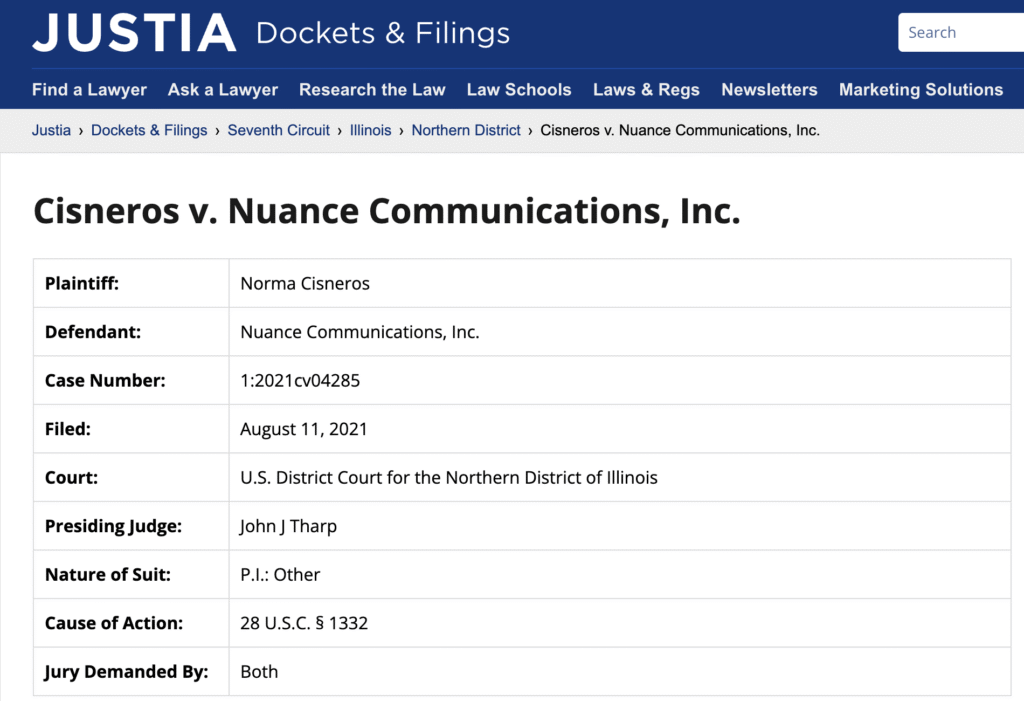

The Spark: Norma Cisneros and the Rise of the BIPA Challenge

Our story begins in the sterile glow of a Chicago courtroom in 2021, but its roots burrow deeper into the biometric gold rush of the early 2010s. Norma Cisneros, an unassuming Illinois resident and Charles Schwab customer, became the unlikely protagonist of this saga. Like millions, she used Schwab’s phone-based identity verification service, blissfully unaware that each call fed her voice into Nuance’s maw. Nuance, then an independent powerhouse in conversational AI, had inked a deal with Schwab to deploy its voice biometrics tech: software that analyzes speech patterns to confirm users with eerie accuracy. But Cisneros alleged something far more insidious in the lawsuit: Nuance wasn’t just listening; it was hoarding. Without her knowledge or consent, she claimed, her voiceprint, a digital template of her vocal timbre, pitch, and cadence, was extracted, stored, and retained in Nuance’s databases, potentially for years. This, she claimed, violated Illinois’ groundbreaking Biometric Information Privacy Act (BIPA), a 2008 law passed after the bankruptcy of fingerprint-payment company Pay By Touch stoked fears about where customers’ biometric data could end up.

Understanding BIPA: A Privacy Pioneer Under Siege

BIPA was no ordinary privacy shield. Unlike the toothless federal patchwork that leaves most data practices in a Wild West of self-regulation, it demands explicit written consent before companies collect, store, or share biometrics: your iris scans, fingerprints, or, crucially, voiceprints. Violations? Statutory damages of $1,000 per negligent violation and $5,000 per intentional or reckless one, plus attorney fees. That private right of action puts BIPA in the same family as the ECPA and CIPA lawsuits we’ve been covering, which are exploding. Not surprisingly, BIPA has spawned a litigation tsunami, transforming consumer privacy into a multi-billion-dollar battleground.
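To see how quickly per-violation damages add up, here is a minimal back-of-the-envelope sketch. It uses the figures in BIPA's statutory text ($1,000 per negligent violation, $5,000 per intentional or reckless one). The function name and the sample class size are hypothetical, and how violations are actually counted is a legal question this sketch does not try to answer.

```python
# Liquidated damages per violation under BIPA's statutory text.
NEGLIGENT_PER_VIOLATION = 1_000
RECKLESS_PER_VIOLATION = 5_000


def statutory_exposure(negligent: int, reckless: int = 0) -> int:
    """Return a rough statutory-damages figure in dollars.

    Hypothetical helper: it multiplies violation counts by the statutory
    amounts and ignores attorney fees, actual damages, and any dispute
    over how violations are counted.
    """
    if negligent < 0 or reckless < 0:
        raise ValueError("violation counts cannot be negative")
    return negligent * NEGLIGENT_PER_VIOLATION + reckless * RECKLESS_PER_VIOLATION


# Illustrative only: a made-up class of 10,000 callers, one negligent violation each.
print(f"${statutory_exposure(negligent=10_000):,}")
```

Even a modest class lands in eight figures, which is why vendors fight so hard over who is covered in the first place.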

- Key Provisions of BIPA:

  - Requires written consent for biometric data collection.

  - Mandates public policies on data retention and destruction.

  - Prohibits sale or disclosure without permission.

- Landmark Impacts: Over 1,700 BIPA suits were filed by 2023, targeting tech behemoths and reshaping data practices.
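For an AI startup, the consent and retention provisions translate into two checks that can sit in front of any biometric pipeline. The sketch below is illustrative, not legal advice: the `VoiceprintRecord` type, its field names, and both helper functions are hypothetical, and the three-year outer limit reflects our reading of BIPA's retention schedule (destroy when the original purpose is satisfied or within three years of the person's last interaction, whichever comes first).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumed outer retention limit: three years after the last interaction.
RETENTION_LIMIT = timedelta(days=3 * 365)


@dataclass
class VoiceprintRecord:
    """Hypothetical record a vendor might keep alongside a voiceprint."""
    user_id: str
    written_consent_at: Optional[datetime] = None
    last_interaction_at: Optional[datetime] = None


def may_collect(record: VoiceprintRecord) -> bool:
    """Collection is allowed only once written consent is on file."""
    return record.written_consent_at is not None


def must_destroy(record: VoiceprintRecord, now: datetime, purpose_satisfied: bool) -> bool:
    """Destroy when the purpose is met or the retention limit has passed."""
    if purpose_satisfied:
        return True
    if record.last_interaction_at is None:
        return False
    return now - record.last_interaction_at >= RETENTION_LIMIT


enrolled = datetime(2021, 1, 1, tzinfo=timezone.utc)
caller = VoiceprintRecord("caller-1", written_consent_at=enrolled, last_interaction_at=enrolled)
print(may_collect(caller))
print(must_destroy(caller, enrolled + timedelta(days=1200), purpose_satisfied=False))
```

Running a check like `must_destroy` on a schedule, and logging each deletion, is one way to make a published retention policy something you can actually demonstrate.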

Notable BIPA cases illustrate the law’s reach:

- Facebook (2015): Sued for creating face maps from photo tags without consent; settled for $650 million in 2021.

- TikTok (2020): Accused of scanning faces via filters; agreed to a $92 million class settlement in 2021, highlighting app-based biometrics risks.

- Clearview AI (2021): Scraped billions of faces from the web; faced multiple BIPA suits leading to temporary Illinois bans.

- Shutterfly (2016): Photo printing service hit for facial recognition; settled for $4.5 million.

Cisneros’ suit, filed as a class action on behalf of potentially millions of Schwab callers, argued Nuance had flouted these rules wholesale. According to the complaint, Nuance didn’t just collect without permission; it also misrepresented its deletion policies, keeping voice data long after any “need” expired. In her complaint, Cisneros painted a picture of perpetual vulnerability: What if hackers breached the vault? What if Nuance sold her voiceprint to advertisers, or worse, authoritarian regimes experimenting with deepfake audio?

The Courtroom Clash: Seventh Circuit’s Skeptical Spotlight

Nuance fired back with corporate armor. As a vendor to a financial giant like Schwab, they invoked the Gramm-Leach-Bliley Act (GLBA), a 1999 banking privacy law that carves out exemptions for financial institutions handling customer data. Nuance positioned itself as an extension of Schwab’s operations—its voice tech wasn’t a rogue collection; it was essential “financial activity.” A federal district judge in Chicago bought it, dismissing the case in 2024 with a ruling that echoed through boardrooms: Vendors could hide behind their clients’ shields, diluting BIPA’s bite.

But Cisneros appealed, hauling the fight to the Seventh Circuit Court of Appeals in Chicago. Oral arguments on October 27, 2025, crackled with judicial skepticism, a three-judge panel—spanning Reagan, Trump, and Biden appointees—probing like surgeons. Judge Frank Easterbrook, the panel’s elder statesman, zeroed in on the existential core: injury. “What’s the harm in keeping a secret you already know?” he pressed Cisneros’ lawyer, Michael Wise, likening voice retention to a doctor remembering a patient’s ailment without jotting it down. Wise countered fiercely: Under BIPA, the harm is the retention—a continuing invasion, like squatting in your home after the lease ends. Nuance’s own policies promised deletion post-use, yet data lingered, ripe for abuse. Nuance’s counsel, Annie Kastanek, leaned on GLBA’s broad umbrella but faltered when pressed on standing—the constitutional requirement that plaintiffs show concrete, particularized harm, not just statutory violations.

The judges weren’t sold. “This feels like a technicality chase,” Easterbrook mused, echoing a post-TransUnion (2021) Supreme Court trend demanding “real-world” injuries for class actions. They ordered supplemental briefs on standing within two weeks, leaving the case in limbo as of early November 2025. The ripple effects were visible even before the panel convened: In August 2025, a federal judge paused a parallel BIPA suit against Coinbase, which alleges the crypto exchange slurped facial scans via third-party verifiers, to await the Seventh Circuit’s word on whether vendors like Nuance get a free pass.

Potential Outcomes and Broader Ramifications

- If Cisneros Wins:

  - Forces consent mechanisms in voice biometrics, like mid-call disclosures.

  - Unleashes suits against AI vendors in finance, healthcare, and beyond.

  - Strengthens BIPA as a model for national biometric laws.

- If Nuance Prevails:

  - Widens vendor exemptions, shielding third-party AI from liability.

  - Encourages more “always-on” surveillance in essential services.

  - Highlights need for federal reforms to close GLBA-BIPA gaps.

If Cisneros loses, BIPA’s vendor loophole widens, shielding AI middlemen in an era when voice biometrics underpin everything from Amazon’s Alexa to Uber’s driver checks. Win, and it could unleash a fresh wave of suits, forcing consent pop-ups mid-call.

Nuance’s Shadowy Past: Breaches, Complaints, and the Microsoft Merger

This courtroom cliffhanger isn’t isolated; it’s the latest verse in Nuance’s ballad of privacy pratfalls, a tune that started harmonizing with broader tech horrors long before Cisneros picked up the phone. Founded in 1992, Nuance rocketed from speech-to-text pioneer (think Dragon NaturallySpeaking, the dictation tool that freed typists’ fingers) to AI overlord, its tech woven into 77 of the top 100 U.S. hospitals and countless call centers. But power bred peril.

Early Warnings: From Toy Dolls to Always-On Ears

As early as 2016, the Electronic Privacy Information Center (EPIC) dragged Nuance into an FTC complaint alongside Genesis Toys, accusing them of surreptitiously harvesting kids’ voices from internet-connected dolls: audio snippets beamed to servers without parental consent, a creepy precursor to today’s smart toys. Echoes of Amazon’s 2019 admission that Alexa workers reviewed raw audio snippets? Or Google’s 2019 blunder, when it emerged that Assistant recordings were transcribed by humans? Nuance was there first, normalizing the “always-on ear” in an age of surveillance capitalism, where voices become commodities traded in shadows.

The Breach Cascade: Data Leaks That Shook Trust

Then came the breaches—catastrophic, compounding failures that make the Schwab suit look like a footnote.

- 2016 FTC Complaint: Kids’ voices from smart toys harvested without consent.

- June 2023 MOVEit Hack: Exposed 1.2 million patients’ data, including names, addresses, and medical notes from partners like Geisinger Health.

- 2024 Insider Theft: Rogue ex-employee stole over 1 million Geisinger records in a vengeful data dump.

Nuance swore its core systems were untouched in the MOVEit incident, but the fallout scorched: Class actions piled up, regulators circled, and trust eroded. It was a grim reprise of Equifax’s 2017 mega-breach (147 million exposed) or the 2021 Colonial Pipeline ransomware, underscoring how third-party vendors like Nuance are the soft underbelly of critical infrastructure. In healthcare, where Nuance’s Dragon Medical scribes 500 million clinical encounters yearly, these lapses aren’t abstract—they’re lives upended, from identity theft to stigma over leaked HIV diagnoses. Nuance’s response? Notifications, audits, but no seismic shift, mirroring Anthem’s 2015 breach of 78 million health records or Change Healthcare’s 2024 ransomware saga that paralyzed U.S. pharmacies.

The Microsoft Acquisition: Amplifying the Privacy Hydra

Enter Microsoft, the acquisition that supercharged Nuance’s reach—and its risks. In April 2021, Satya Nadella dropped $19.7 billion to swallow Nuance whole, touting it as a healthcare AI moonshot: Azure clouds humming with voice analytics to “transform care.” By March 2022, the deal closed amid EU and UK antitrust probes over market dominance in speech tech. On paper, it was synergy: Microsoft’s scale bolstering Nuance’s HIPAA-compliant vaults. In practice? A privacy hydra. Post-merger, Nuance data flows into Microsoft’s ecosystem, where privacy policies coyly note transfers in “asset sales” could expose info to new overlords. Imagine your Schwab voiceprint mingling with your Outlook emails or Teams calls—cross-pollination ripe for profiling.

Threads to the Bigger Picture: Nuance in the Privacy Apocalypse

This merger amplifies the stakes, threading Nuance’s sins into the tapestry of Big Tech’s privacy apocalypse. Recall Cambridge Analytica’s 2018 unmasking of Facebook’s data firehose, weaponized for psyops? Or Apple’s 2021 sideloading fights, shielding iPhones from app-store snoops? Nuance under Microsoft embodies the healthcare variant: AI that “helps” doctors but hoards patient voices for training models, echoing Google’s 2019 Project Nightingale, where it gathered millions of Ascension patient records without patients’ knowledge, sparking fury over “data colonialism.” In voice biometrics, the perils multiply: deepfakes forged from stolen prints could impersonate you in calls, as ElevenLabs’ 2023 demos chilled experts. And with BIPA’s vendor exemption in play, Microsoft’s empire (now powering Copilot health advisors) could sidestep scrutiny, much like Amazon’s Alexa held on to kids’ voice recordings despite COPPA’s children’s privacy rules until the FTC’s slap in 2023.

The Road Ahead: Reforms, Risks, and the Fight for Silence

As November 2025 wanes, Cisneros v. Nuance hangs in judicial balance, a microcosm of our fraying digital compact. Will courts demand “injury” beyond the chill of non-consent, or recognize retention as the theft it is? Broader reforms loom: California’s CPRA expansions, the EU’s AI Act classifying biometrics as “high-risk,” even Biden’s 2023 executive order on AI safety nodding to privacy. Yet without enforcement teeth, Nuance’s saga warns: In the race for ambient intelligence, your voice isn’t yours alone. It’s echoed, archived, and ever-ready for the next bidder. Cisneros fights for deletion rights; we all fight for silence in the storm. The Seventh Circuit’s whisper could be the thunder that finally wakes the giants, or lets them hum on, unheard.