This month there was a “McBreach” on the McHire AI platform. It was a shocking security breach for McDonald’s executives: the backend of the platform’s AI hiring chatbot exposed the personal information of approximately 64 million job applicants worldwide. McHire is powered by an AI chatbot named Olivia, developed by Paradox.ai. Security researchers Ian Carroll and Sam Curry discovered that the username and password “123456” granted access to the platform’s backend, revealing applicants’ names, email addresses, phone numbers, and chat logs. The incident highlights critical vulnerabilities in AI-driven systems and raises broader questions about automated decision-making, data privacy laws, and the risks of AI misuse in industries like banking. Given the scale of this McDonald’s data breach, it remains to be seen whether it will prompt lawsuits under the CCPA’s private right of action. How will these implications play out, alongside the evolving landscape of AI regulation, fines for privacy violations, and lessons from McDonald’s past legal troubles such as the infamous hot coffee lawsuit?

The McHire Breach: A Case Study in Security Negligence

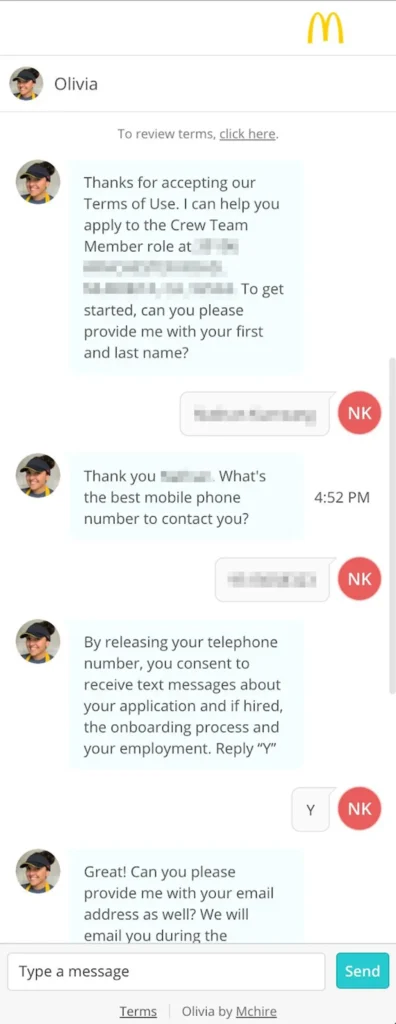

The McHire platform, used by over 90% of McDonald’s 40,000+ restaurants worldwide, relies on Olivia to streamline recruitment by screening applicants, collecting résumés, and administering personality tests. However, basic security oversights, such as a test account with the password “123456” and no multi-factor authentication (MFA), allowed researchers to reach 64 million applicant records in just 30 minutes. An Insecure Direct Object Reference (IDOR) vulnerability then let them retrieve any applicant’s data simply by changing an ID parameter in a request, exposing sensitive information like authentication tokens and chat logs.
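
To make the IDOR pattern concrete, here is a minimal Python sketch. It does not reproduce McHire’s actual API, which is not described here; the `Applicant` record, the in-memory `APPLICANTS` store, the `owner_account` field, and both handler functions are hypothetical. The vulnerable handler trusts whatever ID arrives in the request, while the fixed one checks that the caller is authorized for that specific record.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    applicant_id: int
    owner_account: str  # hypothetical: the account allowed to view this record
    name: str
    email: str


# Hypothetical in-memory store standing in for the backend database.
APPLICANTS = {
    1001: Applicant(1001, "franchise-A", "Alice", "alice@example.com"),
    1002: Applicant(1002, "franchise-B", "Bob", "bob@example.com"),
}


class Forbidden(Exception):
    """Raised when the caller may not access the requested record."""


def get_applicant_vulnerable(applicant_id: int, account: str) -> Applicant:
    # IDOR: the ID comes straight from the request and `account` is never checked,
    # so any logged-in user can read any record by changing the number.
    return APPLICANTS[applicant_id]


def get_applicant_checked(applicant_id: int, account: str) -> Applicant:
    # Fixed: look the record up, then verify the caller owns it.
    record = APPLICANTS.get(applicant_id)
    if record is None or record.owner_account != account:
        # Same error for "missing" and "not yours" so IDs cannot be probed.
        raise Forbidden("not authorized for this applicant")
    return record
```

With the vulnerable handler, an account for one franchise can read another franchise’s applicant just by changing the ID; the checked handler raises `Forbidden` instead. Returning the same error for missing and unauthorized records also keeps attackers from using error messages to enumerate valid IDs.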

The breach’s scale is staggering, affecting job seekers across multiple countries. While Paradox.ai claimed that only a fraction of the records contained sensitive data, the potential for harm is significant. Exposed information could fuel phishing scams, payroll fraud, or identity theft, because applicants eagerly awaiting a response about a job are prime targets for impersonation schemes. Fortunately, McDonald’s and Paradox.ai acted quickly, fixing the vulnerability by June 30, 2025, and Paradox.ai has since launched a bug bounty program to catch future issues. Still, the incident underscores a critical lesson: AI systems handling personal data must prioritize robust cybersecurity and data security practices. More rigorous privacy impact assessments and training could have prevented this whole ordeal. McDonald’s would be well advised to work with a company like Captain Compliance, which could have helped it avoid an issue that may end up costing hundreds of millions of dollars in litigation and fines.

McDonald’s History of Risk: The Hot Coffee Lawsuit

This is not McDonald’s first brush with public scrutiny over negligence. The 1994 hot coffee lawsuit, in which Stella Liebeck sued McDonald’s after suffering severe burns from scalding coffee, remains a landmark case. A jury awarded Liebeck $2.86 million, later reduced to $640,000 by the trial judge, after concluding that McDonald’s had recklessly disregarded customer safety by serving coffee at dangerously high temperatures despite knowing the risks. The case should have made McDonald’s risk-averse, yet the McHire breach suggests persistent lapses in oversight, now in the digital realm. Just as serving unsafe coffee led to costly damages and copycat lawsuits, failing to secure sensitive applicant data could expose McDonald’s to lawsuits, fines, and eroded trust that may cost far more than the Liebeck litigation ever did. The company’s history should serve as a reminder to prioritize rigorous risk management, especially when adopting cutting-edge technologies like AI, and to ask Captain Compliance or your data privacy software provider for advice on navigating tricky situations.

Automated Decision-Making: Opportunities and Risks

The McHire platform exemplifies the growing reliance on automated decision-making (ADM) systems, where AI algorithms screen, evaluate, and filter candidates. These systems promise efficiency but introduce significant risks, particularly when poorly secured or biased. The McHire breach exposed how AI systems can become attack vectors if backend infrastructure lacks basic protections like strong passwords, MFA, and regular audits.
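
MFA can take several forms; one common second factor is a time-based one-time password (TOTP) from an authenticator app. The sketch below implements TOTP as specified in RFC 4226 and RFC 6238 using only the Python standard library, assuming HMAC-SHA1 and 30-second time steps (the usual defaults). It illustrates the mechanism only and says nothing about how McHire or Paradox.ai actually authenticate users; production systems should rely on a vetted library and store shared secrets securely.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept a code from the current step or +/- `window` steps to tolerate clock drift."""
    now = time.time() if at is None else at
    current = int(now // step)
    for counter in range(max(0, current - window), current + window + 1):
        expected = hotp(secret, counter, len(submitted))
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

Using the RFC 6238 test secret `b"12345678901234567890"` at Unix time 59, `totp(secret, at=59, digits=8)` returns `"94287082"`, matching the RFC’s published test vector. A real verifier would also remember recently accepted codes so the same code cannot be replayed within its window.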

Beyond security, ADM systems can perpetuate bias. For instance, if Olivia’s personality tests or screening algorithms favor certain traits, they may inadvertently discriminate based on age, gender, or socioeconomic status. In 2023, iTutorGroup agreed to pay $365,000 to settle a lawsuit brought by the U.S. Equal Employment Opportunity Commission (EEOC), which alleged that its recruitment software automatically rejected female applicants aged 55 or older and male applicants aged 60 or older, in violation of the Age Discrimination in Employment Act. Such cases highlight the need for transparency and fairness in ADM systems, as opaque algorithms can produce discriminatory outcomes.

AI Risks in Banking: Denying Applicants

The risks of ADM extend beyond recruitment to industries like banking, where AI is increasingly used to evaluate loan and credit card applications. In 2023, the Berlin Data Protection Authority fined a bank €300,000 for failing to give an applicant a transparent explanation of why an automated system had rejected their credit card application. Automated rejections without clear explanations can breach the GDPR’s transparency requirements and leave applicants unable to challenge unfair decisions.
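
Because the Berlin fine concerned a missing explanation, it helps to see what an explainable automated decision can look like. One common pattern is to return the principal reasons for a rejection together with the decision itself. The sketch below assumes a deliberately simple, hypothetical linear scoring model: the feature names, weights, reference profile, threshold, and reason texts are all invented for illustration and do not describe any real bank’s system. Each feature’s contribution is measured against the reference profile, and the features that pulled the score down the most become the stated reasons.

```python
from dataclasses import dataclass, field

# Hypothetical model parameters, invented for illustration.
WEIGHTS = {
    "debt_to_income": -4.0,          # a higher ratio lowers the score
    "missed_payments_12m": -1.5,     # each missed payment lowers the score
    "years_of_credit_history": 0.3,  # a longer history raises the score
}
REFERENCE = {"debt_to_income": 0.3, "missed_payments_12m": 0, "years_of_credit_history": 5}
THRESHOLD = -1.0
REASON_TEXT = {
    "debt_to_income": "Debt is high relative to income",
    "missed_payments_12m": "Recent missed payments",
    "years_of_credit_history": "Limited length of credit history",
}


@dataclass
class Decision:
    approved: bool
    score: float
    reasons: list[str] = field(default_factory=list)


def decide(applicant: dict[str, float], max_reasons: int = 2) -> Decision:
    # Contribution of each feature relative to the reference applicant.
    contributions = {k: w * (applicant[k] - REFERENCE[k]) for k, w in WEIGHTS.items()}
    score = sum(contributions.values())
    if score >= THRESHOLD:
        return Decision(approved=True, score=score)
    # The most negative contributions explain the rejection.
    negatives = sorted((c, k) for k, c in contributions.items() if c < 0)
    reasons = [REASON_TEXT[k] for _, k in negatives[:max_reasons]]
    return Decision(approved=False, score=score, reasons=reasons)
```

For an applicant with a 0.6 debt-to-income ratio, one missed payment, and two years of credit history, `decide` rejects the application and lists “Recent missed payments” and “Debt is high relative to income” as the reasons. A real system would also need to confirm that the stated reasons faithfully reflect the model and are understandable to applicants; this sketch only shows the shape of the output.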

AI-driven denials in banking can also amplify bias. Algorithms trained on historical data may reject applicants from marginalized communities because that data reflects past discrimination, perpetuating systemic inequalities. In the U.S., the Consumer Financial Protection Act prohibits deceptive practices, including those involving automated tools: in 2022, the Consumer Financial Protection Bureau (CFPB) ordered Hello Digit to pay a $2.7 million penalty over misleading claims about its automated savings algorithm, which caused customer overdrafts and withheld interest owed to customers. These examples underscore the need for robust oversight to ensure AI systems are fair, transparent, and secure.

Data Privacy Laws and AI Regulation

United States

In the U.S., data privacy laws vary by state, creating a patchwork of regulations. The California Consumer Privacy Act (CCPA), in effect since 2020, grants consumers rights to access and delete their data and to opt out of its sale or sharing. A breach like McHire’s could lead to regulatory penalties and, where certain categories of unencrypted personal information are exposed because of inadequate security, to consumer lawsuits under the CCPA’s private right of action. Illinois’ Biometric Information Privacy Act (BIPA) imposes strict rules on collecting biometric data, with damages of $1,000 per negligent violation and $5,000 per intentional or reckless violation. Federal laws like HIPAA and HITECH also impose penalties for breaches of health data: before inflation adjustments, civil penalties range from $100 to $50,000 per violation, with an annual cap of $1.5 million per violation type, depending on the organization’s level of culpability.
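
Statutory damages under the CCPA’s private right of action scale with the number of affected consumers, which is why large breaches attract class actions. The sketch below does only that arithmetic, and it assumes the claim qualifies, which is not established here. The private right of action covers breaches of nonencrypted and nonredacted personal information of the kinds listed in Cal. Civ. Code § 1798.81.5 (for example, a name combined with a Social Security or financial account number) caused by a failure to maintain reasonable security, and it is unclear whether McHire’s exposed fields meet that definition. The affected-consumer count in the usage example is hypothetical.

```python
# Statutory damages per consumer per incident under Cal. Civ. Code § 1798.150(a)(1)(A),
# using the amounts written in the statute (they are subject to periodic CPI adjustment).
MIN_PER_CONSUMER_USD = 100
MAX_PER_CONSUMER_USD = 750


def ccpa_statutory_damages_range(affected_californians: int, incidents: int = 1) -> tuple[int, int]:
    """Return (minimum, maximum) statutory damages in USD for a hypothetical class.

    Plaintiffs may instead recover actual damages if those are greater;
    that alternative is not modeled here.
    """
    if affected_californians < 0 or incidents < 0:
        raise ValueError("counts must be non-negative")
    claims = affected_californians * incidents
    return claims * MIN_PER_CONSUMER_USD, claims * MAX_PER_CONSUMER_USD
```

For a hypothetical one million affected California residents, `ccpa_statutory_damages_range(1_000_000)` returns `(100000000, 750000000)`, that is, $100 million to $750 million in potential statutory damages from a single incident. The number of Californians in the McHire data has not been reported.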

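AI-specific regulations are emerging. New York City’s Local Law 144, enforced since July 2023, requires employers using automated employment decision tools to have them independently audited for bias, publish a summary of the results, and notify candidates that such tools are in use. Colorado and Illinois have since passed laws targeting algorithmic discrimination in decisions such as hiring. These rules focus mainly on bias and transparency rather than security, but they reflect the growing scrutiny of ADM systems, scrutiny the McHire breach, in which poor security exposed millions to potential harm, is likely to intensify.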
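
Under the rules New York City adopted to implement Local Law 144, a bias audit reports an impact ratio for each demographic category: that category’s selection rate divided by the selection rate of the most selected category. A minimal sketch of the calculation follows; the category labels and counts are hypothetical. The 0.8 flag reflects the EEOC’s long-standing “four-fifths” rule of thumb for adverse impact; Local Law 144 itself requires publishing the ratios but does not set a pass/fail threshold.

```python
def impact_ratios(selected: dict[str, int], applicants: dict[str, int]) -> dict[str, float]:
    """Selection rate of each category divided by the highest category selection rate."""
    rates = {g: selected.get(g, 0) / n for g, n in applicants.items() if n > 0}
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no category had any selections")
    return {g: rate / best for g, rate in rates.items()}


def flag_adverse_impact(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Categories below the four-fifths benchmark (a rule of thumb, not a legal finding)."""
    return sorted(g for g, r in ratios.items() if r < threshold)


# Hypothetical audit data: group_a selection rate 0.30, group_b 0.15.
applicants = {"group_a": 200, "group_b": 100}
selected = {"group_a": 60, "group_b": 15}
ratios = impact_ratios(selected, applicants)  # group_a: 1.0, group_b: 0.5
print(flag_adverse_impact(ratios))            # ['group_b']
```

Here group_b is selected at half the rate of group_a, well below the four-fifths benchmark, which is the kind of result an auditor would highlight for further review.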

European Union

The EU’s General Data Protection Regulation (GDPR), in effect since 2018, sets a global standard for data privacy. It mandates strong security measures, transparency, and a lawful basis, such as consent, for data processing. The most serious violations can result in fines of up to €20 million or 4% of annual global turnover, whichever is higher, while breaches of obligations such as the Article 32 security requirements carry fines of up to €10 million or 2%. In 2022, Italy’s Data Protection Authority fined Clearview AI €20 million for collecting biometric data without a legal basis, highlighting GDPR’s enforcement power. If the McHire breach affected applicants in the EU, it could trigger significant penalties, especially given the lack of MFA and the weak credentials.

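The EU AI Act, which entered into force in August 2024, further regulates AI systems. It classifies AI applications by risk, and systems used in hiring are treated as high-risk, subject to strict requirements including transparency, human oversight, and cybersecurity. Fines reach up to €35 million or 7% of global annual turnover for prohibited AI practices and up to €15 million or 3% for breaches of most other obligations, including those governing high-risk systems. Under the Act’s timetable, most high-risk obligations apply from August 2026, so they postdate this breach, but a hiring platform like McHire operating in the EU would need to comply with them going forward.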
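
The “whichever is higher” caps in both laws are simple arithmetic, but the tier matters. Here is a minimal sketch of the maximum fines for a company that is not an SME, assuming the tiers in GDPR Article 83(4) and 83(5) and AI Act Article 99(3) and 99(4); the turnover figure is hypothetical, and these are statutory ceilings, not predictions of what a regulator would impose. Under the AI Act, SMEs and start-ups face the lower of the two amounts instead, which this sketch does not model.

```python
# Statutory ceilings as (fixed amount in EUR, percent of worldwide annual turnover).
GDPR_TIERS = {
    "art_83_4": (10_000_000, 2),  # e.g. Article 32 security obligations
    "art_83_5": (20_000_000, 4),  # e.g. processing principles, data subject rights
}
AI_ACT_TIERS = {
    "prohibited_practice": (35_000_000, 7),  # Article 99(3)
    "other_obligation": (15_000_000, 3),     # Article 99(4), incl. high-risk system duties
}


def max_fine_eur(turnover_eur: int, fixed_eur: int, percent: int) -> int:
    """Ceiling for a non-SME: the fixed amount or the turnover share, whichever is higher."""
    if turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    # Integer arithmetic avoids floating-point rounding on large turnover figures.
    return max(fixed_eur, turnover_eur * percent // 100)


# Hypothetical company with EUR 1 billion in worldwide annual turnover.
turnover = 1_000_000_000
for law, tiers in (("GDPR", GDPR_TIERS), ("AI Act", AI_ACT_TIERS)):
    for tier, (fixed, pct) in tiers.items():
        print(law, tier, max_fine_eur(turnover, fixed, pct))
```

For the hypothetical €1 billion turnover, the loop prints ceilings of €20 million and €40 million under the two GDPR tiers and €70 million and €30 million under the two AI Act tiers. For a much smaller company, the fixed amount dominates instead.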

International Fines for AI and Privacy Misuse

Globally, regulators are cracking down on privacy violations involving automated systems and large-scale data processing. In 2020, the UK’s Information Commissioner’s Office (ICO) fined CRDNN Ltd £500,000 for making millions of automated nuisance marketing calls in breach of the Privacy and Electronic Communications Regulations. In 2023, Ireland’s Data Protection Commission fined Meta €1.2 billion for GDPR violations related to transfers of EU users’ data to the United States, demonstrating the high cost of non-compliance. These cases illustrate growing regulatory scrutiny and the need for companies to prioritize security and compliance.

Lessons for McDonald’s and AI Risks for Corporate Giants

The McHire breach is a cautionary tale for companies adopting AI, because it is a cybersecurity risk and a data privacy risk all in one. First, basic cybersecurity practices, such as strong passwords, MFA, and regular audits, are non-negotiable. The use of “123456” as a password reflects a failure of oversight that a Captain Compliance privacy impact assessment of the new software tool could have caught with minimal effort. McDonald’s likely did not have a privacy partner to flag this risk and prevent the breach from happening. Second, third-party vendors like Paradox.ai must be held to the same security standards as internal systems. McDonald’s swift response and Paradox.ai’s bug bounty program are steps in the right direction, but proactive measures are essential.
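
A check for the exact failure described here takes only a few lines. The sketch below flags weak credentials and accounts without MFA; the tiny `COMMON_PASSWORDS` set, the 12-character minimum, and the input format of `accounts_without_mfa` are assumptions for illustration. Rejecting commonly used or previously breached passwords follows NIST SP 800-63B guidance, though a real deployment would screen against a much larger breached-password corpus and run the password check when a password is set rather than on stored passwords.

```python
# Illustrative blocklist only; real systems screen against large breached-password lists.
COMMON_PASSWORDS = {"123456", "123456789", "12345678", "password", "qwerty"}


def password_problems(username: str, password: str, min_length: int = 12) -> list[str]:
    """Return the policy problems with a proposed password (empty list means acceptable)."""
    problems = []
    if len(password) < min_length:
        problems.append(f"shorter than {min_length} characters")
    if password.lower() in COMMON_PASSWORDS:
        problems.append("on the common-password blocklist")
    if password.lower() == username.lower():
        problems.append("same as the username")
    return problems


def accounts_without_mfa(mfa_enabled: dict[str, bool]) -> list[str]:
    """List account names (including admin or test accounts) that have no second factor."""
    return sorted(name for name, enabled in mfa_enabled.items() if not enabled)


print(password_problems("123456", "123456"))
print(accounts_without_mfa({"admin": True, "test-account": False}))
```

The first call reports all three problems for a “123456”/“123456” login, and the second flags `test-account` for lacking MFA. Running checks like these across vendor-managed accounts is one concrete way to hold third parties to the same standard as internal systems.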

Third, companies must consider the broader implications of ADM. AI systems like Olivia collect vast amounts of data, making them prime targets for hackers. Robust encryption, access controls, and secure coding practices are critical to protect sensitive information. Finally, compliance with data privacy laws and AI regulations is not optional. The McHire breach could expose McDonald’s to fines under numerous privacy laws and AI regulations, especially if affected applicants pursue legal action. Given McDonald’s size, the company has a target on its back whenever mishaps like this occur, particularly ones stemming from poorly managed third-party risk.

McHire Data Breach: Privacy Lessons Learned

The McDonald’s McHire breach serves as a stark reminder that AI’s benefits come with significant risks. The exposure of 64 million applicants’ data due to a laughably weak password underscores the need for robust cybersecurity in AI-driven systems. As industries like banking increasingly rely on ADM, the risks of bias, opaque decision-making, and data breaches grow. Emerging regulations like the EU AI Act and U.S. state laws aim to address these challenges, but compliance requires proactive effort. McDonald’s history with the hot coffee lawsuit should have instilled a culture of risk aversion, yet the McHire breach suggests gaps remain. By prioritizing security, transparency, and compliance, companies can harness AI’s potential while safeguarding user trust and avoiding costly fines. The McHire incident is a wake-up call for the tech industry: innovation must not come at the expense of privacy and security. If you’re a major enterprise, you need to be working with CaptainCompliance.com to avoid costly issues like this.