Researchers have uncovered a troubling amount of personal information lurking in one of the largest open-source datasets used to train AI models. The dataset, known as DataComp CommonPool, contains 12.8 billion image-text pairs drawn from web data collected between 2014 and 2022 by the nonprofit Common Crawl. Released in 2023 for research but also open to commercial use, it has been downloaded more than 2 million times and used to help build generative image tools.

This finding, highlighted in a recent piece by Eileen Guo at MIT Technology Review, exposes the dangers of scraping the internet without adequate safeguards, and it’s a wake-up call for anyone concerned about data privacy and legal compliance.

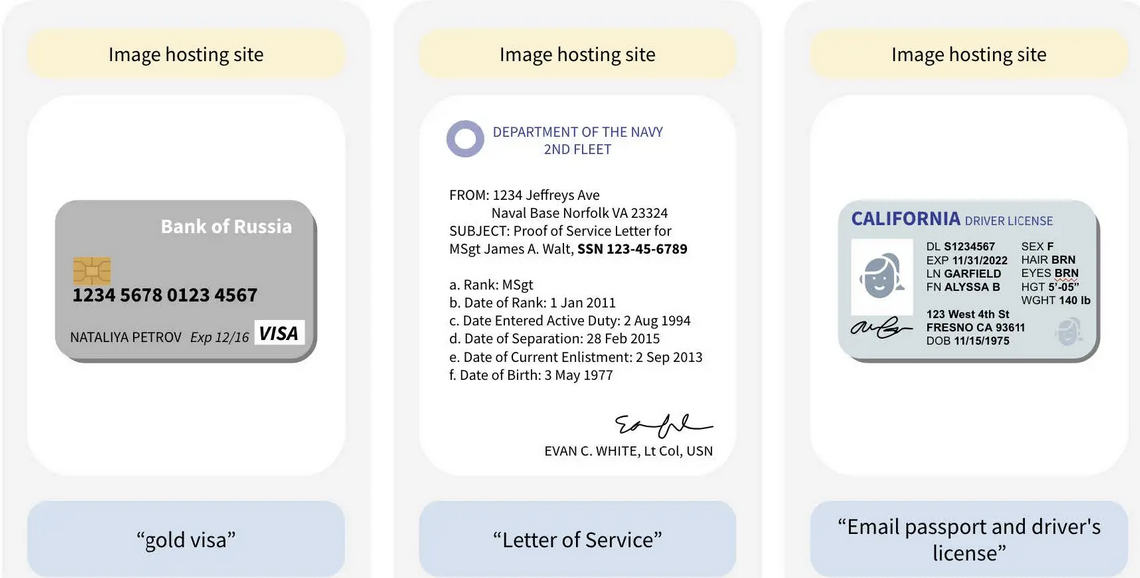

The study, led by researchers including William Agnew, a postdoctoral fellow in AI ethics at Carnegie Mellon University, and Rachel Hong, a PhD student in computer science at the University of Washington, examined only 0.1% of the dataset. Even so, the team found thousands of images of real identity and financial documents, such as passports, driver’s licenses, birth certificates, and credit cards, plus more than 800 job applications, including résumés and cover letters.

Extrapolating from that sample, the team estimates that the full dataset could contain hundreds of millions of images with personal information, including more than 102 million faces that slipped past automatic blurring. The résumés alone exposed details such as disability status, background checks, racial information, government IDs, home addresses, and even the birthplaces of family members. As Agnew puts it, “Anything you put online can be and probably has been scraped,” a reminder that everyday online posts can end up in AI training data without our knowledge.

This isn’t the first time such problems have surfaced. They mirror issues with datasets like LAION-5B, which has been called out for containing private medical records, child sexual abuse material, and biased content that fuels discrimination. Back in 2022, investigations found sensitive medical images in LAION-5B, and a 2023 report confirmed that it linked to illegal content, sparking demands for better oversight and transparency.

These datasets, often hosted on platforms like Hugging Face that offer tools for requesting data removal, show how AI training can pull in personal information without permission, putting people’s safety at risk.

Privacy Implications and Risks: A Compliance Nightmare

Personally identifiable information (PII) slipping into DataComp CommonPool creates serious privacy problems, especially since AI models can memorize and reproduce sensitive training data. As Hong explains, “There are many downstream models that are all trained on this exact data set,” so the problem spreads to real-world tools, opening the door to identity theft, scams, or harassment.

Experts like Abeba Birhane of Trinity College Dublin note that “any large-scale web-scraped data always contains content that shouldn’t be there,” meaning that sweeping in material that should have been excluded is practically guaranteed, filters or not.

AI Data Risks:

- Data Exposure and Exploitation: With credit card numbers, Social Security details, and children’s information exposed, people are more vulnerable than ever. Ben Winters of the Consumer Federation of America calls this the “original sin of AI systems built off public data—it’s extractive, misleading, and dangerous,” since no one expected their information to be vacuumed up to train image generators. On X (formerly Twitter), privacy advocates are discussing how these leaks could lead to psychological harms, such as manipulation based on profiled habits or being trapped in echo chambers.

- Legal and Regulatory Gaps: Laws like the EU’s GDPR and California’s CCPA let people request data deletion, but deletion means little if models aren’t retrained afterward, which is expensive and often skipped. Marietje Schaake of Stanford’s Cyber Policy Center highlights the lack of a U.S. federal data privacy law, which leaves people with patchy protections. This echoes the LAION-5B issues, where existing privacy rules struggle to define harm, leaving organizations exposed to fines, lawsuits, or shutdowns.

- Ethical and Societal Ramifications: At this scale, fully cleaning the dataset is impossible, which feeds bias and misinformation and erodes trust in AI. Discussions on X suggest it could worsen problems like data drift or intentional data poisoning, as in the 2023 LAION controversy. The broader effects fall hardest on vulnerable groups, fueling calls for data-provenance tracking and consent tools to restore accountability.

Data Privacy Compliance Strategies: Safeguarding Against AI Data Pitfalls

For businesses adopting AI, this story is a roadmap for getting ahead of compliance risks you may not be thinking about. Captain Compliance can help you get compliant and automate your privacy requirements. Read our tips below, and if you’d like more help, you can always book a demo with one of our team members:

- Robust Data Governance: Set up rigorous auditing of training data, combining hands-on reviews with PII detection that goes beyond automatic face blurring. Tools for verifying data sources and provenance, as covered in recent AI ethics reports, can help confirm consent and cut down on unwanted collection.

- Legal Frameworks and User Rights: Comply with GDPR and CCPA by making data opt-outs simple and committing to retrain models after data is removed. In the U.S., push for national protections while running regular privacy audits to avoid “data laundering” through datasets like CommonPool or LAION.

- Ethical Sourcing and Transparency: Move to synthetic or vetted, approved datasets, and be upfront about where your training data comes from. As commenters on X point out, giving people control over their online traces, through privacy built into AI systems, can help avoid legal and emotional fallout.
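To make “PII spotting beyond auto-blurring” a little more concrete, here is a minimal, hypothetical sketch of a text-side check on image captions. It assumes captions are available as (record_id, caption) pairs; the function names (`scan_caption`, `flag_records`) and the regex patterns are illustrative assumptions, not part of DataComp, Captain Compliance, or any specific tool. It flags email addresses, US Social Security-style numbers, and digit runs that pass the Luhn checksum used by payment cards, so flagged records can be routed to manual review.

```python
import re
from dataclasses import dataclass

# Hypothetical, illustrative patterns only. Real PII detection needs far broader
# coverage (names, addresses, non-US ID formats) plus human review.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    # Double every second digit, counting from the rightmost digit.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


@dataclass
class PiiHit:
    kind: str   # "email", "ssn", or "card"
    text: str   # the matched substring


def scan_caption(caption: str) -> list[PiiHit]:
    """Return likely PII found in a single caption string."""
    hits = [PiiHit("email", m.group()) for m in EMAIL_RE.finditer(caption)]
    hits += [PiiHit("ssn", m.group()) for m in SSN_RE.finditer(caption)]
    for m in CARD_RE.finditer(caption):
        # Keep only digit runs that pass Luhn, to reduce false positives
        # from order numbers and other long IDs.
        if luhn_valid(m.group()):
            hits.append(PiiHit("card", m.group()))
    return hits


def flag_records(records):
    """Yield (record_id, hits) for each record whose caption contains likely PII."""
    for rec_id, caption in records:
        hits = scan_caption(caption)
        if hits:
            yield rec_id, hits


if __name__ == "__main__":
    sample = [
        ("img-001", "a cat sleeping on a sofa"),
        ("img-002", "contact jane.doe@example.com, card 4111 1111 1111 1111"),
    ]
    for rec_id, hits in flag_records(sample):
        print(rec_id, [h.kind for h in hits])
```

Checks like these only catch well-formatted text in captions. They do nothing for PII inside the images themselves, such as photographed passports or scanned résumés, which would need OCR or image classifiers plus human review.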

This DataComp CommonPool revelation is a stark reminder: rushing into AI innovation without compliance can backfire. With social media buzzing, from privacy pros sharing alerts to warnings about AI agent risks, the push for change is getting stronger. Stay alert, or you might end up in the next big story about an unexpected data leak.