This article explains fundamental rights impact assessments (FRIAs) and walks through a helpful example. A FRIA is a key component in ensuring that your business’s use of data aligns with EU AI Act requirements.

We also cover why FRIAs matter and how they fit into the broader scope of data protection and artificial intelligence systems.

Whether you’re a seasoned professional or new to the realm of the EU AI Act, this guide will provide valuable insights into conducting effective impact assessments, helping you safeguard not only data but also the fundamental rights of your consumers.

Let’s dive right in.

Key Takeaways

- Fundamental Rights Impact Assessments (FRIAs) play a vital role in making sure AI systems respect people’s rights and meet ethical rules. They help businesses follow the EU’s data rules, too.

- The EU AI Act has strict guidelines for high-risk AI to protect rights. Businesses must do full checks to show they’re meeting core values and safety needs.

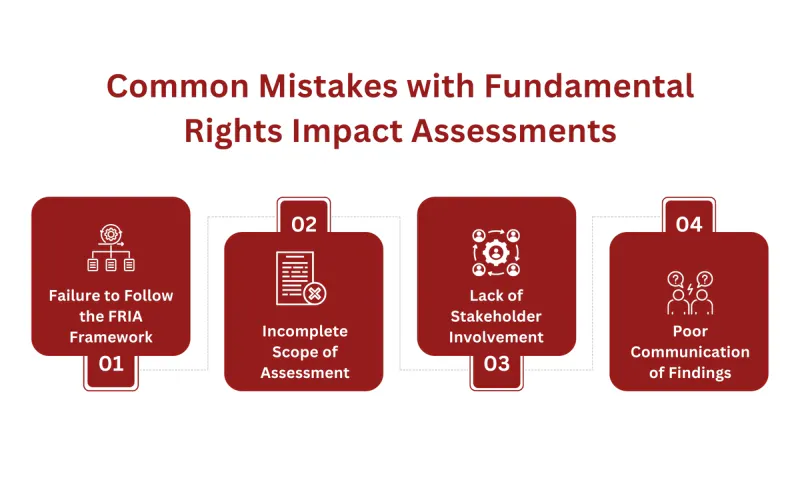

- FRIA mistakes happen when businesses don’t follow the process properly, leave out stakeholders, or communicate findings poorly. This can lead to rights violations and legal non-compliance.

EU AI Act Explained

The EU passed a big new AI law called the AI Act. It applies to any entity that introduces AI systems into the EU market, regardless of where that entity is based. A provisional political agreement on the law was reached on December 8th, 2023, after a lot of back-and-forth.

The EU AI Act’s goal is to protect people’s basic rights as new AI tech evolves. The Act is future-oriented, with built-in mechanisms for updates, acknowledging the rapid evolution of AI technologies.

Under the EU’s new AI Act, high-risk AI systems are subjected to tight controls to protect people’s rights and safety, similar to the GDPR in that respect. These risky systems have to follow strict new rules so they don’t violate fundamental rights.

Under the Act, certain businesses deploying high-risk AI will need to conduct mandatory Fundamental Rights Impact Assessments (FRIAs) to demonstrate their systems uphold human rights and core principles. It’s a key part of the law aimed at making AI safe and ethical as it gets integrated into more parts of life.

Here are some major provisions!

High-Risk AI Systems

The definition of what constitutes a high-risk AI system was a subject of intense debate. The Act categorizes these systems based on their potential impact, requiring developers and users to adhere to strict compliance measures. This includes ensuring transparency, accountability, and respect for user rights.

Prohibited Practices

The Act also outlines specific AI practices that are deemed unacceptable and are therefore banned. This includes AI systems that manipulate human behavior, utilize subliminal techniques, or exploit vulnerabilities of specific groups of individuals, ensuring the protection of individual autonomy and preventing harm.

Remote Biometric Identification

A significant aspect of the Act is the regulation of remote biometric identification systems in publicly accessible spaces, particularly for law enforcement purposes. These systems are subject to prior judicial authorization and are limited to specific purposes, such as criminal investigations, balancing security needs with individual privacy rights.

What Is a Fundamental Rights Impact Assessment?

In the rapidly evolving digital world, businesses increasingly integrate advanced technologies like AI systems, many of which process sensitive personal information. With these advancements, protecting people’s basic rights has become important. That’s why a Fundamental Rights Impact Assessment is so useful.

A Fundamental Rights Impact Assessment is a process aimed at identifying and evaluating how certain projects or technologies, particularly those handling personal data, might impact human rights. This is especially important for AI systems, since businesses can sometimes violate privacy or other rights without realizing it.

The primary objective of these assessments is to mitigate risks to fundamental rights and to ensure principles of data privacy are met. It means taking a close look at how a specific project or AI system could impact rights, like data protection issues, discrimination issues, and more.

If businesses do this first, they can catch potential problems early and make informed choices to fix them. These impact assessments are necessary when dealing with high-risk AI involving personal information of EU citizens.

They are also important when there’s a big power difference between the person giving data and the business using it. This imbalance could lead to a situation where the individual’s consent to data processing is not fully free and informed.

Fundamental Rights Impact Assessment Example

Performing a Fundamental Rights Impact Assessment (FRIA) is an essential step for businesses integrating AI systems, ensuring that these technologies respect fundamental rights.

This process, guided by the FRIA Framework, consists of four detailed and critical phases. Let’s explore each phase in more depth, with an example for each. The examples involve a fictional company and are only meant to give a simple idea of what each phase could look like.

Phase 1: Purpose and Roles

In this first step, businesses have to completely spell out what the AI system they’re building is meant to do. So, they need to lay out the specific goals for it, the potential impacts of this project, and how those align with their overall business plan.

They also must clearly define the roles of the people who will work on the system, from the software engineers writing the code to the project leaders.

Brief Example:

Business name: BrightFutures Inc.

Project: AI-Based Career Prediction Tool

BrightFutures Inc. is working on an AI program that tries to figure out good career options for college kids depending on how they’re doing in school, what they’re into, and what jobs seem to be hot right now.

The potential impact is that it may sway college students toward a certain career pathway.

This part lays out what they’re hoping the AI can do – help students make smart choices about careers and help colleges offer classes and majors that prepare people for getting jobs. This project will involve the help of AI coders, IT professionals, career counsellors, and project leaders.

This AI will align with the business plans because the primary function of this business is to provide universities with tools to help their students.

Phase 2: Risk Identification and Assessment

When developing an AI system, businesses must rigorously assess how their system might affect people’s rights, looking for potential issues throughout its creation and deployment.

This includes assessing the sources and types of data used, the decision-making processes of the AI, and any potential biases or inaccuracies that could lead to rights infringements.

Brief Example:

BrightFutures Inc.’s Career Prediction Tool poses some potential risks that need attention. First, the tool collects a lot of student data to do its job. What if that data gets breached? Plus, the AI could potentially be biased against certain groups.

Some students could get pushed toward careers based on their gender or race rather than their actual interests and talents.
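
To make this phase a little more concrete, here is a minimal sketch of how the risks from the example could be written down and ranked in a simple risk register. Everything in it is an assumption made for illustration: the `Risk` class, the 1 to 5 likelihood and severity scales, and the scores themselves are hypothetical and are not prescribed by the EU AI Act or the FRIA Framework.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    affected_right: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    severity: int    # hypothetical scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # Likelihood multiplied by severity, a common risk-matrix convention.
        return self.likelihood * self.severity


def rank_risks(risks):
    """Return the risks sorted from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Illustrative entries based on the BrightFutures Inc. example; the numbers are made up.
risks = [
    Risk("Student data is exposed in a breach", "privacy and data protection", 2, 5),
    Risk("Recommendations skew by gender or race", "non-discrimination", 3, 4),
]

for risk in rank_risks(risks):
    print(f"{risk.score:>2}  {risk.affected_right}: {risk.description}")
```

A register like this does not replace the assessment itself, but it gives the team one place to record each risk, the right it touches, and which ones to look at first.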

Phase 3: Justifying Risks

In this phase, businesses must justify the potential risks identified in Phase 2. This involves a rigorous evaluation of whether the potential infringements on fundamental rights are justifiable in light of the AI system’s intended benefits.

Businesses must demonstrate that they have critically analyzed the trade-offs and are implementing the system in the least rights-infringing way possible.

Brief Example:

BrightFutures Inc.’s AI system has the potential to dramatically reshape how students plan their careers. The company considers the risks associated with data security and possible biases in decision-making to be justified given the transformative possibilities of this technology.

The company believes that helping a student identify suitable career options effectively can have significant positive impacts on their lives, potentially improving job satisfaction rates and reducing unemployment – high societal benefits compared to its manageable risk profile.

Phase 4: Adoption of Mitigating Measures

The last phase is designing and putting in place measures to reduce the risks identified earlier. This includes both technical measures, like enhancing data security, and organizational strategies, such as policy revisions and staff training.

The goal is to minimize negative impacts on basic rights as much as possible while preserving how well the AI works and the benefits it delivers.

Brief Example:

To reduce the risks they’ve spotted, BrightFutures Inc. is taking a few big steps. They’re bringing in top-level encryption to keep student information safe, and their team will check the AI regularly to try to catch any biases or mistakes before they cause problems.

Additionally, information regarding student gender and race will not be collected, to reduce the risk of bias. The team will still need to watch for other data that acts as a proxy for these attributes.
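
As a rough idea of what a regular bias check could look like, the sketch below compares how often each group receives a given career recommendation. It assumes a separate audit sample in which group labels are available (for example, provided voluntarily for testing only). The function names, the sample data, and the 0.8 review threshold are all hypothetical choices for this illustration, not a legal standard.

```python
from collections import defaultdict


def recommendation_rates(records, career):
    """Share of each group that was recommended the given career."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, recommended in records:
        totals[group] += 1
        if recommended == career:
            hits[group] += 1
    return {group: hits[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody received the recommendation, so there is no gap
    return min(rates.values()) / highest


# Made-up audit sample: (group label, recommended career).
audit_sample = [
    ("group_a", "engineering"), ("group_a", "engineering"),
    ("group_a", "nursing"), ("group_a", "engineering"),
    ("group_b", "engineering"), ("group_b", "nursing"),
    ("group_b", "nursing"), ("group_b", "nursing"),
]

rates = recommendation_rates(audit_sample, "engineering")
ratio = disparity_ratio(rates)

REVIEW_THRESHOLD = 0.8  # hypothetical cut-off for triggering a manual review
if ratio < REVIEW_THRESHOLD:
    print(f"Flag for review: disparity ratio {ratio:.2f}, rates {rates}")
```

A low ratio does not prove discrimination on its own. It is a signal that the team should look more closely at why the recommendations differ between groups.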

Common Mistakes with Fundamental Rights Impact Assessments

Fundamental Rights Impact Assessments (FRIAs) are crucial in ensuring that AI systems comply with ethical standards and respect human rights. However, there are several common pitfalls that organizations often encounter during this process.

Failure to Follow the FRIA Framework

One of the key mistakes is not adhering strictly to the FRIA framework. The framework is designed to guide organizations through a comprehensive evaluation of their AI systems, ensuring that all potential impacts on fundamental rights are considered.

If a business doesn’t follow it closely or ignores parts of it, it could end up with an insufficient assessment that misses big risks.
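
One simple guard against skipped phases is a completeness check on the draft assessment. The sketch below is only an illustration: the phase keys and the dictionary layout are assumptions made for this example, mirroring the four phases described earlier, and are not an official FRIA template.

```python
# Hypothetical keys, one per phase described in this article.
REQUIRED_PHASES = (
    "purpose_and_roles",
    "risk_identification",
    "risk_justification",
    "mitigating_measures",
)


def missing_phases(assessment):
    """Return the phases that are absent or left empty in the assessment."""
    return [
        phase for phase in REQUIRED_PHASES
        if not (assessment.get(phase) or "").strip()
    ]


# A draft where Phase 3 is blank and Phase 4 was never started.
draft = {
    "purpose_and_roles": "Career prediction tool; roles assigned to engineers and counsellors.",
    "risk_identification": "Data breach; biased recommendations.",
    "risk_justification": "",
}

gaps = missing_phases(draft)
if gaps:
    print("Assessment incomplete, missing:", ", ".join(gaps))
```

A check like this only confirms that each phase has been filled in. Whether the content is thorough enough still needs human review.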

Incomplete Scope of Assessment

Often, assessments may not cover all aspects of the AI system’s lifecycle, leading to an incomplete understanding of its impact on fundamental rights. For example, failing to consider the full range of data sources or not evaluating the system’s long-term implications can result in unanticipated rights infringements.

Lack of Stakeholder Involvement

Another common error is the lack of stakeholder involvement. Including a diverse range of voices, especially those who are directly impacted by the AI system, is crucial for a thorough and balanced assessment. Without this involvement, assessments might miss critical perspectives or concerns, leading to biased outcomes.

Poor Communication of Findings

Even if an assessment is well-conducted, poor communication of its findings can undermine its effectiveness. Clear and transparent reporting of both the methodology used and the results obtained is essential for accountability and trust, especially when addressing potential infringements on fundamental rights.

FRIA Article Overview and Brief

The Fundamental Rights Impact Assessment (FRIA) is a critical component of the EU AI Act, designed to ensure that artificial intelligence systems respect fundamental rights throughout their development and deployment. The FRIA aims to identify, assess, and mitigate risks related to fundamental rights, such as privacy, non-discrimination, and freedom of expression. By integrating FRIA into the AI development process, organizations can proactively address potential adverse impacts on individuals and society, promoting ethical and responsible AI use.

Key elements of a Fundamental Rights Impact Assessment include:

- Risk Identification and Assessment: Identifying and evaluating potential risks to fundamental rights associated with the AI system, including biases in data sets, discriminatory outcomes, and privacy infringements.

- Mitigation Strategies: Developing and implementing strategies to mitigate identified risks, such as enhancing data quality, implementing robust data protection measures, and ensuring transparency and accountability in AI decision-making processes.

- Stakeholder Involvement: Engaging relevant stakeholders, including affected individuals, civil society organizations, and regulatory bodies, to gather diverse perspectives and ensure the AI system aligns with societal values and legal requirements.

Closing

Reflecting on FRIAs, it’s vital to explore practical applications, which our compliance services can assist with. At Captain Compliance, we are equipped to guide and support you through this complex process.

Our expertise extends to offering outsourced compliance services to ensure corporate compliance, aiding businesses in integrating AI systems ethically and legally. For businesses in the process of implementing AI systems, our compliance training can ensure alignment with ethical and legal standards.

Get in touch with us for personalized assistance and make your AI journey compliant and responsible.

FAQs

What is the Purpose of a Fundamental Rights Impact Assessment (FRIA)?

A FRIA is designed to evaluate the impact of projects, policies, or AI systems on human rights. It’s crucial to ensure that such initiatives, especially those involving AI, align with fundamental rights like privacy and non-discrimination.

When is a FRIA Necessary for a Business?

A FRIA is necessary when implementing AI systems that could potentially impact fundamental rights, particularly for high-risk AI systems in sectors where sensitive data is involved, like healthcare or finance.

Wondering if your AI system needs a FRIA? Contact us for expert advice at FRIA Assessment Services.

What Are Common Mistakes to Avoid in Conducting a FRIA?

Common mistakes include not fully adhering to the FRIA framework, neglecting comprehensive assessment scope, insufficient stakeholder involvement, and poor communication of findings.

How Can Captain Compliance Assist with FRIAs?

At Captain Compliance, we offer expert guidance and support for conducting FRIAs, ensuring your AI systems are compliant with legal and ethical standards.

Need assistance with FRIAs? Captain Compliance can help you!