AI is developing rapidly, raising questions about its impact on human rights. The EU’s new AI Act responds in part with a requirement called the Fundamental Rights Impact Assessment (FRIA).

Put simply, an FRIA is a process certain organizations must complete before deploying a high-risk AI system, to make sure it won’t violate people’s basic rights.

This article breaks down what FRIA is all about. Understanding FRIA helps businesses use AI ethically and legally by safeguarding individual freedoms. It’s a key part of wisely managing these powerful emerging technologies.

Let’s start exploring FRIA and see how it changes the way businesses use AI.

Key Takeaways

Fundamental Rights Impact Assessments (FRIAs) are required under the EU AI Act for certain deployers of high-risk AI systems, helping ensure those systems respect human rights and comply with legal standards.

The FRIA Framework offers a structured, four-phase approach for businesses to assess and manage the impact of AI on human rights, from defining purposes to implementing risk mitigation measures.

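Failing to meet your obligations under the EU AI Act can be costly: fines reach up to €35 million or 7% of total worldwide annual turnover for prohibited AI practices, and up to €15 million or 3% for most other violations.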

What Is the EU AI Act?

The EU recently passed a new law called the AI Act. It’s the first comprehensive law regulating artificial intelligence across nearly all industries. Negotiators for the European Parliament and the EU member states reached a political agreement on December 8th, 2023. The Act entered into force on August 1st, 2024, and most of its rules apply from August 2nd, 2026.

Here’s why it matters: the law applies not just to businesses based in the EU but to any business that offers AI systems on the EU market or whose AI output is used in the EU. Its main goal is to make sure AI systems are safe and don’t violate people’s rights or harm the environment.

It sorts AI systems into categories based on their level of risk. High-risk systems must follow stricter rules, such as assessing and managing their risks before use and reporting serious incidents to the relevant authorities.

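Some AI practices are considered too dangerous to allow at all and are banned outright.

For instance, the Act bans AI that uses subliminal or manipulative techniques to distort people’s behavior in ways that cause significant harm. Also off-limits is AI that indiscriminately scrapes facial images from the internet or CCTV footage to build facial recognition databases. Police use of real-time facial recognition in public spaces is banned too, except in narrow cases such as searching for missing persons or suspects of serious crimes, and those uses generally need prior approval from a court or an independent authority.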

The law also covers general-purpose AI models, such as large language models, with obligations that scale with a model’s potential impact. Some AI systems also carry transparency duties: people must be told when they are interacting with a chatbot, and deepfakes and other AI-generated content must be labeled.

General-purpose models that pose systemic risk must undergo model evaluations, adversarial testing, and risk assessments. The Act further provides for regulatory sandboxes, supervised testing environments where businesses can develop and try out new AI before bringing a finished product to market.

This helps promote AI progress. Businesses that break the rules face fines of up to €35 million or 7% of total worldwide annual turnover, whichever is higher, for the most serious violations, so there’s strong motivation to comply!

What Is a Fundamental Rights Impact Assessment?

A Fundamental Rights Impact Assessment, or FRIA, is an important way of protecting people’s rights when AI systems are used. It carefully looks at the risks to basic rights like privacy, fairness, and free speech and suggests ways to handle those risks so AI can be used safely.

The FRIA is a lot like a data protection impact assessment (DPIA) under the GDPR, which closely examines high-risk processing of personal data to assess potential harm to people and their data subject rights. You could say an FRIA is a DPIA for fundamental rights rather than just data protection.

Businesses must be careful before deploying any risky AI system. They need to assess it first and have plans to handle potential issues that might come up.

Take facial recognition AI or systems predicting how people will act. Those can get messy really quickly. Businesses using tech like that have to ensure it does not accidentally discriminate or violate personal rights somehow.

An FRIA helps catch problems early. It also lets people understand better how the AI makes its choices. Basically, if your AI could negatively affect someone’s life, that could be a huge liability for you.

Doing an FRIA means taking a hard look at whether these systems might violate rights and figuring out ways to prevent biased outcomes.

When your AI system makes impactful decisions, such as rejecting a loan or job application, you need to understand how it works so you can explain the basis of the decision to the person affected and uphold their data subject rights.

When Are FRIAs Needed?

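Under the EU AI Act, Fundamental Rights Impact Assessments (FRIAs) are required for certain deployers of high-risk AI systems, meaning the organizations that put these systems to use. These assessments help businesses using AI make sure they respect people’s rights and follow the law.

High-risk AI systems are those the Act lists as posing serious risks to people’s health, safety, or fundamental rights, such as AI used for biometric identification, hiring, access to essential services, or law enforcement. The FRIA duty falls on public bodies and private entities providing public services that deploy these systems (except in critical infrastructure), and on any business using AI to assess creditworthiness or to set life and health insurance prices. For example, a bank using AI for credit scoring, or a public authority using facial recognition, needs to complete an FRIA.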
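Article 27(1) of the Act narrows who must run an FRIA: deployers of high-risk systems in the Annex III use cases (other than critical infrastructure) that are public bodies or private entities providing public services, plus any deployer using AI for credit scoring or for life and health insurance pricing. A minimal sketch of that rule as a decision function follows. The `Deployment` class, its fields, and the point labels are hypothetical, and the sketch ignores details such as the Article 6(3) exemptions, so treat it as an illustration rather than a legal test.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels for the Annex III points this rule singles out.
CRITICAL_INFRASTRUCTURE = "2"            # excluded from the FRIA duty
CREDIT_SCORING = "5(b)"                  # creditworthiness and credit scores
LIFE_HEALTH_INSURANCE_PRICING = "5(c)"   # life and health insurance risk and pricing


@dataclass
class Deployment:
    annex_iii_point: Optional[str]  # Annex III point of the use case, None if not listed
    is_public_body: bool            # body governed by public law
    provides_public_service: bool   # private entity providing public services


def fria_required(d: Deployment) -> bool:
    """Simplified reading of Article 27(1); ignores Article 6(3) exemptions."""
    if d.annex_iii_point is None:
        return False  # not a high-risk use case listed in Annex III
    if d.annex_iii_point == CRITICAL_INFRASTRUCTURE:
        return False
    if d.is_public_body or d.provides_public_service:
        return True
    # Purely private deployers are in scope only for these two use cases.
    return d.annex_iii_point in (CREDIT_SCORING, LIFE_HEALTH_INSURANCE_PRICING)


bank = Deployment("5(b)", is_public_body=False, provides_public_service=False)
retailer_hiring = Deployment("4(a)", is_public_body=False, provides_public_service=False)
city_hiring = Deployment("4(a)", is_public_body=True, provides_public_service=False)
print(fria_required(bank), fria_required(retailer_hiring), fria_required(city_hiring))
# True False True
```

The same hiring tool can trigger an FRIA for a city agency but not for a private retailer, which is why it pays to check who the deployer is, not just what the system does.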

It’s important to note that an FRIA must be done before you start using a high-risk AI system. It’s like a pre-check to identify and manage any risks the AI might bring. This step is vital to ensure that the AI system won’t harm people’s rights or safety.

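An FRIA covers the first use of a high-risk AI system, but the Act requires you to update it whenever any of the assessed elements change or are no longer up to date. Regular reviews, whether run in-house or with help from compliance services, keep your AI systems safe and fair over time.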

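FRIAs enhance AI transparency and accountability. The assessment must describe the processes in which the AI will be used, how long and how often it will run, who is likely to be affected, the specific risks of harm to those people, the human oversight measures in place, and what you will do if the risks materialize, including internal governance and complaint mechanisms. Once it’s complete, you must notify the relevant market surveillance authority of the results. This openness is good for building trust with consumers.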
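Article 27(1) spells out six elements the assessment must contain, labeled (a) to (f). The sketch below is one hypothetical way to hold them in a single record and list whatever is still blank before the results are sent to the authority. The class and field names are our own, and the official questionnaire template from the AI Office may be organized differently.

```python
from dataclasses import dataclass, field, fields


@dataclass
class FriaRecord:
    """Hypothetical record mirroring the elements listed in Article 27(1)(a) to (f)."""
    processes: str = ""                # (a) processes in which the system is used
    period_and_frequency: str = ""     # (b) how long and how often it is used
    affected_groups: list[str] = field(default_factory=list)  # (c) people likely affected
    risks_of_harm: list[str] = field(default_factory=list)    # (d) specific risks to them
    human_oversight: str = ""          # (e) human oversight measures
    response_measures: str = ""        # (f) steps if risks materialize, incl. complaints

    def missing_elements(self) -> list[str]:
        """Names of elements that are still empty, in the order they are defined."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


record = FriaRecord(
    processes="Pre-screening of consumer loan applications",
    affected_groups=["loan applicants"],
)
print(record.missing_elements())
```

A completeness check like this only confirms that each element has been addressed; whether the content is adequate is still a judgment for the people running the assessment.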

The EU AI Act sets high standards for AI, and following these rules is crucial for businesses to avoid legal issues and hefty fines.

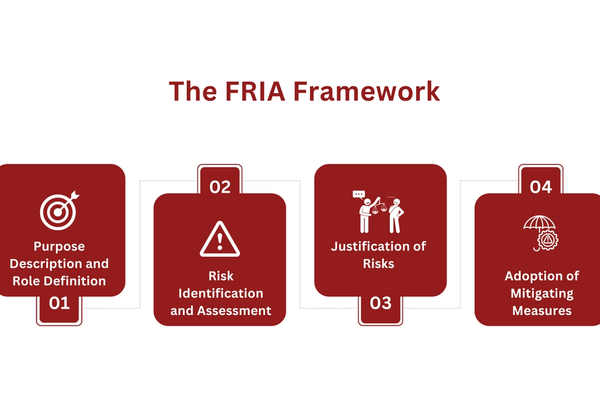

The FRIA Framework

The FRIA Framework is meant to help businesses make sure their AI systems don’t violate basic human rights. It gives businesses a step-by-step way to look at their AI and figure out whether it could infringe rights such as privacy, non-discrimination, or freedom of expression.

FRIA has businesses go through their AI system piece by piece to see what impact it could have. This lets them find possible issues and come up with ways to handle them. Doing this upfront helps stop AI from accidentally harming people down the road. Following the FRIA process is essential if we want AI to make life better and not worse.

It keeps the focus on human rights through the whole AI design and use lifecycle. No one wants their privacy violated or to be treated unfairly by AI. FRIA aims to prevent those types of scenarios.

Phase 1 – Purpose Description and Role Definition

The first phase of the FRIA Framework is about the business being clear on what the AI system is trying to do. It must spell out the issues the system is supposed to fix or the goals it’s meant to hit, whether that’s supporting a public service project or reducing traffic.

This phase also involves a critical assessment of potential impacts on fundamental rights. Moreover, it requires defining the roles and responsibilities of all parties involved in the system’s lifecycle – from design and development to deployment and evaluation.

This includes internal stakeholders (project leaders, data protection officers, and technical staff) and external parties. The business must ensure a balanced and competent team, possibly including stakeholders most likely to be affected by the AI system.

Phase 2 – Risk Identification and Assessment

Phase 2 focuses on spotting and weighing the dangers to basic rights across the phases of making the AI system. This means closely looking at the technical and organizational processes of the system to make sure they match up with the business’s goals and respect people’s rights.

The business really has to think hard here about the system’s algorithms, how it fits together, and how its outputs sway decision-making. Businesses must scrutinize various factors like data selection, potential biases, and acceptable error rates (false positives/negatives), understanding that risk is a function of impact and likelihood of occurrence.
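The idea that risk is a function of impact and likelihood is often put into practice with a simple risk matrix. A minimal sketch, assuming a four-level scale for each factor and multiplying them into a score; the scale, the band thresholds, and the example risks are hypothetical, not something the Act or the FRIA Framework prescribes.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical four-level scale used for both impact and likelihood."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    VERY_HIGH = 4


def risk_score(impact: Level, likelihood: Level) -> int:
    """Combine impact and likelihood into a score from 1 to 16."""
    return int(impact) * int(likelihood)


def risk_band(score: int) -> str:
    """Map a score to a band; the thresholds are illustrative."""
    if score >= 9:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


# Hypothetical risks for an identity-verification system: (impact, likelihood).
risks = {
    "biased training data": (Level.HIGH, Level.HIGH),
    "false matches in identity checks": (Level.VERY_HIGH, Level.MEDIUM),
    "confusing explanation screen": (Level.LOW, Level.MEDIUM),
}

# Print the risk register, highest score first.
for name, (impact, likelihood) in sorted(
    risks.items(), key=lambda item: risk_score(*item[1]), reverse=True
):
    score = risk_score(impact, likelihood)
    print(f"{name}: {score} ({risk_band(score)})")
```

A plain product treats impact and likelihood symmetrically; if severe but rare harms to fundamental rights should rank higher, an organization might weight impact more heavily.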

Phase 3 – Justification of Risks

The third phase talks about evaluating if using AI is the best option here. Businesses need to think hard about whether the AI they want to use could hurt people’s rights or not. Even if the AI seems useful, that doesn’t make it okay for the AI to treat some groups unfairly or invade privacy.

Businesses should look closely at other options that might work well but carry smaller risks, and they should also make sure they truly need all the data or capabilities that the AI would use. It’s not enough to just say that AI is useful. They have to weigh if that usefulness is more important than protecting people.

It’s tricky to balance an AI’s benefits against potential downsides. But businesses have to try, especially when fundamental rights are at stake. If the risks seem too high compared to the advantages, then they should pick a different option that’s more respectful of people.

Assessing suitability and necessity isn’t always straightforward, but evidence and ongoing reviews can help guide responsible choices. The key is a holistic outlook focused on avoiding harm.

Phase 4 – Adoption of Mitigating Measures

The last part of making an AI system that doesn’t cause problems is figuring out how to handle any risks you find. Even if the risks seem small, you still must do everything in your power so people’s rights don’t get violated.

This phase demands a nuanced approach, tailoring mitigation measures to specific contexts and the severity of potential impacts. After implementing these measures, businesses should revisit the earlier phases to reassess and realign their strategies, ensuring a continuous loop of improvement and adjustment.

In cases where risks cannot be adequately mitigated, businesses should reconsider the continuation of the project.
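The Phase 4 loop of mitigating, reassessing, and reconsidering can be sketched in code. This assumes risk is scored as impact times likelihood, each on a 1 to 4 scale, models each measure as lowering impact or likelihood by whole levels, and uses a hypothetical risk tolerance; in practice the effect of a mitigation has to be judged case by case.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    impact: int      # 1 (low) to 4 (very high)
    likelihood: int  # 1 (rare) to 4 (almost certain)

    @property
    def score(self) -> int:
        return self.impact * self.likelihood


@dataclass
class Mitigation:
    name: str
    impact_reduction: int = 0      # levels of impact removed (hypothetical)
    likelihood_reduction: int = 0  # levels of likelihood removed (hypothetical)


def residual(risk: Risk, measures: list[Mitigation]) -> Risk:
    """Risk left after the measures, never dropping below level 1."""
    impact = risk.impact - sum(m.impact_reduction for m in measures)
    likelihood = risk.likelihood - sum(m.likelihood_reduction for m in measures)
    return Risk(risk.name, max(1, impact), max(1, likelihood))


def review(risk: Risk, measures: list[Mitigation], tolerance: int) -> str:
    """Accept the residual risk, or flag that the project should be reconsidered."""
    left = residual(risk, measures)
    if left.score <= tolerance:
        return "accept"
    return "reconsider"


reid = Risk("re-identification from location data", impact=4, likelihood=3)
measures = [Mitigation("aggregate and suppress small cells", likelihood_reduction=2)]
print(review(reid, [], tolerance=4))        # reconsider (12 > 4)
print(review(reid, measures, tolerance=4))  # accept (4 * 1 = 4)
```

In a real review, a "reconsider" result sends the team back to the earlier phases to look for stronger measures or a different design before deciding whether to stop the project.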

Example FRIA

Let’s walk through an example of a business conducting a Fundamental Rights Impact Assessment (FRIA) according to the FRIA Framework. This company built a system that uses AI to analyze data about cars, roads, buses, and other traffic sources to predict where traffic jams might happen.

Phase 1: Purpose Description and Role Definition

In Phase 1, the business clearly defines the purpose of its AI system:

Primary Objective: To reduce traffic congestion in urban areas, thereby decreasing travel time and reducing pollution.

Secondary Objectives: To assist in emergency vehicle routing and to provide data-driven insights for urban planning.

The business identifies roles and responsibilities:

AI Development Team: Focuses on the development and refinement of AI algorithms for traffic analysis and prediction.

Data Analysts: Handle the collection and processing of traffic data from various sources.

Legal and Compliance Officers: Ensure adherence to data protection laws and ethical guidelines.

Stakeholder Engagement Team: Communicates with city officials, emergency services, and the public to gather feedback and concerns.

Phase 2: Risk Identification and Assessment

The business assesses several risks:

Privacy Risks: Potential for misuse of personal data from GPS and mobile devices.

Bias in Data: Risk of the AI system reinforcing existing traffic biases, potentially neglecting certain areas or demographics.

Decision-making Reliability: The reliability of traffic predictions and the potential consequences of incorrect recommendations.
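One way to check the bias and reliability risks above is to compare the system’s error rates across areas of the city: false positives (predicted jams that never happened) and false negatives (missed jams). A minimal sketch, assuming predictions and outcomes are logged per district as yes/no values; the function names, the district labels, the sample records, and the 0.1 gap threshold are all hypothetical.

```python
from collections import defaultdict


def error_rates_by_area(records):
    """records: iterable of (area, predicted_jam, actual_jam) tuples with bool flags."""
    counts = defaultdict(lambda: {"fp": 0, "negatives": 0, "fn": 0, "positives": 0})
    for area, predicted, actual in records:
        c = counts[area]
        if actual:
            c["positives"] += 1
            c["fn"] += int(not predicted)  # a jam the model missed
        else:
            c["negatives"] += 1
            c["fp"] += int(predicted)  # a jam predicted that never happened
    return {
        area: {
            "false_positive_rate": c["fp"] / c["negatives"] if c["negatives"] else None,
            "false_negative_rate": c["fn"] / c["positives"] if c["positives"] else None,
        }
        for area, c in counts.items()
    }


def flag_disparities(rates, metric, max_gap=0.1):
    """Areas whose error rate exceeds the best-served area's by more than max_gap."""
    values = {a: r[metric] for a, r in rates.items() if r[metric] is not None}
    if not values:
        return []
    best = min(values.values())
    return sorted(a for a, v in values.items() if v - best > max_gap)


records = (
    [("north", True, True)] * 4 + [("north", False, False)] * 4
    + [("south", True, True)] * 2 + [("south", False, True)] * 2
    + [("south", False, False)] * 4
)
rates = error_rates_by_area(records)
print(flag_disparities(rates, "false_negative_rate"))  # ['south']
```

Here the south district’s jams are missed half the time while the north’s are always caught, the kind of gap that could leave some neighborhoods underserved by traffic management.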

Phase 3: Justification of Risks

The business evaluates the proportionality of risks:

Privacy Concerns: The business justifies the use of data by implementing strict anonymization protocols and obtaining user consent. It argues that the overall societal benefit of reduced traffic outweighs these concerns.

Bias Mitigation: The business acknowledges the risk of data bias and commits to using diverse data sources to ensure equitable traffic management across all urban areas.

Reliability of AI Decisions: The business emphasizes its commitment to regular algorithm testing and updates to maintain high decision-making accuracy.
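The anonymization commitment could take many forms. One common technique is spatial aggregation with a minimum group size, in the spirit of k-anonymity: drop device identifiers, report only how many distinct devices were seen in each grid cell, and suppress cells with fewer than k devices. A minimal sketch, assuming the pings all fall in one time window; the function name, the grid size (0.01 degrees, on the order of a kilometer), and k = 5 are hypothetical, and aggregation alone does not guarantee the data counts as anonymous under the GDPR.

```python
import math
from collections import defaultdict


def aggregate_pings(pings, cell_size_deg=0.01, k=5):
    """Turn (device_id, lat, lon) pings into per-cell device counts.

    Device IDs are discarded, and cells seen by fewer than k distinct devices
    are suppressed so that small groups cannot be singled out.
    """
    devices_per_cell = defaultdict(set)
    for device_id, lat, lon in pings:
        cell = (math.floor(lat / cell_size_deg), math.floor(lon / cell_size_deg))
        devices_per_cell[cell].add(device_id)
    return {cell: len(ids) for cell, ids in devices_per_cell.items() if len(ids) >= k}


pings = [(f"car-{i}", 52.5205 + i * 0.0001, 13.4053) for i in range(5)]
pings.append(("car-0", 52.5206, 13.4055))   # repeat ping, counted once
pings.append(("car-99", 48.1355, 11.5825))  # lone device elsewhere, suppressed
print(aggregate_pings(pings))
```

Counting distinct devices rather than raw pings matters: a single car pinging many times in a quiet street would otherwise pass the threshold and could still be singled out.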

Phase 4: Risk Mitigation Measures

To mitigate identified risks, the business adopts several measures:

Data Privacy and Security: Implements advanced data anonymization techniques and secure data storage practices.

Algorithmic Auditing: Regularly audits AI algorithms to detect and address any biases or inaccuracies.

Emergency Protocols: Establishes protocols for emergency situations where AI recommendations may be overridden by human controllers.

Continuous Review: The business commits to a cyclic review process, revisiting the FRIA phases periodically to align with new data, technological advancements, and evolving societal expectations.
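The emergency protocol, where human controllers can override the AI, might look like this in a routing service. A minimal sketch: the class, function, and field names are hypothetical, and logging each override is an added assumption that also gives the algorithmic audits something to review.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RoutingDecision:
    route: str
    decided_by: str  # "ai" or "human"


def decide_route(ai_route: str, controller_route: Optional[str],
                 override_log: list[str]) -> RoutingDecision:
    """Use the controller's route whenever one is given; otherwise follow the AI."""
    if controller_route is None:
        return RoutingDecision(ai_route, "ai")
    if controller_route != ai_route:
        # Record every disagreement so later audits can review it.
        override_log.append(f"AI suggested {ai_route!r}, controller chose {controller_route!r}")
    return RoutingDecision(controller_route, "human")


log: list[str] = []
print(decide_route("Main St", None, log))
print(decide_route("Main St", "Ring Rd", log))
print(log)
```

The human decision always wins when present, so the AI acts only as a recommendation during emergencies, and the log shows how often and where controllers disagreed with it.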

Closing

So we just covered Fundamental Rights Impact Assessments (FRIAs) and the EU AI Act. It’s a significant consideration for businesses to understand and integrate into their operations. You’re probably wondering what your next steps should be and how to make sure your business stays compliant.

This is where we at Captain Compliance can help. We’re here to guide you through the process from start to finish. We aim to simplify understanding FRIAs and completing them properly so that your business not only follows the law but uses AI in an ethical, fair way.

Remember – doing these FRIAs isn’t just about checking boxes; it’s about building trust and using AI responsibly. If you need a hand meeting all the requirements, touch base with us at Captain Compliance.

Get in touch with us to shape a future where technology and human rights exist in harmony!

FAQs

What Exactly is a Fundamental Rights Impact Assessment (FRIA)?

An FRIA is a process to check if AI systems respect people’s basic rights, like privacy and fairness. It’s especially important for AI systems that could have a big impact on people’s lives.

Need more details on FRIAs? Get in touch with us for a deeper understanding.

When Is an FRIA Required Under the EU AI Act?

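An FRIA is required before certain deployers put a high-risk AI system into use: mainly public bodies and private entities providing public services, plus businesses using AI for credit scoring or life and health insurance pricing. Typical examples include AI used in law enforcement or to decide access to essential services. It’s a way to make sure these systems are safe and fair from day one.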

Curious about whether your AI system needs an FRIA? Our experts are here to help you!

How Does an FRIA Promote AI Fairness and Transparency?

A Fundamental Rights Impact Assessment helps ensure AI systems, like those for facial recognition, are fair and don’t invade privacy. It helps explain AI decisions, making them more transparent and trustworthy.

For more on AI fairness, check out our insights on GDPR compliance here.

What Are the Penalties for Not Complying with the EU AI Act?

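Not following the EU AI Act can lead to big fines: up to €35 million or 7% of total worldwide annual turnover, whichever is higher, for prohibited AI practices, and up to €15 million or 3% for most other violations. It’s crucial for businesses to comply to avoid these penalties.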