In a move that’s set to sharpen the EU’s oversight of artificial intelligence, the European Commission has unveiled a dedicated whistleblower platform under the AI Act—launching just last month. This isn’t a generic tip line; it’s a fortified channel for insiders, employees, or watchdogs to flag suspected violations that could jeopardize health, fundamental rights, or public trust in AI systems. As the AI Act’s enforcement gears up, this tool positions the EU AI Office as the go-to hub for early warnings, blending innovation encouragement with ironclad accountability.

For AI developers, deployers, and compliance teams across the bloc (and beyond, thanks to extraterritorial reach), this is more than a reporting portal—it’s a compliance accelerant. Spot a high-risk AI model skirting safety checks? Blow the whistle anonymously, and help steer the sector toward trustworthy tech. At Captain Compliance, we’re already fielding queries on how this fits into your AI governance playbook. Here’s the full scoop: From mechanics to must-dos, and why your org should prep now.

The Launch: Timing It with the AI Act’s Rollout

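The tool arrives amid the AI Act’s phased activation: prohibitions on unacceptable-risk practices have applied since February 2025 and obligations for general-purpose AI models since August 2025, while most remaining obligations, including those for many high-risk systems, are scheduled to apply from August 2026, with high-risk systems embedded in regulated products following in August 2027. The Commission, via its EU AI Office, is wasting no time building an ecosystem for vigilance. This platform, hosted on a secure third-party system (IntegrityLine), opens a channel for proactive reporting so that violations don’t fester into scandals.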

Purpose at its core: Empower individuals to “contribute to the early detection of potential violations,” as the Commission puts it. Whether it’s a biased facial recognition tool in hiring or an untested deepfake generator threatening elections, the tool targets risks to “health, safety, fundamental rights, democracy, and the rule of law.” It’s a nod to the Act’s risk-based tiers: prohibited practices (e.g., social scoring), high-risk systems (e.g., medical diagnostics), and general-purpose AI models such as those behind ChatGPT.

Broader context: This aligns with the EU’s Whistleblower Directive (Directive (EU) 2019/1937), which mandates internal reporting channels in organisations with 50 or more workers. But here, the channel is pan-EU and centralized for efficiency: think a one-stop shop versus fragmented national lines.

How the Tool Works: Secure, Simple, and Multilingual

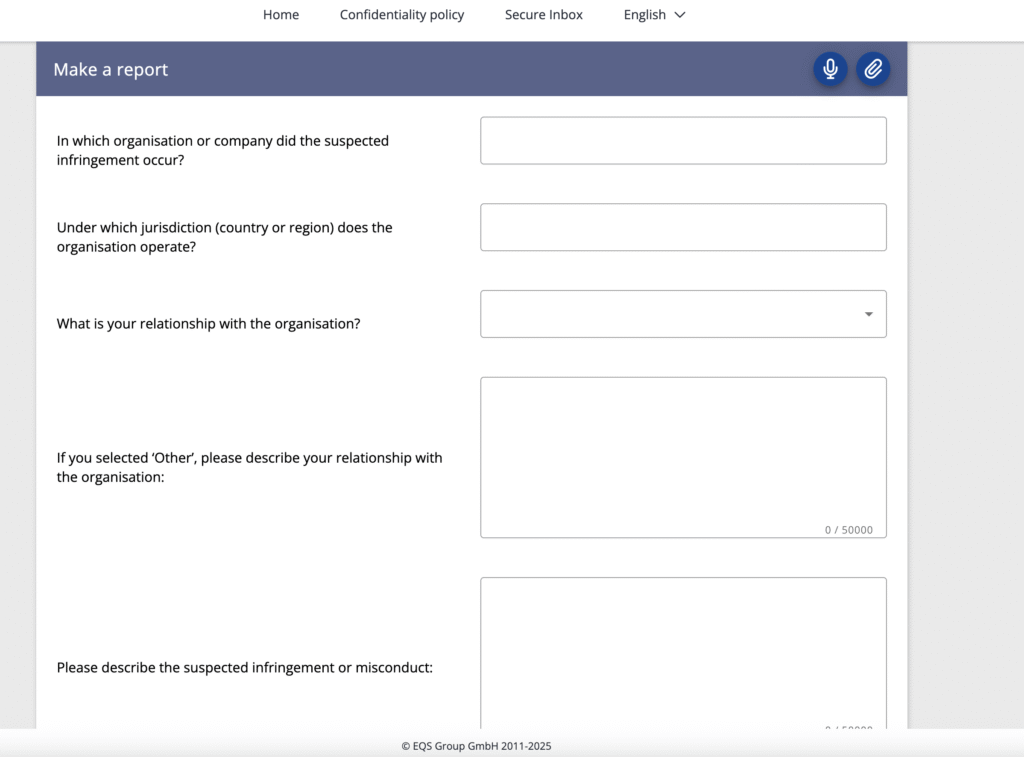

Access it at ai-act-whistleblower.integrityline.app—no app download, just a web form. Users can submit in any of the 24 EU official languages, in formats from text to attachments (docs, screenshots). The process:

- Submit Anonymously: Detail the breach—system description, risk level, evidence—without revealing your identity upfront.

- Secure Transmission: Certified encryption locks it down, routing straight to the AI Office for triage.

- Follow-Up Loop: Get a case ID for updates; the Office can pose clarifying questions via the portal, all while preserving confidentiality.

- Escalation Path: Valid reports can trigger investigations, enforcement actions, or fines (up to EUR 35 million or 7% of global annual turnover, whichever is higher, for the most serious violations).
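To make the fine exposure concrete, here is a minimal arithmetic sketch in Python. It assumes the ceilings in Article 99 of the AI Act as adopted (a fixed amount or a share of worldwide annual turnover, whichever is higher, with the lower of the two applying to SMEs). The tier labels and the function name `max_fine_eur` are hypothetical names chosen for illustration, and actual penalties are set case by case by the authorities.

```python
# Fine ceilings as (fixed cap in EUR, percent of worldwide annual turnover).
# Figures follow Article 99 of the AI Act as adopted; the tier labels are
# hypothetical names for this sketch, not official terminology.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, global_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the upper bound of the fine for a tier, in whole euros."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_cap = global_turnover_eur * percent // 100
    # Assumption: SMEs face the lower of the two ceilings, others the higher.
    if is_sme:
        return min(fixed_cap, turnover_cap)
    return max(fixed_cap, turnover_cap)


# A group with EUR 2 billion turnover: 7% exceeds the EUR 35 million floor.
print(max_fine_eur("prohibited_practice", 2_000_000_000))  # 140000000
```

For a company with EUR 100 million in turnover, the same call returns the EUR 35 million fixed cap, since 7% of turnover is only EUR 7 million.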

FAQs at the site cover “What can be reported?” (e.g., non-compliance with transparency duties) and “Who can report?” (anyone, inside or out). There is no minimum threshold: even a suspicion counts if you can back it with some evidence.

Tying It to the AI Act: From Reporting to Real Accountability

The AI Act isn’t a blunt ban; it’s a graduated framework promoting “safe and transparent AI development.” Whistleblowers are the canaries in the coal mine, feeding intel to the AI Office’s enforcement arsenal. Examples: an unassessed credit-scoring AI that discriminates by gender (a high-risk use case), or a general-purpose model like Grok spreading misinformation without safeguards.

Integration perks: Reports inform the Office’s annual risk assessments and code-of-practice development for GPAI. It’s symbiotic—whistleblowers get closure (via updates), the Commission gets actionable data to refine regs. Post-Brexit, UK firms take note: The Act’s global pull means extraterritorial bites for EU-impacting AI.

Built-In Safeguards: Anonymity and Protection Front and Center

Trust is the tool’s bedrock. The Commission promises the “highest level of confidentiality and data protection,” with certified encryption designed to keep reports from being traced or leaked. Anonymity is in your hands: you control what (if any) identifying details to share. Follow-ups happen through the portal, with no emails or calls that could expose you.
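For teams that want to mirror this follow-up model in an internal channel, the sketch below shows one common pattern: the reporter receives a random case ID once, and the system stores only a hash of it, so reviewers can post questions to a thread without ever learning the ID. This is an illustrative assumption about how such a mailbox could be built, not a description of how the Commission’s platform or IntegrityLine works; the class `AnonymousInbox` and its methods are hypothetical, and a real deployment would add encryption at rest, access controls, and persistent storage.

```python
import hashlib
import secrets


class AnonymousInbox:
    """In-memory stand-in for an internal reporting portal (illustrative only)."""

    def __init__(self) -> None:
        # Maps SHA-256 digest of the case ID -> list of (sender, message).
        self._threads: dict[str, list[tuple[str, str]]] = {}

    @staticmethod
    def _key(case_id: str) -> str:
        return hashlib.sha256(case_id.encode("utf-8")).hexdigest()

    def submit(self, report_text: str) -> str:
        """Store a report and return a case ID that is shown to the reporter once."""
        case_id = secrets.token_urlsafe(16)
        self._threads[self._key(case_id)] = [("reporter", report_text)]
        return case_id

    def pending(self) -> list[str]:
        """Thread keys visible to reviewers; these are digests, not case IDs."""
        return list(self._threads)

    def ask(self, thread_key: str, question: str) -> None:
        """Reviewer posts a clarifying question to a thread."""
        self._threads[thread_key].append(("reviewer", question))

    def read(self, case_id: str) -> list[tuple[str, str]]:
        """Reporter reads the thread using only the case ID."""
        return list(self._threads[self._key(case_id)])


inbox = AnonymousInbox()
case_id = inbox.submit("Model shipped without the required risk assessment.")
inbox.ask(inbox.pending()[0], "Which release does this concern?")
for sender, message in inbox.read(case_id):
    print(f"{sender}: {message}")
```

The design choice here is that no name, email address, or plain case ID is ever stored, so the only link between reporter and thread is the token the reporter holds.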

Legal shields: Aligns with the Whistleblower Directive, offering retaliation bans and support in member states. The Commission pledges non-retaliation, even if a report fizzles. For EU staff or contractors, it’s layered with internal channels for double protection.

Implications for Businesses: A Catalyst for Internal Vigilance

This tool flips the script, from fearing leaks to fearing silence. AI firms: Expect an uptick in internal tips, as employees know external backups exist. Positive spin? It fosters a “speak-up” culture, catching issues before they turn into fines. But risks loom: Frivolous reports could clog lines, or bad-faith ones spark witch hunts.

Market ripple: Investors increasingly treat “whistleblower-ready” orgs as a plus, with robust hotlines becoming ESG checkboxes. For multinationals, harmonize with US SEC whistleblower rules and the UK’s Public Interest Disclosure Act (PIDA) for seamless compliance.

Your AI Act Action Plan: 6 Steps to Whistleblower-Proof Your Ops

Don’t wait for a report to drop—proactively embed this into your framework:

- Map High-Risk AI: Inventory systems against Act tiers; flag gaps in conformity assessments.

- Launch Internal Channels: Mirror the tool with anonymous portals; train on “reportable” red flags.

- Ethics Training Overhaul: Quarterly sessions on AI risks—make reporting routine, not radical.

- Monitor the Tool: Set alerts for Office guidance; simulate reports in tabletop drills.

- Document Defenses: Keep audit trails for compliance claims—transparency thwarts bogus tips.

- Global Sync: Align with NIS2 or DORA where cybersecurity and AI obligations overlap; consult counsel on adjustments for non-EU jurisdictions.
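The first step above, mapping systems against the Act’s tiers and flagging gaps, can start as something as small as the Python sketch below. The `RiskTier` values follow the tiers named earlier plus a catch-all `MINIMAL` bucket added as an assumption; the `AISystem` fields, the `find_gaps` function, and the sample system names are hypothetical, and a real inventory would track far more (owner, intended purpose, documentation status).

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    GENERAL_PURPOSE = "general-purpose"
    MINIMAL = "minimal"  # assumption: catch-all for everything else


@dataclass
class AISystem:
    name: str
    tier: RiskTier
    conformity_assessed: bool = False


def find_gaps(inventory: list[AISystem]) -> list[str]:
    """Return one human-readable finding per system that needs attention."""
    gaps = []
    for system in inventory:
        if system.tier is RiskTier.PROHIBITED:
            gaps.append(f"{system.name}: prohibited practice, escalate immediately")
        elif system.tier is RiskTier.HIGH_RISK and not system.conformity_assessed:
            gaps.append(f"{system.name}: high-risk without a conformity assessment")
    return gaps


inventory = [
    AISystem("cv-screener", RiskTier.HIGH_RISK),
    AISystem("radiology-triage", RiskTier.HIGH_RISK, conformity_assessed=True),
    AISystem("spam-filter", RiskTier.MINIMAL),
]
for finding in find_gaps(inventory):
    print(finding)  # cv-screener: high-risk without a conformity assessment
```

The output of a check like this doubles as the audit trail mentioned in the list: each finding is a dated record of what was known and when.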

Tools like our AI Compliance Dashboard automate mappings, cutting prep time in half.

Forward Momentum: Innovation with Integrity

The Commission’s launch underscores the AI Act’s ethos: Foster cutting-edge tech while fortifying rights. As Wiewiórowski might echo from TechSonar, this tool shepherds AI toward accountability. For forward-leaning firms, it’s an opportunity—lead with ethics, and let whistleblowers be your secret weapon for safer systems.