If your startup handles personal data, especially sensitive media or identifiers, you must bake privacy into the architecture from day one. Otherwise, a single flaw can drag you offline, invite regulatory enforcement, and turn users into plaintiffs. Below we’ll explore what happened to Neon, how it worked, where it went wrong, and why Captain Compliance helps prevent these privacy disasters.

Inside Neon: How the App Worked and Why It Exploded in Popularity

Before its sudden shutdown, Neon positioned itself as the next viral social-tech phenomenon. The concept was deceptively simple: record your phone calls, and get paid for allowing those recordings to be used in AI model training and analytics. Neon claimed that call data was “anonymized” before being sold to language-model developers who wanted to improve speech recognition and conversational AI.

Neon’s slick marketing promised transparency and control: users could “opt in” to share specific calls and earn micro-payments per minute. The company’s founder, Alex Kiam, promoted the product as a way for ordinary users to “monetize their voice data.” Within weeks of launch, TikTok influencers showcased their Neon dashboards, boasting about earning cash from conversations with friends, partners, or even customer-service reps.

The app quickly climbed the App Store charts, generating more than 75,000 downloads in a single day. It integrated directly with phone systems to automatically record incoming and outgoing calls. Each call was stored, transcribed via automated speech recognition, and uploaded to Neon’s servers for matching with AI research clients. Users could review transcripts, view their payout, and toggle sharing permissions.

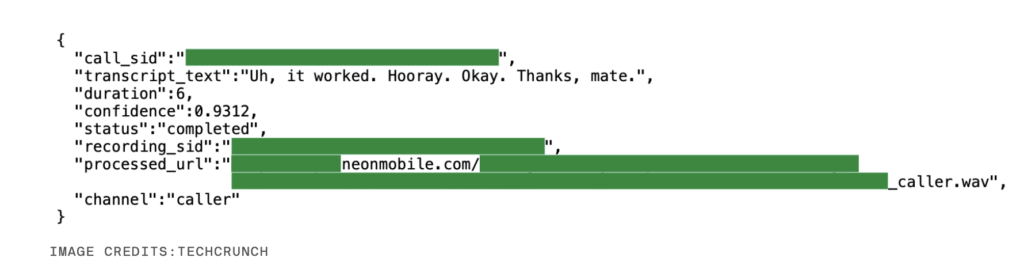

But beneath the glossy interface, Neon lacked the very controls it claimed to respect. The company had no proper API authentication barriers between users’ datasets. Its storage buckets were misconfigured, allowing anyone with an access token to view not only their own calls, but also calls from other accounts. Security researchers found that with minimal manipulation of API requests, they could retrieve phone numbers, full audio files, and text transcripts from unrelated users, all unencrypted.
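The cross-account access described above is the kind of flaw an object-level ownership check is meant to stop. The following is a minimal sketch, not Neon’s actual code: `Recording`, `RECORDINGS`, `get_recording`, and `AccessDenied` are hypothetical names, and the in-memory dictionary stands in for a real database. The point is that a valid token alone is not enough; every lookup also compares the record’s owner with the authenticated caller.

```python
from dataclasses import dataclass


class AccessDenied(Exception):
    """Raised when a caller asks for a recording they do not own."""


@dataclass(frozen=True)
class Recording:
    recording_id: str
    owner_id: str
    transcript: str


# Hypothetical in-memory store standing in for a real database.
RECORDINGS = {
    "rec-1": Recording("rec-1", "user-a", "hello from A"),
    "rec-2": Recording("rec-2", "user-b", "hello from B"),
}


def get_recording(authenticated_user_id: str, recording_id: str) -> Recording:
    """Return a recording only if the authenticated caller owns it."""
    recording = RECORDINGS.get(recording_id)
    # Treat "missing" and "not yours" identically so the response does not
    # reveal which recording IDs exist.
    if recording is None or recording.owner_id != authenticated_user_id:
        raise AccessDenied(recording_id)
    return recording
```

In this sketch, `get_recording("user-a", "rec-1")` returns the caller’s own recording, while `get_recording("user-a", "rec-2")` raises `AccessDenied` even though the caller is authenticated.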

In privacy terms, Neon had violated nearly every principle of Privacy by Design — no data minimization, no adequate consent validation, and no strict purpose limitation. Worse, many of the recordings included both parties’ voices, even when the second participant had never consented to being recorded or having their conversation sold to AI labs.

After TechCrunch contacted the company with its findings, Neon abruptly suspended operations in late September. Its website went offline, and app users received a vague push notification promising “temporary maintenance for enhanced security.” But the damage was already done. Users flooded social media, sharing screenshots of leaked transcripts and demanding answers. Within 48 hours, Neon had gone from viral success to reputational collapse.

The Tea App Breach: A Parallel Lesson

Earlier in 2025, the Tea App, a women-only dating and safety network, suffered a major data breach. Hackers accessed a database containing roughly 72,000 images, including selfies and government ID photos used for verification. Many leaked messages discussed sensitive personal topics, from reproductive health to infidelity, underscoring the emotional toll of privacy failures. Tea was then breached a second time, leaking 1.1 million records and reinforcing that even mission-driven startups aren’t immune if they fail to secure user data from day one.

Together, Neon and Tea demonstrate a pattern: viral growth can’t compensate for weak privacy architecture. Startups that treat data governance as an afterthought often collapse faster than they rose, which is why integrating Captain Compliance’s privacy software helps protect against total loss.

Why Regulators Will (and Should) Act

U.S. Spotlight: CCPA & FTC

- CCPA/CPRA: Unauthorized disclosure of personal information (especially call data) can trigger state AG action and private lawsuits.

- FTC: The agency may treat Neon’s promises of “security” and “anonymity” as deceptive under Section 5 of the FTC Act.

- FTC Reasonable Security: The Commission has repeatedly brought enforcement actions against companies that fail to use basic access controls or encryption.

International Reach: GDPR & ePrivacy

- For EU users, call transcripts and metadata qualify as “personal data.”

- GDPR Article 32 requires appropriate technical and organisational security measures, which Neon lacked.

- ePrivacy rules on communications confidentiality could apply to call audio itself.

The likely outcome: investigations, fines, class-action suits, and a regulatory wake-up call to the broader app ecosystem.

How Captain Compliance Prevents a Neon-Scale Disaster

| Feature | How It Helps | Relevance to Neon Scenario |
|---|---|---|
| Data Classification | Automatically detects and tags sensitive data like call transcripts, recordings, and identifiers. | Would have flagged call data as high-risk and required encryption. |
| API Risk Assessment | Scans APIs for exposed endpoints, weak tokens, and permission flaws. | Would have detected cross-user data access instantly. |
| Vendor Oversight | Audits third-party processors and AI partners for compliance posture. | Ensures downstream recipients uphold identical privacy standards. |
| Consent & Purpose Logging | Maintains granular logs of user permissions and data uses. | Prevents overreach and proves lawful basis for processing. |
| DSAR Automation | Streamlines access and deletion requests, ensuring transparency post-incident. | Helps restore trust and meet statutory deadlines. |

With these controls embedded from the start, startups can innovate responsibly and avoid the existential threat of privacy-related shutdowns.
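To make the idea of data classification concrete, here is a generic illustration of tagging a record’s fields by sensitivity. It is not Captain Compliance’s implementation: the field names, the `SENSITIVE_FIELDS` set, the `classify_record` function, and the deliberately loose phone-number pattern are all assumptions chosen for this sketch, and real detection would need locale-aware rules and far broader coverage.

```python
import re

# Illustrative, deliberately loose phone-number pattern (an assumption for
# this sketch, not a production-grade detector).
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Hypothetical field names that are always treated as high-risk.
SENSITIVE_FIELDS = {"audio_url", "transcript", "phone_number"}


def classify_record(record: dict) -> dict:
    """Tag each field of a record as 'high' or 'low' sensitivity."""
    tags = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS:
            # Known sensitive field: flag regardless of content.
            tags[field] = "high"
        elif isinstance(value, str) and PHONE_RE.search(value):
            # Free-text field that appears to contain a phone number.
            tags[field] = "high"
        else:
            tags[field] = "low"
    return tags
```

A downstream policy could then refuse to store or export any field tagged `high` unless it is encrypted and covered by a recorded consent.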

Compliance Checklist: Privacy by Design for Startups

- Map Data Flows: Understand where data moves, who accesses it, and how long it’s retained.

- Classify Data: Identify sensitive types like voice, transcripts, or biometric IDs.

- Apply Least Privilege: Ensure APIs return only what’s necessary for each session.

- Encrypt Everything: Data in transit and at rest must be protected with modern standards.

- Vet Vendors: Ask partners handling your user data for a trust center and for evidence of a SOC 2 report or ISO 27001 certification.

- Consent Management: Use explicit, revocable consents tied to time-limited purposes. Trust businesses that use a Captain Compliance Consent Banner.

- Automate DSARs: Build deletion and access workflows from day one.

- Run Privacy Drills: Test your breach-response plan quarterly.

- Review Before Release: Every new feature passes a privacy gate.
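The consent item in the checklist above (explicit, revocable, tied to time-limited purposes) can be sketched as a small data model. This is an illustrative sketch under stated assumptions: `Consent`, `may_share`, and the `"ai_training"` purpose string used in the usage note are hypothetical names, and the rule that every call participant must hold a valid consent is our reading of the two-party recording problem described earlier, not a statement of any specific legal requirement.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass
class Consent:
    participant_id: str
    purpose: str                 # one purpose per consent record
    granted_at: datetime
    expires_at: datetime         # consent is time-limited
    revoked_at: Optional[datetime] = None

    def is_valid(self, purpose: str, now: datetime) -> bool:
        """Valid only for the stated purpose, inside its window, if not revoked."""
        if self.purpose != purpose:
            return False
        if self.revoked_at is not None and self.revoked_at <= now:
            return False
        return self.granted_at <= now < self.expires_at


def may_share(participants: Iterable[str], consents: Iterable[Consent],
              purpose: str, now: datetime) -> bool:
    """True only if every participant holds a valid consent for this purpose."""
    participants = list(participants)
    consents = list(consents)
    if not participants:
        # No known participants means nothing can be verified: deny by default.
        return False
    return all(
        any(c.participant_id == p and c.is_valid(purpose, now) for c in consents)
        for p in participants
    )
```

With this model, a recording of a two-person call is shareable for `"ai_training"` only while both people have an unexpired, unrevoked consent for that exact purpose; revoking either one flips `may_share` back to `False`.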

Risk Analysis & Enforcement Exposure

| Risk Vector | Consequence | Exposure | Mitigation |
|---|---|---|---|
| Regulatory Fines | CCPA/FTC/GDPR penalties that can reach millions of dollars. | High | Documented controls, cooperation, prompt remediation. |
| Class-Action Lawsuits | Emotional-distress and negligence claims. | High | Clear consent terms, insurance, swift response. |
| Mandatory Audits | Ongoing third-party oversight imposed by regulators. | Medium | Independent audits and certifications. |
| Reputation Damage | User exodus, loss of investors. | Very High | Transparent communications and recovery strategy. |
| Operational Disruption | Forced shutdown or feature removal. | Medium-High | Incident resilience planning. |

Build Trust Before It’s Too Late

Neon’s story isn’t just about one app; it’s a cautionary tale for every startup chasing viral growth. Privacy isn’t a box to check after launch; it’s the foundation of brand longevity, and Apple’s positioning shows that privacy can be a competitive advantage. In an era where AI models hunger for human data, users are no longer passive participants; they’re stakeholders in how their voices, images, and identities are handled.

With Captain Compliance, privacy by design becomes achievable even for lean startups. Our tools for data classification, vendor oversight, API risk assessment, and DSAR automation create an environment where innovation thrives securely.

Protect your innovation before regulators protect your users for you. Build compliance in from day one, because in the data economy trust is the ultimate currency.