What emerges is a set of findings that are simultaneously reassuring and challenging. On one hand, privacy programs are delivering measurable ROI almost universally. On the other hand, AI is stress-testing the governance muscle behind those programs—especially around data quality, tagging, ownership, and third-party accountability. Put plainly: organizations have learned how to “do privacy” in the GDPR era, but AI is forcing them to “do governance” as an enterprise capability. The winners will be those that turn governance into practice at the point of data use, not just policy on paper.

Privacy Pays Off

- Privacy pays: 99% of organizations report measurable benefits from privacy investments.

- Innovation leads the ROI stack: 96% report agility and innovation benefits from appropriate data controls.

- AI expands privacy scope: 90% say their privacy programs expanded due to AI (47% “significantly,” 43% “somewhat”).

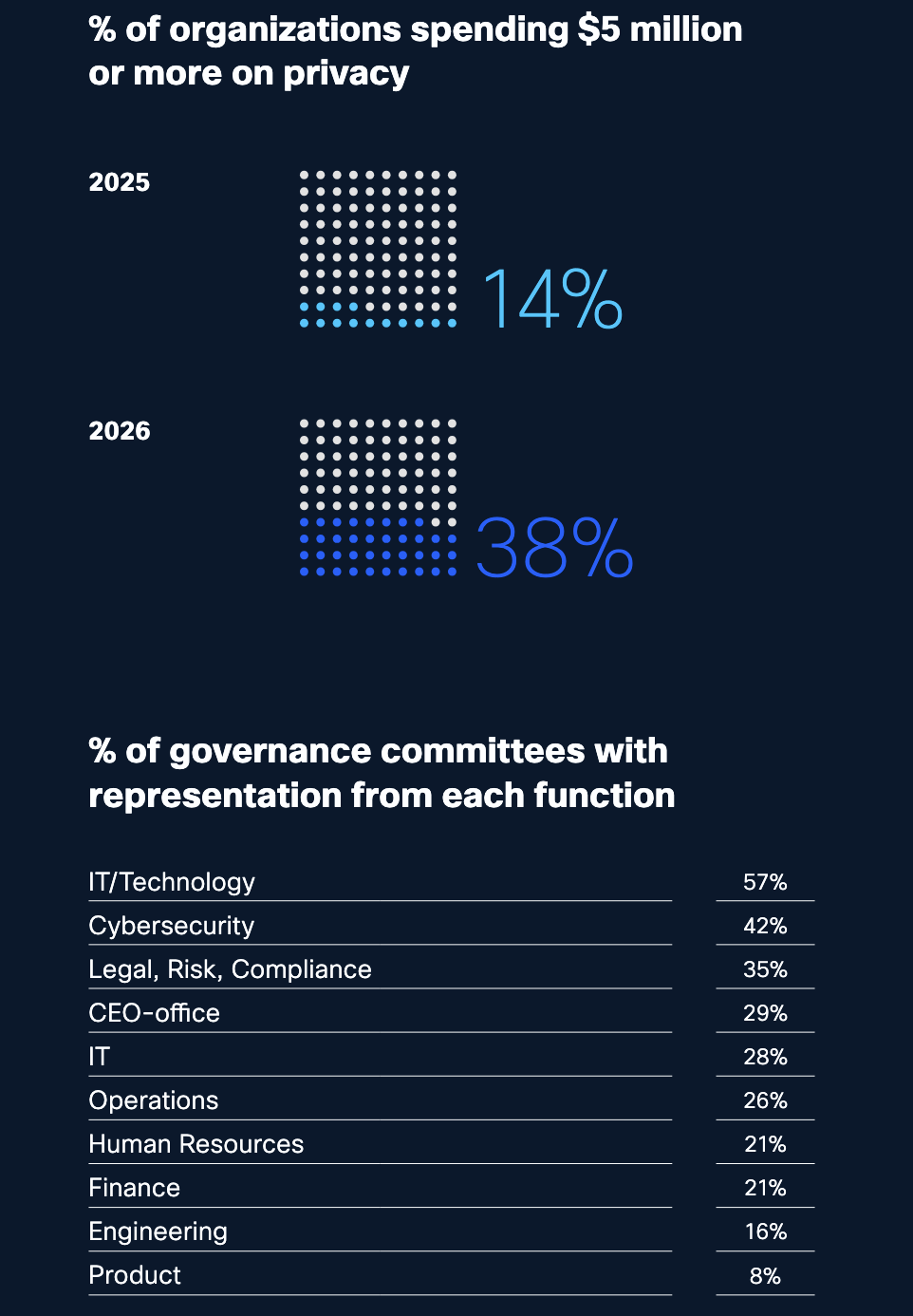

- Spending is rising: 43% increased privacy spending in the past year; 38% spent $5M+ on privacy in the last 12 months (up from 14% reported in early 2025).

- Localization is a pressure point: 85% say data localization adds cost, complexity, and risk to cross-border service delivery; global companies feel it more than single-market players (88% vs. 79%).

- Governance maturity lags: Only 12% describe AI governance committees as mature and proactive.

- Transparency drives trust: 46% rank clear communication about how data is collected and used as the top action to build customer confidence, ahead of compliance (18%) and breach avoidance (14%).

- Data discipline is strained: 65% struggle to access relevant, high-quality data efficiently; 77% identify IP protection of AI datasets as a top concern; 70% acknowledge risk exposure from using proprietary or customer data for AI training.

- Tagging is widespread but uneven: 66% have data tagging systems in place; 16% do not; 18% are developing. Where tagging exists, only about half describe it as comprehensive.

- Vendor governance is improving, contracts lag: 81% say GenAI providers are transparent about data practices, but only 55% require contractual terms defining data ownership, responsibility, and liability.

The rest of this write-up unpacks these statistics section-by-section, pulling out what they imply about program design, governance models, operational risk, and the near-term priorities that distinguish mature organizations from those still treating privacy as a “function” rather than an enterprise-wide capability.

Methodology and why the dataset matters

Most privacy reports struggle with either scope (too narrow geographically or sectorally) or signal quality (too small to infer meaningful differences). Cisco’s 2026 benchmark is notable because it attempts breadth across markets and industries while also emphasizing statistical validity. The respondents are professionals with data privacy responsibilities, which matters: these are not general population perceptions, but operator-level viewpoints grounded in actual program execution and constraints.

Cisco also flags an important caveat: because this year’s sample is nearly double previous editions, some year-over-year comparisons may show “minor variances” from sampling differences. However, Cisco provides a practical interpretation rule: changes of 2% or more are likely true changes. That framing helps distinguish trend from fluctuation.
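The sketch below shows how that interpretation rule might be applied in practice. It reads "2%" as two percentage points. That is an assumption, and the report's exact definition may differ. The sketch then labels two year-over-year moves quoted later in this write-up. The function and constant names are hypothetical.

```python
# Hypothetical helper: applies a ">= 2 points means a likely real change" reading
# of Cisco's interpretation rule. Assumption: "2%" means 2 percentage points,
# not a 2% relative change.
THRESHOLD_POINTS = 2.0


def classify_change(prev_pct: float, curr_pct: float,
                    threshold: float = THRESHOLD_POINTS) -> str:
    """Label a year-over-year change as a likely trend or possible sampling noise."""
    delta = curr_pct - prev_pct
    if abs(delta) >= threshold:
        return "likely real change"
    return "possible sampling variance"


# Year-over-year figures quoted in this write-up: (previous, current).
comparisons = {
    "Spent $5M+ on privacy": (14, 38),
    "Locally stored data is inherently more secure": (90, 86),
}

for label, (prev, curr) in comparisons.items():
    print(f"{label}: {prev}% -> {curr}% ({curr - prev:+d} pts): "
          f"{classify_change(prev, curr)}")
```

Both quoted shifts clear the threshold. A move from 50% to 51% would be labeled possible sampling variance.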

For privacy leaders and product teams, the value of this dataset is not that it provides universal truth. It’s that it surfaces where the majority of serious operators are moving: what they believe is working, where they are spending, what is breaking under AI pressure, and what trust signals customers are rewarding.

Privacy pays: measurable ROI is no longer theoretical

Cisco’s first theme is the most straightforward: privacy investment is paying off in measurable ways. The headline is simple—99% report at least one tangible benefit—but the shape of the benefits is more instructive. Historically, privacy ROI has been framed as “risk reduction” or “regulatory avoidance.” In Cisco’s 2026 findings, that is still present, but it is not the top story. The top story is speed: faster innovation and smoother business execution because data is better controlled, better cataloged, and less likely to trigger customer objections at the point of sale.

Cisco presents a benefits breakdown that reads like a business scorecard rather than a compliance checklist:

| Benefit area from privacy-related investments | % of organizations reporting this benefit |
|---|---|
| Enabling agility and innovation from appropriate data controls | 96% |
| Reducing sales delays or friction due to customer/prospect privacy concerns | 95% |
| Mitigating losses from data breaches | 95% |
| Achieving operational efficiency from organized and cataloged data | 95% |
| Building customer loyalty and trust | 95% |
| Making the organization more attractive to investors | 94% |

Two implications matter here. First, privacy is being tied directly to revenue motion. If 95% of organizations say their privacy investments reduce sales delays caused by customer or prospect privacy concerns, then those concerns are a real gating factor in procurement, and privacy maturity is what clears them. Second, operational efficiency shows up alongside trust and breach mitigation. This reflects a reality many teams discover once they mature beyond first-wave compliance: privacy forces data hygiene, and data hygiene reduces internal waste. Better catalogs, clearer access controls, and disciplined retention policies make systems easier to run and change.

Cisco’s narrative suggests a larger inflection: privacy maturity correlates with broader organizational performance—resilience, retention, and speed to market—especially in environments where AI projects require fast, legitimate access to high-quality data.

The shifting paradigm: AI expands privacy’s mandate

If the first half of the report is about ROI, the second half is about why that ROI is becoming harder to sustain without governance upgrades. AI is the forcing function. Cisco reports that 90% of organizations say their privacy program has expanded due to AI, with the breakdown showing that for nearly half, the expansion is significant rather than marginal:

| How AI affected the scope/mandate of privacy programs | % of organizations |
|---|---|
| Significantly expanded the scope or mandate | 47% |
| Somewhat expanded the scope or mandate | 43% |
| Has not changed the scope or mandate | 9% |
| Unsure | 1% |

This is not a subtle signal. It suggests privacy and governance functions are now being pulled upstream into AI initiatives at design-time, not merely called in at launch-time. That change is rational: AI systems can create new privacy risks even without “new data collection,” because models can infer sensitive traits, repurpose information in unexpected ways, and drive automated decisions that change how individuals are treated. In practice, AI turns data governance into the critical path: without clean, classified, permissioned data, AI teams either stall—or they move fast and create exposure.

Cisco also quantifies the spending response. Forty-three percent increased privacy spending over the past year, and 93% plan to allocate more resources to at least one area of privacy and data governance over the next two years. The language in the report is important: these resources are spread across automation of compliance tools, using AI to monitor adherence to requirements, and compliance with new and changing regulations. In other words, organizations aren’t only spending to “do more of the same.” They are spending to industrialize governance and monitoring at AI scale.

The most dramatic budget signal is the jump in organizations spending $5M or more on privacy in the past 12 months: 38% in 2026 versus 14% in early 2025. This shift suggests privacy programs are being reclassified as strategic infrastructure, not discretionary overhead. In mature organizations, privacy spending is increasingly justified the same way cybersecurity spending is justified: you fund the control plane because everything depends on it.

The governance gap: ambition outpaces readiness

The study’s most consequential finding may be the mismatch between governance adoption and governance maturity. Cisco reports that three in four organizations have a dedicated AI governance committee, yet only 12% describe those committees as “mature and proactive.” That delta explains why so many organizations feel AI pressure without feeling in control of AI outcomes.

Cisco also surfaces the benefits organizations attribute to AI governance when it is done “by design” rather than bolted on. The top-rated benefits cluster around values and quality as much as compliance:

| Perceived benefits of AI governance | % selecting |
|---|---|
| Achieving corporate values (social responsibility, ethical conduct) | 85% |
| Improving product quality (performance and reliability of AI products) | 85% |
| Preparing for regulation | 84% |
| Enhancing employee relations (promoting an ethical culture) | 83% |
| Building trust with customers, partners, and regulators | 79% |

This is revealing because it shows governance is being understood less as “approval bureaucracy” and more as a quality and trust engine. When governance is mature, it reduces late-stage rework, clarifies acceptable use, and makes data and model decisions legible to internal stakeholders and external counterparties.

Cisco also reports on functional representation within governance committees. The distribution underscores why many committees are not “mature”: they are often weighted toward IT and cybersecurity rather than balanced cross-functionally, and product or engineering may be underrepresented. In practice, governance maturity is usually a function of three things: authority (can the committee enforce decisions), integration (is governance embedded into workflows), and accountability (are responsibilities and liabilities defined internally and contractually).

Beyond compliance: transparency becomes the primary trust lever

Cisco’s next pivot is arguably the most pragmatic insight in the report: transparency is the strongest driver of customer trust, outranking both “being compliant” and “not getting breached.” The data point is sharp: 46% of organizations say the most effective action to build customer confidence is providing clear information about what data is collected and how it is used. Compliance with laws comes in at 18%, and avoiding data breaches at 14%.

The implication is subtle but important. Customers don’t experience “compliance” directly; they experience clarity, control, and predictability. A company can be fully compliant and still feel opaque. Conversely, a company can build confidence by making data practices understandable, even to non-experts, and by giving users meaningful visibility and control.

Cisco pairs the trust ranking with evidence that transparency demand is rising and that organizations are responding in concrete channels. Eighty-five percent report that customer demand for transparency has increased over the past three years. More than half (55%) now offer interactive dashboards enabling users to view or control their data in real time. Half (50%) embed transparency directly into contracts so customers and partners can clearly understand how AI systems use information.

This is the blueprint for “trust at scale” in AI-driven services: dashboards and contracts become the new privacy policy. They translate governance decisions into user-visible artifacts and partner-visible commitments. For privacy leaders, this has an operational consequence: transparency must be built from accurate data inventories, strong classification and tagging, and reliable tracking of purposes and access. Without those foundations, transparency becomes aspirational marketing, and that can backfire.

Cisco adds a striking reinforcement: 97% report their transparency efforts with customers are proving effective. Taken at face value, this suggests transparency is not only demanded—it is rewarded. In markets where AI adoption hinges on trust, organizations that make data use legible gain a competitive advantage.

For teams operationalizing transparency across websites and customer touchpoints, a modern consent and preference stack is often the starting line.
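Transparency is only as good as the inventory behind it. One way to picture that dependency is a user-facing transparency view generated directly from a data inventory and a user's purpose-level preferences. The sketch below does this. The `InventoryEntry` and `ConsentState` structures, the purpose names, and the opt-in default are hypothetical assumptions. They are not any specific consent platform's data model.

```python
from dataclasses import dataclass, field


@dataclass
class InventoryEntry:
    """One row of a hypothetical data inventory: what is collected, why, and who receives it."""
    data_category: str
    purposes: list[str]
    shared_with: list[str] = field(default_factory=list)


@dataclass
class ConsentState:
    """A user's hypothetical purpose-level choices (True = allowed)."""
    choices: dict[str, bool]


def transparency_view(inventory: list[InventoryEntry],
                      consent: ConsentState) -> list[dict]:
    """Build one user-facing row per (data category, purpose) pair.

    Purposes with no recorded choice are shown as not allowed, a conservative
    opt-in default assumed for this sketch.
    """
    view = []
    for entry in inventory:
        for purpose in entry.purposes:
            view.append({
                "data": entry.data_category,
                "purpose": purpose,
                "shared_with": ", ".join(entry.shared_with) or "no third parties",
                "allowed": consent.choices.get(purpose, False),
            })
    return view


inventory = [
    InventoryEntry("email address", ["product analytics", "marketing"]),
    InventoryEntry("support chat transcripts", ["AI model improvement"],
                   ["GenAI provider"]),
]
consent = ConsentState({"product analytics": True, "marketing": False})

for row in transparency_view(inventory, consent):
    print(row)
```

The design choice worth noting is that the dashboard rows are derived from the inventory rather than written by hand. If classification or purpose tracking is wrong, the disclosure is wrong too.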

Global pressure points: localization costs rise as trust globalizes

The benchmark treats data localization as a major operational constraint, not a philosophical debate. Eighty-five percent of organizations say localization adds cost, complexity, and risk to cross-border service delivery. Cisco further reports that global companies are more likely than single-market companies to see significant operational challenges (88% versus 79%), which aligns with lived reality: localization multiplies infrastructure footprints, vendor relationships, legal analyses, and operational pathways for data processing.

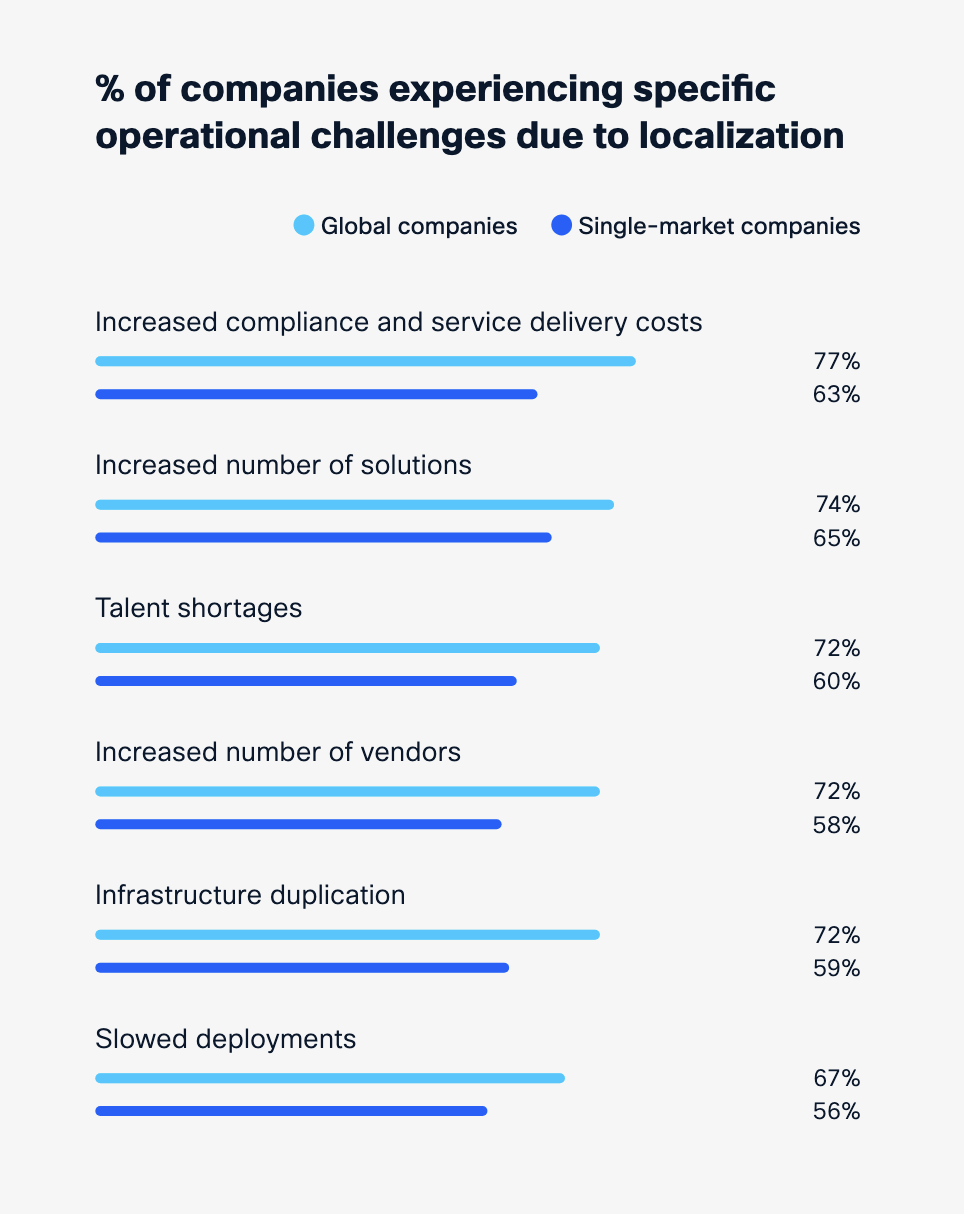

Cisco itemizes the operational challenges companies experience due to localization, and the results show that localization impacts the entire delivery stack: compliance, procurement, hiring, architecture, and deployment speed. Global companies are consistently more impacted than single-market firms.

| Operational challenge attributed to localization | Global companies | Single-market companies |
|---|---|---|
| Increased compliance and service delivery costs | 77% | 63% |
| Increased number of solutions | 74% | 65% |
| Talent shortages | 72% | 60% |
| Increased number of vendors | 72% | 58% |
| Infrastructure duplication | 72% | 59% |
| Slowed deployments | 67% | 56% |

One of the most interesting trend signals Cisco calls out is a gradual erosion of the belief that “locally stored data is inherently more secure.” That belief fell from 90% in 2025 to 86% in 2026. This decline matters because it hints at a more mature security posture: organizations are increasingly distinguishing between jurisdictional constraints (where data can be stored) and actual security outcomes (how well data is protected). In practice, large-scale providers may deliver stronger standardized controls, while localization can create fragmentation that increases risk if it forces teams into weaker local infrastructure or increases architectural complexity.

Cisco also notes that global companies prefer technology partners matching their footprint, with 82% believing global-scale providers are better at managing cross-border data flows. The strategic takeaway is not “global is always better,” but that governance and security capabilities at scale are becoming a differentiator in vendor selection—especially when organizations are trying to maintain consistent controls across regions.
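Maintaining "consistent controls across regions" becomes checkable if each regional deployment is compared against a single baseline. The sketch below assumes hypothetical region names and control labels. It flags only missing baseline controls and treats extra local controls as acceptable.

```python
# Hypothetical baseline every regional deployment is expected to enforce.
BASELINE_CONTROLS = {"encryption_at_rest", "access_logging",
                     "retention_policy", "dsar_workflow"}

# Hypothetical inventory of controls actually enforced per region.
regional_deployments = {
    "eu-central": {"encryption_at_rest", "access_logging",
                   "retention_policy", "dsar_workflow"},
    "ap-south": {"encryption_at_rest", "retention_policy"},
    "us-east": {"encryption_at_rest", "access_logging", "retention_policy",
                "dsar_workflow", "field_tokenization"},
}


def control_gaps(deployments: dict[str, set[str]],
                 baseline: set[str]) -> dict[str, set[str]]:
    """Return, per region, the baseline controls it is missing.

    Regions with no gaps are omitted; extra local controls are not flagged.
    """
    gaps = {}
    for region, controls in deployments.items():
        missing = baseline - controls
        if missing:
            gaps[region] = missing
    return gaps


for region, missing in sorted(control_gaps(regional_deployments,
                                           BASELINE_CONTROLS).items()):
    print(region, sorted(missing))
# prints: ap-south ['access_logging', 'dsar_workflow']
```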

Data discipline under pressure: quality, tagging, and IP protection

AI turns long-standing data problems into immediate blockers. The report captures this pressure in three stats that should make any AI program leader pause:

- 65% struggle to access relevant, high-quality data efficiently.

- 77% identify IP protection of AI datasets as a top concern.

- 70% acknowledge risk exposure from using proprietary or customer data for AI training.

These are not “future risks.” They are present-tense governance realities. If organizations can’t efficiently access high-quality data, they either slow down AI initiatives or they bypass governance in ways that create risk. If IP protection is a top concern, it signals growing awareness that AI datasets are strategic assets—valuable and sensitive, often containing proprietary business information, trade secrets, or competitive insight. And if 70% acknowledge risk exposure from using proprietary or customer data in training, it suggests organizations understand the danger of accidental leakage, inappropriate reuse, or contractual conflicts around data rights.

Cisco’s framing is that privacy’s center of gravity is shifting. The question is no longer only “is data protected,” but “is data understood, structured, and governed well enough to support complex AI systems.” That is a major redefinition. It effectively merges classic privacy principles (purpose limitation, minimization, access control) with enterprise data management disciplines (cataloging, classification, lineage, stewardship).

The report treats data tagging as a key enabling control and quantifies where organizations stand. Sixty-six percent have data tagging systems in place, 18% are currently developing them, and 16% have none. But the sophistication breakdown shows the maturity gap: among those with tagging, only 51% describe the system as comprehensive; 33% report limited tagging; 10% rely on customer-identified tagging; and small fractions fall into “in development,” “manual,” or “ad hoc” approaches.

The governance interpretation is straightforward: partial or fragmented tagging creates blind spots. Blind spots make it difficult to enforce permissions, track purpose, implement retention, or produce credible transparency. In AI contexts, blind spots are amplified because training and inference pipelines can move quickly, pulling in data from many sources. The more automated and agentic systems become, the more governance needs to follow data use continuously rather than focusing solely on upfront policy.
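Tags can enforce a purpose decision such as "may this dataset be used for AI training?" The sketch below illustrates that check. Untagged data is held rather than allowed, which makes the blind-spot problem explicit. The tag vocabulary and the `Dataset` structure are hypothetical, and real taxonomies vary by organization.

```python
from dataclasses import dataclass, field

# Hypothetical tags that bar a dataset from AI training; real taxonomies vary.
BLOCKED_FOR_TRAINING = {"customer_pii", "trade_secret", "contract_restricted"}


@dataclass
class Dataset:
    name: str
    tags: set[str] = field(default_factory=set)  # empty set = untagged blind spot


def training_decision(ds: Dataset) -> str:
    """Decide whether a dataset may enter an AI training pipeline.

    Untagged data is held for classification instead of being allowed by
    default, because the pipeline cannot tell what it contains.
    """
    if not ds.tags:
        return "hold: untagged, needs classification"
    blocked = ds.tags & BLOCKED_FOR_TRAINING
    if blocked:
        return "deny: " + ", ".join(sorted(blocked))
    return "allow"


for ds in [Dataset("public_docs", {"public"}),
           Dataset("crm_export", {"customer_pii", "sales"}),
           Dataset("legacy_share")]:
    print(ds.name, "->", training_decision(ds))
```

Defaulting untagged data to "hold" is a deliberate choice in this sketch. Partial tagging then slows a pipeline down instead of silently letting unclassified data through.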

Rising GenAI and agentic demand: governance shifts closer to practice

One of Cisco’s more operational insights is that organizations are moving away from blunt “ban-first” approaches to GenAI, and toward controls that operate at the point of interaction. The report includes a year-over-year shift showing a sharp decline in organizations using outright bans on AI usage and strict limits on data entry into GenAI tools. The narrative explains why: blanket prohibitions are difficult to enforce and can be misaligned with how AI is used day to day. As GenAI becomes embedded in workflows, the control model has to change.

Cisco’s language suggests governance is moving “from data sourcing to data use.” That is a meaningful reframing. In older governance models, the focus is on what data you have and whether it’s allowed to be stored. In newer models, the focus is on how data is being used in context: who is prompting, what data is being referenced, what outputs are being produced, and what safeguards catch problems before they become incidents.
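A control at the point of interaction can be as simple as a filter that runs before a prompt leaves for a GenAI tool. The sketch below redacts matches and records who prompted and what was caught. The regex rules, including the `PRJ-` project-code format, are hypothetical placeholders. Production guardrails typically rely on richer classifiers and the organization's own data tags.

```python
import re
from datetime import datetime, timezone

# Hypothetical detection rules; production guardrails usually rely on richer
# classifiers and the organization's own data tags.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "internal_project_code": re.compile(r"\bPRJ-\d{4}\b"),
}

audit_log: list[dict] = []


def guard_prompt(user: str, prompt: str) -> str:
    """Redact sensitive matches before a prompt is sent to a GenAI tool and
    record who prompted and what was redacted, so the event is auditable."""
    redacted = prompt
    findings = []
    for label, pattern in PATTERNS.items():
        redacted, count = pattern.subn(f"[{label.upper()} REDACTED]", redacted)
        if count:
            findings.append((label, count))
    audit_log.append({
        "user": user,
        "time": datetime.now(timezone.utc).isoformat(),
        "findings": findings,
    })
    return redacted


print(guard_prompt("analyst-1",
                   "Summarize the PRJ-1234 notes and email jane.doe@example.com"))
# prints: Summarize the [INTERNAL_PROJECT_CODE REDACTED] notes and email [EMAIL REDACTED]
```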

This is also where the report introduces “agentic” systems: AI that can operate with higher autonomy, potentially executing sequences of actions across systems. Cisco argues that as systems become more autonomous, the surface area for data access and decision-making expands—and governance must extend beyond individual prompts to continuous workflows. That implies a need for technical controls (monitoring, guardrails, approval thresholds, audits) and human controls (training, escalation paths, designated accountability).
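For agentic systems, one of the controls named above, an approval threshold, can be sketched as a gate around each action an agent attempts. The risk scores, action names, and threshold below are hypothetical assumptions. Unknown action types are scored as highest risk so that new capabilities do not bypass review.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical risk scores per agent action type; each organization would set its own.
ACTION_RISK = {"read_record": 1, "send_email": 2, "export_dataset": 4, "delete_record": 5}
APPROVAL_THRESHOLD = 4  # actions scoring at or above this need a named human approver


@dataclass
class AgentAction:
    agent_id: str
    action: str
    target: str


decisions: list[dict] = []  # audit trail of every gating decision


def gate(act: AgentAction, approved_by: Optional[str] = None) -> bool:
    """Return True if the agent may proceed, and record the decision for audit.

    Unknown action types are scored as highest risk, so they also need approval.
    """
    risk = ACTION_RISK.get(act.action, max(ACTION_RISK.values()))
    allowed = risk < APPROVAL_THRESHOLD or approved_by is not None
    decisions.append({"agent": act.agent_id, "action": act.action,
                      "target": act.target, "approved_by": approved_by,
                      "allowed": allowed})
    return allowed


print(gate(AgentAction("agent-7", "read_record", "customer/123")))      # True
print(gate(AgentAction("agent-7", "export_dataset", "crm")))            # False
print(gate(AgentAction("agent-7", "export_dataset", "crm"),
           approved_by="privacy-lead"))                                 # True
```

Recording the approver alongside each decision is what turns the gate into "designated accountability" rather than a silent filter.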

In governance terms, this is a shift from static compliance to dynamic oversight: controls that are embedded into the operating fabric of work. It’s the same evolution cybersecurity has gone through (from perimeter defense to continuous detection and response), now mirrored in privacy and data governance for AI.

Vendor governance: transparency is rising, but accountability still needs contracts

As organizations rely more on external AI providers, vendor governance becomes a core element of trust. Cisco reports that 81% say their GenAI providers are transparent about data practices. That sounds encouraging, and it likely reflects market pressure: buyers demand clarity on how prompts, inputs, outputs, telemetry, and fine-tuning data are used and retained.

But the contract layer tells a more cautious story. Only 55% require contractual terms that define data ownership, responsibility, and liability. This gap is critical because transparency without enforceable accountability can fail under stress. When something goes wrong—misuse, leakage, cross-border conflicts, retention issues—the ability to remediate depends on what the contract actually obligates the vendor to do, how liabilities are allocated, and what audit and control rights the customer has.

Cisco also reports that 79% say GenAI providers are willing to negotiate contracts or tool configurations to limit how data is used. The attitudinal breakdown indicates most respondents agree (or strongly agree) with that claim, suggesting the market is maturing toward more configurable data usage terms rather than one-size-fits-all policies. That is meaningful progress, but it raises the bar on procurement discipline: organizations must be capable of negotiating and then enforcing these terms through implementation.
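Procurement teams can track the gap between transparency and accountability per vendor. The sketch below lists, for each GenAI provider, which of the three contract term types highlighted by the benchmark (ownership, responsibility, liability) are still missing. The term labels and the `VendorRecord` structure are hypothetical. A transparency claim is recorded but deliberately does not close any gap.

```python
from dataclasses import dataclass, field

# The three term types the benchmark highlights; the labels are hypothetical.
REQUIRED_TERMS = ("data_ownership", "responsibility", "liability")


@dataclass
class VendorRecord:
    name: str
    claims_transparency: bool  # recorded, but deliberately not used to close gaps
    contract_terms: set[str] = field(default_factory=set)


def accountability_gaps(vendors: list[VendorRecord]) -> dict[str, list[str]]:
    """Map each vendor to the required contract terms it is missing."""
    gaps = {}
    for v in vendors:
        missing = [t for t in REQUIRED_TERMS if t not in v.contract_terms]
        if missing:
            gaps[v.name] = missing
    return gaps


vendors = [
    VendorRecord("Provider A", True, {"data_ownership", "responsibility", "liability"}),
    VendorRecord("Provider B", True, {"data_ownership"}),
]
print(accountability_gaps(vendors))
# prints: {'Provider B': ['responsibility', 'liability']}
```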

The report also notes that 96% say external, independent third-party privacy certifications play an important role in vendor selection. This suggests that privacy credentials are becoming a procurement filter, similar to how SOC 2 reports became a baseline expectation in SaaS. However, certifications should be treated as necessary-but-not-sufficient: they provide signal, not guarantees, and must be paired with contractual clarity and technical verification.

The convergence era: privacy, AI, and governance become one operating model

Cisco closes with a convergence thesis: privacy, data, AI governance, and cybersecurity are increasingly intertwined around the shared requirement of trust. This is not just a structural change—Cisco calls it cultural. Privacy and governance can no longer be confined to specialized teams; they must become shared organizational values.

That cultural shift is not a slogan. It changes how organizations design processes. If governance is shared, then product teams and engineering teams must be co-owners of privacy and accountability outcomes. If governance is shared, then training and safeguards must operate at the workforce level, not only at the policy level. If governance is shared, then vendor accountability must be enforced through procurement, implementation, monitoring, and ongoing audits—not delegated to one team.

Cisco’s stance is pragmatic: organizations best positioned to succeed are those embedding trust into decision-making, data governance, and AI deployment, turning trust from a defensive necessity into a driver of innovation and growth. The implied operational model is a unified control plane—one that governs data classification, access, purpose, retention, transparency artifacts, and vendor contracts alongside AI-specific controls such as evaluation, monitoring, and human-in-the-loop accountability.

Benchmark Suggestions from Cisco’s Report

Cisco’s 2026 benchmark makes a clear argument backed by unusually strong consensus metrics: privacy creates measurable business value, but AI is raising the complexity of sustaining that value without upgrading governance maturity. The report’s most compelling through-line is that the privacy foundations built in the GDPR era are essential but no longer sufficient. AI expands the scope of privacy and governance, increasing demand for high-quality, well-tagged data while raising the stakes for IP protection and contractual clarity.

The following recommendations consolidate Cisco’s signals into a practical agenda. Each item is designed to be implementable, not aspirational: it focuses on decisions and controls that reduce ambiguity, limit exposure, and accelerate responsible AI adoption.

- Build a single, empowered governance body that is cross-functional by design.

Mature governance requires authority and balanced representation. Many organizations have committees, but few describe them as proactive. The goal is not more meetings; it is clearer decision rights, faster escalation, and consistent enforcement across legal, security, product, engineering, and operations.

- Shift governance to the point of data use, not just data storage.

As GenAI becomes embedded in workflows and as agentic systems expand, controls must follow data through prompts, retrieval, tool calls, and outputs. That means monitoring, guardrails, approvals, and auditable workflows that operate continuously.

- Invest in data discipline: tagging, lineage, and access controls that scale with AI.

With 65% reporting difficulty accessing high-quality data efficiently, data discipline is now a competitive constraint. Tagging should move from partial to comprehensive, enabling transparency, permissioning, and retention enforcement.

- Treat IP protection and data rights as first-class governance problems.

With 77% concerned about AI dataset IP protection and 70% acknowledging training-related exposure, organizations should implement strict policies and technical controls governing what data can be used in training and under what rights, including redaction, transformation, and contractual safeguards.

- Engineer transparency as a product feature and a contract feature.

Transparency is the top trust driver. Operationalize it through clear disclosures, dashboards where appropriate, and contractual language that makes data use understandable for customers and partners.

- Close the vendor accountability gap with enforceable contracts and configurable controls.

Transparency claims are helpful, but accountability requires contractual terms defining ownership, responsibility, and liability, plus verification via audits and monitoring. If providers are willing to negotiate configurations, use that leverage to reduce exposure.

- Plan for localization complexity without assuming local equals safer.

Localization adds cost, vendors, duplication, and delays, especially for global organizations. Treat localization as an architecture and governance challenge, and evaluate security outcomes realistically rather than relying on location as a proxy for protection.

For organizations turning these recommendations into execution, the practical question becomes: what is the system that makes governance real at scale? That typically includes (a) data inventories and classification, (b) consent and preference controls, (c) DSAR automation and auditability, (d) contract workflows and vendor tracking, and (e) AI-specific guardrails and monitoring. In the privacy operations layer, platforms like ours are designed to operationalize consent, transparency, and compliance workflows in ways that support broader governance maturity—especially as organizations expand privacy mandates under AI pressure.

The Cisco benchmark ultimately frames privacy’s next era as a governance era: success depends on turning trust into an engineered outcome. The organizations that lead will not be those reacting piecemeal to new rules or new AI capabilities, but those deliberately building unified governance models that keep pace with the speed, scale, and complexity of modern data use.