In late 2025, Israel’s Privacy Protection Authority (PPA) issued a new, comprehensive guide designed to help organizations build artificial intelligence systems that safeguard personal data through Privacy-Enhancing Technologies (PETs). The document, which reflects a maturing regulatory framework, aims to operationalize privacy protection throughout the AI lifecycle — from data preparation and model training to inference and deployment. Israel joins a growing set of jurisdictions emphasizing that responsible AI development must integrate privacy by design and systematic risk management.

The guide presents the core principles behind PETs and explains how these technologies can be combined with organizational safeguards to reduce privacy risk while enabling innovation. It is explicitly intended for data protection officers (DPOs), legal counsel, product and project managers, and AI engineers responsible for assessing and implementing privacy risk mitigation strategies in AI projects.

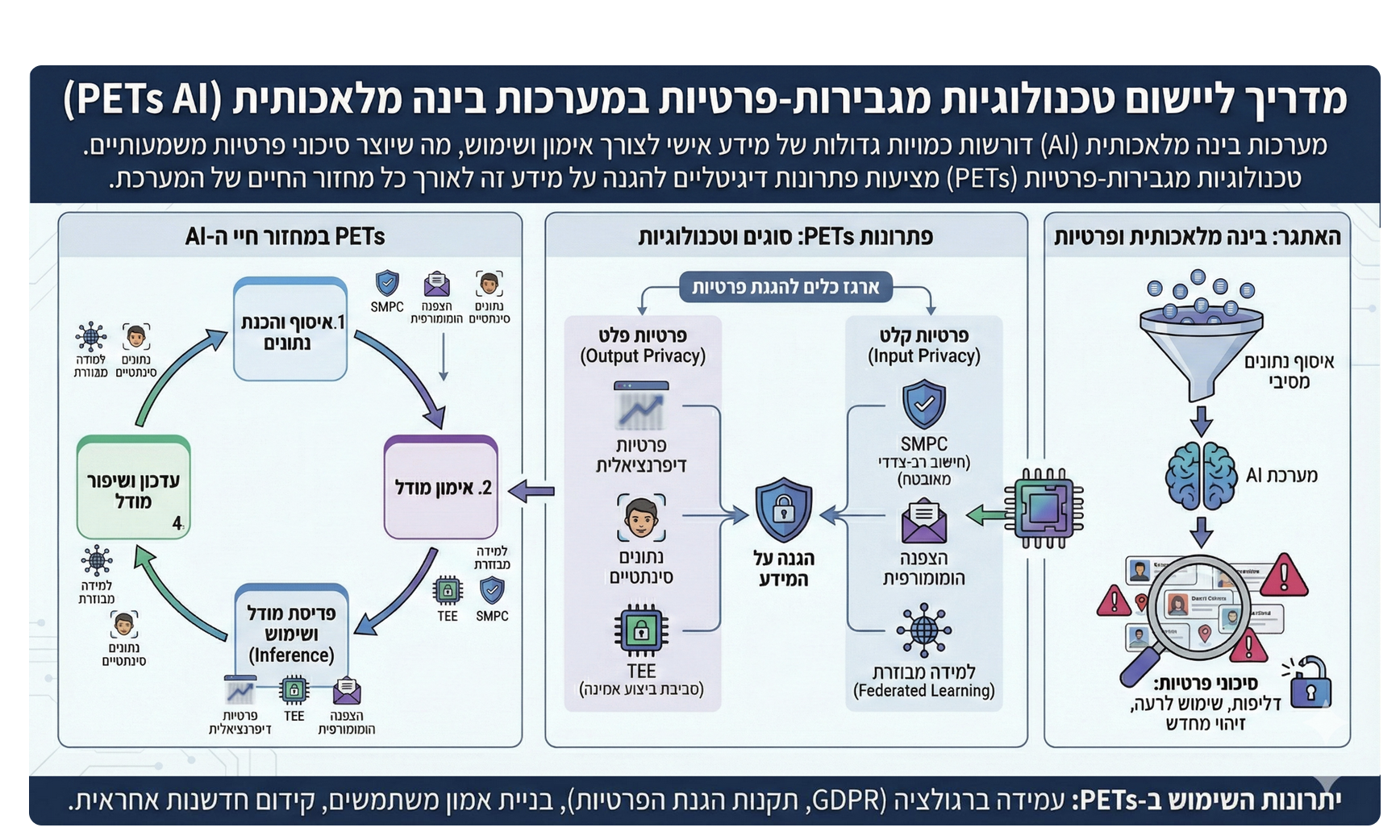

What Are Privacy-Enhancing Technologies and Why They Matter for AI

Privacy-Enhancing Technologies (PETs) are a class of digital tools designed to minimize the exposure of personal data during collection, processing, and model training, while maximizing data utility for legitimate purposes. Unlike traditional privacy compliance measures, which focus on legal obligations and policies, PETs embed privacy protection directly into the technical architecture of systems and workflows.

At their core, PETs help organizations achieve three objectives:

- Reduce personal data visibility — through techniques like anonymization or synthetic data that prevent identification of individuals while preserving analytical value.

- Secure data during processing — through methods such as federated learning or homomorphic encryption that allow computation on encrypted or distributed datasets.

- Increase control and transparency — through tools that log access, enforce permissions, and enable auditability throughout the AI lifecycle.

These technologies complement legal and organizational controls — such as Privacy Impact Assessments (PIAs), accountability frameworks, and contractual safeguards — and help ensure that personal information is protected even as it is used to train and operate AI systems.

Overview of the Israeli Guide on PETs for AI Systems

The PPA guide serves three primary purposes:

- Explain how PETs function and why they are critical in AI projects;

- Provide a taxonomy of PETs, grouped by their role in the data lifecycle;

- Offer practical guidance on selecting and implementing PETs in real-world AI systems.

The guide emphasizes that privacy protection is not a one-off activity. Instead, it must be embedded into system design, starting from strategy and architectural planning through to deployment and ongoing operation. This reflects a broader international trend toward “privacy by design” and aligns with similar principles in global frameworks like the European Union’s GDPR.

Categorizing PETs by Stage of AI Development

The guide breaks down PETs into three major functional categories based on where and how they operate in the AI development lifecycle:

1. Data Collection and Preparation

This category includes techniques that obfuscate or simplify personal information before it enters training datasets. Examples include:

- Anonymization: Removing or altering personal identifiers so that individuals cannot be re-identified.

- Synthetic Data: Artificially generated data that retains statistical properties of real data without exposing actual personal details.

- Noise Addition: Introducing controlled randomness into the data to reduce identifiability while preserving meaningful patterns.
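As a rough illustration of noise addition, the sketch below applies the Laplace mechanism from differential privacy to a simple count. This is one common way to add controlled randomness, not necessarily the method the guide has in mind; the function names, the sample ages, and the `epsilon` value are all assumptions made for the example.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Count matching values, then add Laplace noise.

    A counting query changes by at most 1 when one person is added or
    removed, so its sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical ages, used only for illustration.
ages = [23, 35, 41, 29, 62, 57, 33, 48, 71, 19]
rng = random.Random(0)  # seeded only so the demo is repeatable
released = noisy_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {released:.2f}")
```

A smaller `epsilon` means more noise and stronger protection; a larger one means a more accurate but more revealing answer. A production system would normally use a vetted differential privacy library and a cryptographically sound noise source rather than `random`.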

2. Secure Processing and Model Training

In this phase, sensitive data stays protected even as models are trained or evaluated. Techniques under this category include:

- Federated Learning: Models are trained collaboratively across distributed datasets without centralizing the raw data.

- Homomorphic Encryption: Enables computation on encrypted data, ensuring that sensitive information remains ciphertext during processing.

- Secure Multi-Party Computation (MPC): Allows multiple parties to compute a function jointly without revealing their individual inputs.
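To make the idea of joint computation without revealing inputs more concrete, here is a minimal sketch of additive secret sharing, one of the simplest building blocks used in MPC protocols. It simulates all parties inside a single process and assumes they follow the protocol honestly; the modulus, the function names, and the hospital counts are hypothetical.

```python
import secrets

# Assumption: inputs are non-negative and their sum is below this modulus.
MODULUS = 2**61 - 1


def share(value: int, n_parties: int) -> list[int]:
    """Split value into n additive shares that sum to value modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    last = (value - sum(shares)) % MODULUS
    return shares + [last]


def secure_sum(private_inputs: list[int]) -> int:
    """Simulate a sum where no party sees another party's raw input."""
    n = len(private_inputs)
    # all_shares[i][j] is the share that party i would send to party j.
    all_shares = [share(x, n) for x in private_inputs]
    # Each party j adds the shares it received and publishes only that total.
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    # Combining the published partial sums reveals the overall sum only.
    return sum(partial_sums) % MODULUS


# Hypothetical per-hospital patient counts.
hospital_counts = [120, 75, 310]
print(secure_sum(hospital_counts))  # 505
```

Each individual share is a uniformly random number, so a single share says nothing about the input it came from. Real deployments add networking, authentication, and protection against parties that deviate from the protocol, none of which is shown here.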

3. Access Control and Transparency

These technologies focus on limiting and recording how data is accessed and used:

- Personal Data Stores: Repositories that grant individuals control over who accesses their information and for what purpose.

- Documentation and Transparency Tools: Audit logs and dashboards that detail data access, transformations, and lineage.

The guide underscores that no single technique is sufficient on its own. Instead, organizations should adopt a layered approach, combining multiple PETs to address different risk vectors.

Alignment with Israeli Privacy Law

Israel’s Privacy Protection Law was comprehensively updated in 2024 through Amendment No. 13, which significantly expanded the definition of “personal data” to include any information that can reasonably identify an individual, directly or indirectly. PETs help organizations meet their legal obligations by reducing the identifiability of data. Where obfuscation or encryption effectively prevents re-identification, certain obligations under the law may not apply, which significantly reduces compliance risk.

This intersection of law and technology is important: while PETs can mitigate risks, processing of personal data — even using PETs — remains subject to full legal requirements unless the data no longer meets the legal definition of personal information. This means organizations must still conduct proper impact assessments, maintain lawful bases for processing, and meet transparency and accountability obligations.

Global Context: Why This Guide Matters

Israel’s release of practical guidance for PETs in AI reflects a broader global shift toward embedding privacy directly into technology design rather than treating it as an afterthought. Regulators in other jurisdictions, including the European Union and OECD member states, have also published frameworks and recommendations that elevate PETs as key tools for trustworthy AI.

For example, international policy organizations characterize PETs as essential for enabling collaboration on sensitive datasets without compromising individual privacy, a capability that is increasingly prioritized as AI systems scale in scope and data usage.

AI Governance Perspectives: Practical Action for Risk Leaders

For AI governance professionals, including DPOs, Chief Privacy Officers, and AI risk leads, Israel’s PETs guide offers actionable direction on how to operationalize privacy protections within AI programs. The following principles should inform governance frameworks:

1. Build Privacy Risk Assessments into the AI Lifecycle

Privacy risk assessments should not be one-off exercises. They should be integrated at key stages: requirements gathering, system architecture, data selection, model training, and post-deployment monitoring. PETs should be evaluated as part of these assessments, with risk matrices documenting how each technology reduces specific threat vectors.

2. Prioritize Explainability and Auditability

PETs should be paired with documentation that enables verification and audit. This includes access controls, cryptographic logs, model documentation, and evidence of how PETs limit exposure of personal information. Auditable controls help satisfy both legal compliance and internal governance standards.
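One way to make an audit log verifiable is to chain entries together with hashes, so that editing an earlier entry invalidates everything after it. The sketch below is a simplified illustration of that idea; the entry layout, the function names, and the sample events are assumptions, not a format taken from the guide.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous entry's hash together with a canonical event encoding."""
    payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], event: dict) -> None:
    """Append an event, linking it to the hash of the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append(
        {"event": event, "prev_hash": prev_hash, "hash": _digest(prev_hash, event)}
    )


def verify_log(log: list[dict]) -> bool:
    """Recompute every hash in order; any edited entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical access events.
audit_log: list[dict] = []
append_entry(audit_log, {"actor": "analyst-1", "action": "read", "dataset": "training_v3"})
append_entry(audit_log, {"actor": "pipeline", "action": "anonymize", "dataset": "training_v3"})
print(verify_log(audit_log))  # True

audit_log[0]["event"]["actor"] = "someone-else"  # simulate tampering
print(verify_log(audit_log))  # False
```

A hash chain only detects tampering when the latest hash is stored or witnessed somewhere the log's editor cannot reach. Someone who can rewrite the entire log can also recompute every hash, so this complements access controls and does not replace them.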

3. Test PET Efficacy Against Realistic Use Cases

Many PETs introduce tradeoffs between utility and protection. Governance teams should establish red-team exercises and adversarial testing to evaluate whether PET implementations hold up against realistic threats, including re-identification attempts and inference attacks.
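A simple starting point for re-identification testing is to measure how many records are unique on a set of quasi-identifiers, since unique records are the easiest to link to outside data. The following sketch computes a k-anonymity value and the fraction of singled-out records; the field names and sample rows are hypothetical, and a real red-team exercise would go well beyond this check.

```python
from collections import Counter


def _group_sizes(records: list[dict], quasi_identifiers: list[str]) -> Counter:
    """Count how many records share each quasi-identifier combination."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)


def k_anonymity(records: list[dict], quasi_identifiers: list[str]) -> int:
    """Size of the smallest group; returns 0 for an empty dataset."""
    groups = _group_sizes(records, quasi_identifiers)
    return min(groups.values()) if groups else 0


def unique_fraction(records: list[dict], quasi_identifiers: list[str]) -> float:
    """Fraction of records whose quasi-identifier combination is unique."""
    if not records:
        return 0.0
    groups = _group_sizes(records, quasi_identifiers)
    singled_out = sum(1 for size in groups.values() if size == 1)
    return singled_out / len(records)


# Hypothetical "anonymized" rows with direct identifiers already removed.
records = [
    {"zip": "6100", "birth_year": 1985, "sex": "F"},
    {"zip": "6100", "birth_year": 1985, "sex": "F"},
    {"zip": "6100", "birth_year": 1990, "sex": "M"},
    {"zip": "6200", "birth_year": 1990, "sex": "M"},
]
quasi_ids = ["zip", "birth_year", "sex"]
print(k_anonymity(records, quasi_ids))      # 1
print(unique_fraction(records, quasi_ids))  # 0.5
```

Here half of the rows are unique on the three fields, so the dataset is only 1-anonymous. Dropping or generalizing `zip` would raise k to 2 in this toy example, which is the kind of utility-versus-protection tradeoff the assessment should document.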

4. Embed Accountability Across Teams

Operational governance cannot reside solely with privacy or legal teams. AI developers, product managers, and security engineers must be accountable for aligning PET integration with organizational risk policies. Documentation and training are essential to bridge functional silos.

Comparing Regulatory Approaches: U.S., EU, and Israel

| Aspect | United States | European Union | Israel |
|---|---|---|---|
| Legal Basis | Fragmented state and federal privacy laws; sector-specific AI guidance emerging. | GDPR privacy protections, alongside the AI Act (adopted in 2024) covering systemic AI risks. | Privacy Protection Law amended comprehensively; PETs guide supports regulator interpretation for AI. |
| Privacy Requirements | State laws (e.g., California) mandate opt-out rights and security controls; no unified federal framework yet. | Broad data subject rights, accountability, DPIAs, and AI Act risk tiering. | Heavy emphasis on lawful bases, transparency, impact assessments, and PET usage as risk mitigation. |
| AI Regulation Focus | Sector-by-sector guidance; enforcement actions focus on misuse and harmful outputs rather than prescriptive controls. | Comprehensive risk-based regulation; systemic AI risks and pre-deployment assessments required. | Integrates privacy into AI governance; emphasizes PETs to complement legal obligations. |
| Operational Expectations | Documentation and transparency encouraged, less prescriptive; governance varies widely. | Explicit requirements for documentation, human oversight, and safety controls under the AI Act. | PPA guidance indicates PETs should be part of integrated privacy risk controls with documented evidence. |

Challenges and Limitations of PET Implementation

While PETs are powerful, they come with tradeoffs. Techniques like synthetic data and differential privacy may affect model fidelity or introduce bias if not properly tuned. Secure computation methods such as homomorphic encryption often increase computational load and may require specialized infrastructure. Effective deployment also depends on high-quality governance, skilled personnel, and ongoing monitoring — not just technology adoption.

Despite these challenges, the guide encourages organizations to adopt PETs as part of a layered privacy strategy — balancing legal compliance, risk tolerance, and business objectives while continually evaluating performance and risk.

---

Israeli PETs Privacy-Preserving AI

Israel’s PETs for AI guidance marks a significant milestone in the intersection of privacy regulation and artificial intelligence governance. By emphasizing technologies that reduce personal data exposure and embed protection into system architecture, the guide aligns with a global move toward privacy by design and accountable AI. For privacy professionals, AI developers, and organizational leaders, the practical frameworks and governance principles outlined in the guide provide a roadmap for balancing innovation with rights protection in an era of pervasive AI use.