The European Data Protection Supervisor’s (EDPS) Guidance for Risk Management of Artificial Intelligence Systems, released on November 11, 2025, serves as a critical roadmap for EU Institutions, Bodies, Offices, and Agencies (EUIs) grappling with the integration of AI into personal data processing. The document does more than outline risks: it demands a proactive, accountable approach to embedding data protection principles into every stage of the AI lifecycle. AI promises transformative efficiency, but it also amplifies threats to fundamental rights such as privacy, potentially at a scale and speed not seen before. Without rigorous safeguards, AI systems can perpetuate biases, leak sensitive data, or erode trust in public institutions. The guidance is best read not as a checklist but as a foundational framework. It acknowledges the complexity of AI supply chains and states plainly that its recommendations are non-exhaustive.

Key Takeaways

- Risk-Centric Approach: Grounded in ISO 31000:2018, the guidance concentrates on identifying and treating risks to data subjects’ rights across five principles: fairness, accuracy, data minimization, security, and data subjects’ rights.

- AI Lifecycle Integration: Risks emerge across phases from inception to retirement; procurement is highlighted as a pivotal early intervention point to avoid downstream issues.

- Interpretability as Foundation: Unexplainable “black box” AI systems undermine compliance. Explainability techniques such as LIME and SHAP support auditing and trust-building.

- Balanced Innovation and Protection: While technical controls are detailed, controllers must conduct tailored assessments; the guidance complements but doesn’t replace DPIAs or AI Act obligations.

- Practical Tools: Annexes provide metrics benchmarks, risk overviews, and phase-specific checklists to operationalize the framework.

Why This Matters Now

AI systems now process vast personal datasets, from predictive analytics in HR to generative tools in policy drafting, and EUIs face heightened scrutiny as a result. The guidance arrives amid evolving regulation such as the AI Act and urges a “data protection by design” ethos. Adopting its strategies could help EUIs avoid contested AI-driven decisions, keep the interests of affected individuals in view, and still innovate ethically. Challenges persist, however. Complex models may resist full interpretability, and resource constraints could hinder implementation.

Core Principles at a Glance

The guidance dissects five key EUDPR principles through AI-specific lenses:

| Principle | Key Risks | Example Mitigation |
|---|---|---|
| Fairness | Bias in data/training, overfitting, algorithmic/interpretation bias | Quality audits, regularization techniques |
| Accuracy | Inaccurate outputs, data drift, unclear provider info | Hyperparameter optimization, drift detection |
| Data Minimization | Indiscriminate collection/storage | Data sampling, pseudonymization |
| Security | Output disclosure, breaches, API leaks | Differential privacy, encryption, RBAC |
| Data Subjects’ Rights | Incomplete identification/rectification/erasure | Machine unlearning, metadata tracking |

This snapshot underscores how the principles interlock. Biased or low-quality training data, for example, undermines fairness and accuracy at once. Excessive data collection enlarges the attack surface that security controls must protect.

Comprehensive Analysis: Unpacking the EDPS AI Risk Management Guidance

In the rapidly evolving landscape of artificial intelligence, algorithms increasingly mediate decisions affecting millions. Against this backdrop, the European Data Protection Supervisor (EDPS) has issued a landmark document: Guidance for Risk Management of Artificial Intelligence Systems, dated November 11, 2025. This 50+ page tome is more than a regulatory nudge. It is a clarion call for EU Institutions, Bodies, Offices, and Agencies (EUIs) to weave data protection into the very fabric of AI deployment. The guidance draws on Regulation (EU) 2018/1725, the data protection regulation for EU institutions (EUDPR), and applies a structured risk management lens to AI’s unique challenges, from opaque “black boxes” to sprawling supply chains. As we dissect its layers, we’ll explore not just what it says but why it resonates in 2025’s AI-saturated world, where generative models hallucinate facts and facial recognition systems perform unevenly across demographic groups. This analysis expands on the document’s core elements. It interweaves practical implications, real-world analogies, and forward-looking critiques to make the guidance actionable for policymakers, developers, and ethicists alike.

Executive Summary: Setting the Stage for Accountable AI

The guidance opens with a sobering executive summary that frames AI as a double-edged sword: immense potential shadowed by serious risks to privacy and non-discrimination. The EUDPR’s accountability principle (Articles 4(2) and 71(4)) places the burden on EUIs as controllers. They must identify and mitigate these risks and be able to demonstrate that they have done so. The document zeroes in on technical controls for five principles: fairness, accuracy, data minimization, security, and data subjects’ rights. It is explicitly non-exhaustive and urges EUIs to tailor their assessments through Data Protection Impact Assessments (DPIAs). Notably, it positions itself as a complement to earlier EDPS tools, such as the June 2024 Orientations on Generative AI. It broadens the scope to all AI while narrowing the focus to technical mitigations.

Why does this matter? Under the AI Act, the EDPS wears a dual hat as data protection authority and as market surveillance authority for EUIs, and this guidance bridges the two roles. It warns of AI’s “intricate supply chains,” in which multiple actors process data in different ways, much like the supply chain vulnerabilities seen elsewhere in global tech. For EUIs, ignoring this could erode public trust. Imagine an AI-flagged fraud alert wrongly targeting marginalized communities because of biased training data. The summary’s humility (it “refrains from ranking likelihood and severity”) invites nuance and favors context-specificity over one-size-fits-all edicts.

Introduction: Objectives, Scope, and Audience

Section 1 lays the foundations. Its objective is to guide EUIs in spotting and mitigating risks to fundamental rights during AI procurement, development, and deployment. It complements Part II of the Accountability Toolkit (on DPIAs) and the generative AI orientations, but it carves out its own niche. It is broader in scope (all AI types) yet narrower in focus (technical strategies only). This isn’t a compliance bible. It’s an analytical scaffold for risk assessment, without legal absolutes.

Scope is defined through ISO 31000:2018’s risk vocabulary. Sources are the AI-based processing of personal data, events are impediments to rights, consequences are the resulting harm, and controls are the mitigations. Events are pegged to EUDPR non-compliance, which serves as a proxy for broader rights protection. The audience spans the AI ecosystem: developers, data scientists, IT professionals, DPOs, and coordinators. Data protection is everyone’s remit.

The implications are significant. By focusing on controls that are “technical in nature,” the guidance democratizes risk management and equips non-lawyers with tools such as regularization techniques. It is also candid about its limits. It does not rank risks, because that assessment belongs to each EUI. This respects the reality of resource-strapped teams while guarding against over-reliance on the guidance itself, since controllers remain responsible for full EUDPR compliance.

Risk Management Methodology: A Blueprint from ISO 31000

Section 2 anchors in ISO 31000:2018’s iterative process: establish context, identify risks, analyze (likelihood x impact via matrices), evaluate against appetite, treat (avoid/mitigate/transfer/accept), monitor, and review. Figures 1 and 2 visualize assessment and qualitative matrices, grading likelihood/impact from “Very Low” to “Very High.”
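The “Risk = Likelihood × Impact” arithmetic can be made concrete with a minimal sketch. It assumes the five qualitative levels map to the scores 1 to 5. That mapping and the banding thresholds in `band()` are illustrative assumptions, not values taken from the guidance. Each EUI sets its own scale and risk appetite.

```python
# Five qualitative levels, as in the guidance's matrices; the 1-5 mapping is an assumption.
LEVELS = ["Very Low", "Low", "Medium", "High", "Very High"]

def score(likelihood: str, impact: str) -> int:
    """Risk = Likelihood x Impact, with each level mapped to 1..5."""
    return (LEVELS.index(likelihood) + 1) * (LEVELS.index(impact) + 1)

def band(risk_score: int) -> str:
    """Hypothetical banding; each EUI defines its own thresholds and risk appetite."""
    if risk_score >= 15:
        return "High"
    if risk_score >= 8:
        return "Medium"
    return "Low"

# Print the full 5x5 matrix: rows are likelihood, columns are impact.
for likelihood in LEVELS:
    row = [score(likelihood, impact) for impact in LEVELS]
    print(f"{likelihood:>9} | " + " ".join(f"{s:2d}" for s in row))

print(band(score("High", "Very High")))  # 4 x 5 = 20 -> "High"
```

A numeric mapping makes scores sortable. The qualitative labels remain what decision-makers read.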

The guidance spotlights identification and treatment, deeming analysis/evaluation too context-bound. For EUIs, this means building risk registers per AI system, potentially triggering EDPS prior consultation (EUDPR Article 40) if mitigations falter. In practice, this could manifest as phased pilots: assess a chatbot’s bias risk pre-full rollout, iterating via user feedback loops.
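A per-system risk register can start as a list of structured entries. The sketch below is hypothetical: the `RiskEntry` fields, the `HIGH_RESIDUAL` threshold, and the chatbot entries are assumptions for illustration. The code only flags entries for review. Whether EUDPR Article 40 prior consultation is actually required remains a legal assessment by the controller.

```python
from dataclasses import dataclass, field

HIGH_RESIDUAL = 15  # hypothetical threshold on a 1-25 likelihood x impact scale

@dataclass
class RiskEntry:
    risk_id: str          # e.g. mirroring the guidance's numbering, such as "5.1.2"
    description: str
    inherent: int         # score before treatment
    treatment: str        # "avoid", "mitigate", "transfer" or "accept"
    residual: int         # score expected after the planned controls
    controls: list[str] = field(default_factory=list)

def review_candidates(register: list[RiskEntry]) -> list[str]:
    """IDs whose residual risk stays high: candidates for a prior-consultation review."""
    return [entry.risk_id for entry in register if entry.residual >= HIGH_RESIDUAL]

# Hypothetical register for a chatbot pilot.
register = [
    RiskEntry("5.1.2", "Training data under-represents non-English speakers", 16,
              "mitigate", 6, ["reweighting", "pre-rollout bias audit"]),
    RiskEntry("5.4.3", "Public API exposes conversation logs", 20,
              "mitigate", 16, ["RBAC", "throttling"]),
]
print(review_candidates(register))
```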

Critically, it highlights AI’s opacity. Adverse impacts are “not yet fully assessed,” so controllers are urged to look beyond EUDPR compliance as a proxy and to consider impacts on fundamental rights holistically. The section’s strength is accessibility. Its matrices demystify “Risk = Likelihood × Impact” and let even junior analysts contribute. One potential controversy is that, by centering technical controls, it may underplay socio-economic biases in how risk appetite is set, along with the cultural shifts needed to address them.

The AI Lifecycle: From Cradle to Grave

Section 3 demystifies AI’s arc. It distinguishes AI systems from AI models. Per AI Act Article 3(1), AI systems are machine-based systems that operate with varying levels of autonomy and infer from their inputs how to generate outputs. Models are the components whose learned parameters capture patterns in data. Figure 3 maps nine phases: Inception/Analysis, Data Acquisition/Preparation, Development, Verification/Validation, Deployment, Operation/Monitoring, Continuous Validation, Re-evaluation, Retirement. Each phase harbors risks. For example, data preparation can introduce bias, and operation is exposed to drift.

Procurement, governed by Financial Regulation 2024/2509, runs in parallel through four phases. Publication/Transparency comes first, then the Call for Tenders (specifications, including risk guarantees), then Selection/Award, and finally Execution, which mirrors the development phases. Blue boxes in the guidance’s figure flag the phases where risks arise, stressing early scrutiny of tenders to preempt issues.

This lifecycle isn’t linear but cyclical, because continuous learning demands perpetual validation. Analogies help. Think of data preparation as curating a library (quality over quantity) and development as authoring (bugs as plot holes). For EUIs, the emphasis on procurement is gold. Embedding DPO input in tenders helps avoid “buyer’s remorse” over biased off-the-shelf models. The main challenge is resource-intensive re-evaluation, especially for legacy systems.

Interpretability and Explainability: The Black Box Bane

Section 4 elevates interpretability and explainability to a “sine qua non” and distinguishes them from transparency, which is user-facing. Interpretability is human understanding of a model’s mechanics, such as the clear formula of a linear regression. Explainability refers to post-hoc clarifications, such as LIME visualizations for CNNs. Risk 4.1.1: uninterpretable systems hobble trust, bias detection, and audits.

Measures include comprehensive documentation (architecture, data sources, known biases), SHAP/LIME, and statistical analyses, applied from system selection through re-evaluation. Through an editorial lens, this counters AI hype. Without explainability, EUIs risk “algorithmic authoritarianism,” where decisions evade scrutiny. Example: a hiring AI favors certain demographics, and LIME reveals the feature weights driving its decisions, which enables debiasing. The guidance also acknowledges the divide between experts and users and pushes for intuitive tools.
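The guidance names SHAP and LIME without prescribing an implementation. As a self-contained illustration, the sketch below avoids the `shap` library and instead uses the known closed form of SHAP values for a linear model, assuming independent features. Each feature’s contribution is its weight times its deviation from the background mean. The hiring features, weights, and data are hypothetical.

```python
# Hypothetical hiring scorer: a linear model over three illustrative features.
FEATURES = ["years_experience", "test_score", "cv_gap"]
WEIGHTS = [0.5, 0.3, -2.0]
BIAS = 1.0

def predict(x):
    """Linear score: bias + sum(w_i * x_i)."""
    return BIAS + sum(w * xi for w, xi in zip(WEIGHTS, x))

def linear_shap(x, background):
    """Exact SHAP values for a linear model, assuming independent features.

    phi_i = w_i * (x_i - mean_i), where mean_i is the background mean of feature i.
    The values sum to predict(x) minus the mean prediction over the background.
    """
    n = len(background)
    means = [sum(row[i] for row in background) / n for i in range(len(WEIGHTS))]
    return [w * (xi - m) for w, xi, m in zip(WEIGHTS, x, means)]

background = [[2, 60, 0], [6, 80, 1], [4, 70, 0], [8, 90, 1]]
candidate = [5, 75, 1]
for name, phi in zip(FEATURES, linear_shap(candidate, background)):
    print(f"{name:>16}: {phi:+.2f}")
```

In this toy case, the candidate’s below-average score is attributed entirely to the hypothetical `cv_gap` feature (−1.00). An audit would want to examine that kind of proxy variable.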

Risks to Core Data Protection Principles: A Granular Breakdown

Section 5 is the guidance’s engine. It breaks each principle down into risks, descriptions, affected phases, and measures. It’s detailed yet pragmatic, blending theory with technique.

5.1 Fairness: Combating Bias at Every Turn

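Fairness (Charter Article 8(2), EUDPR Articles 4(1)(a) and 71(1)(a)) demands processing that is non-discriminatory and that individuals would reasonably expect. AI magnifies several kinds of bias. Historical bias arises from training data in which CEOs are predominantly male, sampling bias from urban-heavy health data, and human bias from developer prejudices.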

- 5.1.1: Data Quality Bias—Garbage in, garbage out; mislabels perpetuate errors. Phases: Data prep, verification, re-eval. Measures: Quality policies (cleaning/labeling), audits, outlier detection (KNN), verification (fuzzy matching).

- 5.1.2: Training Data Bias. Non-representative sets skew outputs. Measures: Distribution matching, bias-free feature selection, feature engineering, reweighting, audits.

- 5.1.3: Overfitting—Memorizing noise harms generalization. Measures: Early stopping, simplification, L1/L2 regularization, dropout.

- 5.1.4: Algorithmic Bias—Design flaws discriminate. Measures: Diverse teams, debiasing (adversarial training), audits.

- 5.1.5: Interpretation Bias. Misreading outputs leads to unfair decisions. Measures: Explainable tools, bias audits of explanations, stakeholder training.
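The guidance lists reweighting among the measures for training data bias. One well-known variant is the reweighing scheme of Kamiran and Calders, sketched here on the assumption that a group attribute is available for each record. The group labels and data are hypothetical. After reweighting, each group’s weighted positive rate equals the overall positive rate, which is 0.5 in this example.

```python
from collections import Counter

def reweigh(groups, labels):
    """Kamiran-Calders reweighing: w(g, y) = P(g) * P(y) / P(g, y).

    Up-weights (group, label) combinations that are under-represented relative to
    what independence between group and label would predict.
    """
    n = len(labels)
    count_g = Counter(groups)
    count_y = Counter(labels)
    count_gy = Counter(zip(groups, labels))
    return [count_g[g] * count_y[y] / (n * count_gy[(g, y)]) for g, y in zip(groups, labels)]

def weighted_positive_rate(groups, labels, weights, group):
    """Share of positive labels in `group`, counting each record by its weight."""
    num = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    den = sum(w for g, w in zip(groups, weights) if g == group)
    return num / den

# Hypothetical training set: group A has 4/6 positives, group B only 1/4.
groups = ["A"] * 6 + ["B"] * 4
labels = [1, 1, 1, 1, 0, 0] + [1, 0, 0, 0]
weights = reweigh(groups, labels)
print(weighted_positive_rate(groups, labels, weights, "A"))
print(weighted_positive_rate(groups, labels, weights, "B"))
```

The weights would then be passed to a learner that supports per-sample weights. Many scikit-learn estimators, for example, accept a `sample_weight` argument in `fit`.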

This cascade, from data to interpretation, illustrates how broad fairness is. The racial disparities in error rates reported for the COMPAS recidivism tool are a real-world example, and audits of this kind might have surfaced them earlier. Controversy remains, because metrics such as demographic parity may conflict with accuracy, and such trade-offs demand ethical deliberation.
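Fairness metrics can also disagree with one another, not only with accuracy. This sketch computes demographic parity (equal selection rates) and equal opportunity (equal true positive rates) on hypothetical predictions. The toy data satisfies demographic parity while violating equal opportunity.

```python
def _rate(values):
    if not values:
        raise ValueError("no records for this group/condition")
    return sum(values) / len(values)

def demographic_parity_diff(y_pred, groups, a, b):
    """Difference in selection rates P(pred=1 | group) between groups a and b."""
    def selection(g):
        return _rate([p for p, gg in zip(y_pred, groups) if gg == g])
    return selection(a) - selection(b)

def equal_opportunity_diff(y_true, y_pred, groups, a, b):
    """Difference in true positive rates P(pred=1 | y=1, group) between a and b."""
    def tpr(g):
        return _rate([p for t, p, gg in zip(y_true, y_pred, groups) if gg == g and t == 1])
    return tpr(a) - tpr(b)

groups = ["A"] * 4 + ["B"] * 4
y_true = [1, 1, 0, 0] + [1, 0, 0, 0]
y_pred = [1, 0, 1, 0] + [1, 1, 0, 0]
print(demographic_parity_diff(y_pred, groups, "A", "B"))         # selection rates 0.5 vs 0.5
print(equal_opportunity_diff(y_true, y_pred, groups, "A", "B"))  # TPR 0.5 vs 1.0
```

Which metric to prioritize is exactly the kind of context-dependent choice the guidance leaves to the controller.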

5.2 Accuracy: Beyond Stats to Legal Fidelity

Accuracy in the legal sense (EUDPR Article 4(1)(d)) means personal data that are factually correct and kept up to date. Statistical accuracy, by contrast, concerns the correctness of model outputs. Risks:

- 5.2.1/3: Inaccurate Outputs. Hallucinations or poor-quality data produce wrong results. Phases: Data prep to re-eval. Measures: Quality data, edge-case coverage, diversity, hyperparameter optimization (HPO), human oversight, neurosymbolic alternatives.

- Data Drift Example—Economic shifts invalidate models. Measures: Drift detection, quality monitoring, retraining, feedback.

- 5.2.2/5: Provider Opacity. Vague or incomplete information from providers hides flaws. Measures: Documentation of specifications, transparency, cybersecurity, and governance; metrics such as FPR/FNR parity, calibration, and equal opportunity (EOP).
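The guidance calls for drift detection without prescribing a statistic. One common choice is the Population Stability Index (PSI), sketched below for a single numeric feature. The bin edges, the `eps` floor, and the rule-of-thumb thresholds in the docstring are industry heuristics assumed here, not values from the guidance. Under those heuristics, a PSI below 0.1 is read as stable and one above 0.25 as a significant shift.

```python
import math
from bisect import bisect_right

def bin_shares(values, edges):
    """Share of values per bin; `edges` are sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]

def psi(reference, current, edges, eps=1e-6):
    """Population Stability Index: sum over bins of (cur - ref) * ln(cur / ref).

    Rule of thumb (heuristic): < 0.1 stable, 0.1-0.25 moderate, > 0.25 significant drift.
    """
    total = 0.0
    for r, c in zip(bin_shares(reference, edges), bin_shares(current, edges)):
        r, c = max(r, eps), max(c, eps)  # avoid log(0) for empty bins
        total += (c - r) * math.log(c / r)
    return total

# Hypothetical monthly incomes (thousands): training-time sample vs. a post-shock sample.
reference = list(range(100))
current = [v + 50 for v in range(100)]
edges = [25, 50, 75]
print(round(psi(reference, reference, edges), 6))
print(psi(reference, current, edges) > 0.25)
```

A PSI above the chosen threshold would then trigger the follow-ups listed above: quality monitoring, retraining, and feedback.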

Annex 1’s benchmarks (e.g., GLUE, ImageNet) aid validation. Drift is subtle, because models that are stable in normal conditions can falter in a crisis. That calls for vigilant monitoring, especially as EU policy contexts change.

5.3 Data Minimization: Less is More

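EUDPR Article 4(1)(c) requires personal data to be adequate, relevant, and limited to what is necessary. AI’s hunger for data tempts excess. Risk 5.3.1: Indiscriminate collection and storage. Phases: Data prep, verification, re-eval. Measures: Assessing data utility before collection, sampling, anonymization/pseudonymization.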

It is a balancing act: enough data for accuracy, and no more. For example, training on a subset shrinks the surface exposed to breaches. The human benefit is reduced individual exposure, which counters fears of surveillance.
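Sampling and pseudonymization, two of the measures listed, can be combined in a single preprocessing step. This sketch assumes hypothetical field names and uses a keyed hash (HMAC-SHA256) as the pseudonymization technique. Pseudonymized data are still personal data under the EUDPR, so the other principles continue to apply.

```python
import hashlib
import hmac
import random

# Hypothetical fields judged necessary for the purpose; everything else is dropped.
NEEDED_FIELDS = ("age_band", "region", "outcome")

def pseudonymize(identifier: str, key: bytes) -> str:
    """Keyed hash (HMAC-SHA256): the same ID always yields the same pseudonym,
    and re-linking pseudonyms to IDs requires the separately stored key."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()

def minimize(records, key, sample_size, seed=0):
    """Sample records, keep only NEEDED_FIELDS, and replace the direct identifier."""
    rng = random.Random(seed)
    subset = rng.sample(records, min(sample_size, len(records)))
    return [
        {"pid": pseudonymize(r["staff_id"], key), **{f: r[f] for f in NEEDED_FIELDS}}
        for r in subset
    ]

records = [
    {"staff_id": f"S{i:03d}", "name": f"Person {i}", "email": f"p{i}@example.org",
     "age_band": "30-39", "region": "BE", "outcome": i % 2}
    for i in range(10)
]
key = b"store-me-in-a-separate-key-vault"  # hypothetical; never hard-code keys in practice
for row in minimize(records, key, sample_size=3):
    print(row)
```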

5.4 Security: Fortifying the AI Fortress

EUDPR Article 4(1)(f) requires integrity and confidentiality through appropriate technical and organizational measures. AI adds its own threats, such as data poisoning and model inversion. Risks:

- 5.4.1: Output Disclosure—Regurgitation via attacks. Phases: Operation onward. Measures: Minimization, perturbation (generalization/aggregation/differential privacy), synthetics, MEMFREE decoding.

- 5.4.2: Storage/Breaches—Vast data invites compromise. Phases: Broad. Measures: Anonymization, encryption, synthetics, secure coding, MFA.

- 5.4.3: API Leaks—Exposed endpoints. Phase: Operation. Measures: MFA/RBAC, throttling, HTTPS, logging/SIEM, audits, secure dev, patching.
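Differential privacy appears among the output-perturbation measures. Its simplest form, the Laplace mechanism applied to an aggregate count, is sketched here with hypothetical data and ε. This textbook version samples noise with ordinary floating-point arithmetic, which is known to leak information in edge cases. A vetted differential privacy library would be the safer choice in practice.

```python
import math
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale) by inverse-CDF sampling."""
    u = rng.random() - 0.5          # uniform on [-0.5, 0.5)
    while u == -0.5:                # avoid log(0) at the boundary
        u = rng.random() - 0.5
    return -scale * math.copysign(1.0, u) * math.log(1 - 2 * abs(u))

def dp_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """epsilon-DP count. Adding or removing one person changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon suffices."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(42)
ages = [23, 35, 41, 52, 29, 61, 38, 45]
print(dp_count(ages, lambda a: a >= 40, epsilon=1.0, rng=rng))  # true count is 4
```

Smaller ε means more noise and stronger protection, and each released answer consumes privacy budget.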

AI’s novelty is that models are themselves assets that can be stolen. Editorial note: synthetic data helps but can open new attack vectors, so layered defenses remain essential. Controversy persists over how to balance usability with paranoia-level security.
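Throttling is one of the API measures listed. Rate limits also slow the high-volume querying used to extract a model, although they are only a partial defense. A minimal token-bucket sketch follows. The rate, capacity, and API keys are hypothetical, and the injectable `clock` exists only to make the behavior testable.

```python
import time

class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # the API would answer e.g. HTTP 429 Too Many Requests

# One bucket per API key (hypothetical keys): 2 requests/second with bursts of 5.
buckets: dict[str, TokenBucket] = {}

def check(api_key: str) -> bool:
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(rate=2.0, capacity=5)
    return buckets[api_key].allow()

print([check("client-a") for _ in range(7)])
```

Denied requests are also worth logging to the SIEM, since bursts of throttled calls can signal probing.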

5.5 Data Subjects’ Rights: Empowering the Individual

Focus: Access/rectification/erasure challenges in AI. Risks:

- 5.5.1: Incomplete Identification—Hard to locate in models. Phases: Development, operation. Measures: Metadata, retrieval tools (MemHunter), structured schemas.

- 5.5.2: Incomplete Rectification/Erasure—Memorization resists. Measures: Retrieval tools, machine unlearning (exact/approximate), output filtering.
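Metadata tracking, one of the measures for identification, amounts to keeping lineage records that show where a person’s data went. The sketch below is a hypothetical in-memory index, and all names in it are illustrative. It locates source records and the model versions trained on them. It cannot find data memorized inside model weights, which is the gap retrieval tools such as MemHunter aim to address.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Lineage:
    dataset: str            # where the record is stored
    record_id: str          # key within that dataset
    used_in: tuple = ()     # model versions trained on this record

class SubjectIndex:
    """Hypothetical metadata index mapping a data subject to the places their data went."""

    def __init__(self):
        self._index = defaultdict(list)

    def register(self, subject_id, dataset, record_id, used_in=()):
        self._index[subject_id].append(Lineage(dataset, record_id, tuple(used_in)))

    def locate(self, subject_id):
        """All known records for the subject (for access, rectification, erasure)."""
        return list(self._index.get(subject_id, []))

    def affected_models(self, subject_id):
        """Model versions that would need unlearning or retraining after erasure."""
        return sorted({m for entry in self.locate(subject_id) for m in entry.used_in})

idx = SubjectIndex()
idx.register("subject-17", "hr_requests_2024", "rec-881", used_in=["triage-v1", "triage-v2"])
idx.register("subject-17", "feedback_forms", "form-42", used_in=["triage-v2"])
print(idx.locate("subject-17"))
print(idx.affected_models("subject-17"))
```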

Unlearning promises to make a model “forget” without full retraining. The entanglement of deep networks complicates this, because a single parameter may encode information about many individuals. This section humanizes the topic. Rights aren’t abstract; they’re Jane Doe’s request to have her data purged.
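Exact unlearning can be made tractable by design. One known approach is SISA training (Sharded, Isolated, Sliced, Aggregated). The data are split into shards with one sub-model each, so erasing a record requires retraining only the shard that held it. The toy sketch below uses a trivial “model” (the mean of its shard) as a stand-in for a real learner and omits slicing. It illustrates the bookkeeping and is not a production technique or the guidance’s own method.

```python
from statistics import mean

class ShardedMeanModel:
    """Toy SISA-style ensemble: one sub-model per disjoint shard, outputs averaged.

    The sub-model is just the mean of its shard. Because shards are isolated,
    retraining one shard without a record gives exactly the model that would
    have been trained had the record never been there (exact unlearning).
    """

    def __init__(self, records, n_shards):
        # records: dict of record_id -> value, assigned round-robin to shards
        self.shards = [dict() for _ in range(n_shards)]
        for i, (rid, value) in enumerate(sorted(records.items())):
            self.shards[i % n_shards][rid] = value
        self.models = [self._train(s) for s in self.shards]
        self.retrained = 0

    @staticmethod
    def _train(shard):
        return mean(shard.values()) if shard else None

    def predict(self):
        return mean(m for m in self.models if m is not None)

    def forget(self, record_id):
        for k, shard in enumerate(self.shards):
            if record_id in shard:
                del shard[record_id]
                self.models[k] = self._train(shard)  # retrain only this shard
                self.retrained += 1
                return True
        return False

records = {"r1": 1.0, "r2": 2.0, "r3": 3.0, "r4": 4.0}
model = ShardedMeanModel(records, n_shards=2)
print(model.predict())   # average of shard means 2.0 and 3.0
model.forget("r3")       # only shard 0 is retrained
print(model.predict(), model.retrained)
```

More shards make erasure cheaper, but each sub-model sees less data. Real deployments tune that trade-off.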

Conclusion: Toward a Rights-Resilient AI Future

Section 6 synthesizes: AI risks demand systematic, cultural shifts. Recap: Methodology (Ch. 2), lifecycle/procurement (Ch. 3), cross-cuts (Ch. 4), principles/risks (Ch. 5). Non-exhaustive, adaptable—controllers own assessments. Call: Accountability culture, continuous monitoring. EUIs can lead: Innovate responsibly, anchor in rights.

Looking forward, frameworks will need updating as AI evolves, for example toward multimodal systems. Throughout, the focus stays on data subjects, whose lives can hinge on these mitigations.

Annexes: Operational Arsenal

Annex 1: Metrics and Benchmarks

Diverse metrics: NLP (ROUGE, BLEU, GLUE/SuperGLUE, HELM, MMLU); Vision (ImageNet, CIFAR, MNIST). Table expands:

| Type | Benchmark | Description |
|---|---|---|
| NLP/LLM | ROUGE | Evaluates summaries/translations via precision/recall. |
| NLP/LLM | BLEU | Machine translation via n-gram precision. |
| NLP/LLM | GLUE/SuperGLUE | Tasks from classification to reasoning. |
| NLP/LLM | HELM | Assesses accuracy, fairness, toxicity across scenarios. |
| NLP/LLM | MMLU/Pro | Multitask knowledge/reasoning questions. |
| Image Recognition | ImageNet | 14M+ labeled images for object recognition. |
| Image Recognition | CIFAR-10/100 | 60K small images, 10/100 classes. |
| Image Recognition | MNIST | 70K handwritten digits. |
| CV | COCO | Object detection/segmentation/captioning. |
| Speech | LibriSpeech | English audiobook transcription. |
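To show what such metrics compute, here is ROUGE-1 (unigram overlap with clipped counts) in a few lines. This simplified sketch assumes lowercase whitespace tokenization. Reference implementations add their own tokenization and optional stemming, so their scores can differ.

```python
from collections import Counter

def rouge_1(candidate: str, reference: str) -> dict:
    """ROUGE-1: unigram overlap with clipped counts (lowercased, whitespace-tokenized)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # `&` keeps the minimum count per word
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

print(rouge_1("the cat sat on the mat", "the cat is on the mat"))
```

Here 5 of the 6 unigrams overlap, so precision, recall, and F1 all equal 5/6.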

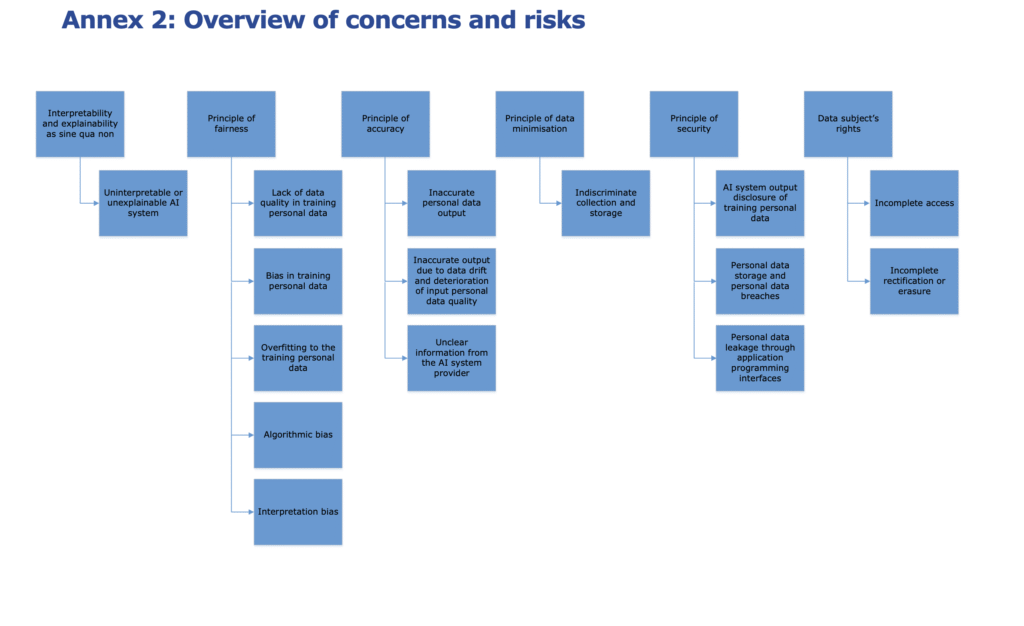

Annex 2: Risks Overview

Concise matrix: Interpretability (unexplainable systems); Fairness (quality/bias/overfitting/algorithmic/interpretation); Accuracy (inaccurate outputs/drift/provider info); Minimization (indiscriminate); Security (disclosure/breaches/API); Rights (incomplete access/rectification).

Annex 3: Lifecycle Checklists

Phase-specific: E.g., Inception—algorithmic bias checks; Data Prep—quality audits, minimization. Tables map risks/measures, e.g.:

| Phase | Principle | Risk |
|---|---|---|
| Inception/Analysis | Fairness | Algorithmic bias |
| Data Acquisition | Fairness | Lack of data quality; Bias in data; Overfitting |
| Verification | Interpretability | Unexplainable system |

These annexes transform theory to toolkit—benchmarks for validation, checklists for audits.

In sum, the EDPS guidance isn’t alarmist; it’s empowering. By systematizing risks, it equips EUIs to harness AI’s promise without sacrificing rights. As debates rage on AI ethics, this document stands as a beacon: Innovation thrives when rooted in accountability. What’s next? Pilot implementations, cross-EUI sharing, and iterative updates to match AI’s pace.