As artificial intelligence becomes embedded in enterprise products, platforms, and services, a growing number of organizations find themselves occupying a dual role: they are both developers of AI systems and providers of those systems to customers or end users. This dual role introduces a distinct set of privacy challenges that traditional compliance models were not designed to address.

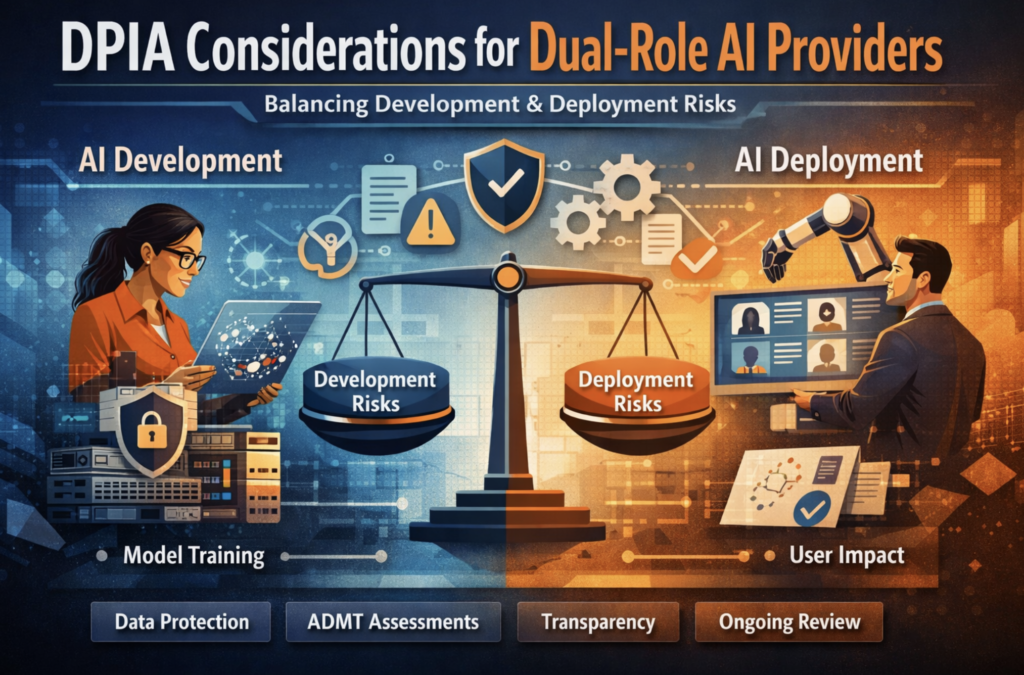

One of the most misunderstood aspects of this challenge is how Data Protection Impact Assessments (DPIAs) should be scoped, structured, and maintained when an organization controls both the creation of an AI model and its downstream deployment. Too often, DPIAs are treated as static documents tied to a single project phase, when in reality they must evolve alongside the AI system itself.

For dual-role AI providers, DPIAs are not simply a regulatory obligation. They are a governance mechanism that connects technical design decisions to legal accountability, ethical risk, and long-term operational resilience.

Understanding the Dual-Role AI Provider Model

A dual-role AI provider typically performs at least two distinct, but interconnected, functions: designing, training, or fine-tuning AI models using personal data; and deploying those models as part of a commercial product, platform, or service.

In practice, this means the organization determines how data is collected, how it is processed during training, how models learn from that data, and how outputs are later generated, shared, or acted upon. This concentration of control increases both responsibility and exposure.

From a privacy perspective, the key issue is that risk does not disappear once training ends. Instead, risk often compounds during deployment, particularly when AI outputs influence decisions about individuals, are reused across multiple customers, or evolve through continuous learning.

DPIAs Are Triggered by Risk, Not by Labels

A common misconception is that DPIAs are required simply because AI is involved. In reality, DPIA obligations (under Article 35 of the GDPR, for example) arise when data processing activities are likely to result in a high risk to individuals’ rights and freedoms. AI frequently meets this threshold because of factors such as scale, opacity, inference capability, and automation.

For dual-role providers, DPIA triggers often occur at multiple stages: during data ingestion and model training; when models are integrated into customer workflows; and when outputs are used to make or support decisions with legal or material effects.

This means a single, narrowly scoped DPIA conducted at the beginning of development is rarely sufficient.

Why Dual-Role Providers Cannot Rely on a Single-Phase Assessment

AI systems are not static products. Models are retrained, features are added, datasets are refreshed, and use cases expand over time. Each of these changes can alter the risk profile in meaningful ways.

A DPIA that only examines the training phase may overlook new categories of personal data introduced post-deployment; secondary uses of AI outputs by customers; model drift that changes accuracy or bias characteristics; and expanded geographic or demographic reach.

For dual-role providers, the DPIA must therefore function as a living governance artifact, not a one-time compliance exercise.

One DPIA or Many? A Practical Approach

Organizations often struggle with whether to conduct separate DPIAs for development and deployment or to consolidate them into a single assessment. In practice, either approach can be defensible if executed properly.

What matters most is clarity and traceability. Regulators expect organizations to demonstrate that they understand what data is processed, why it is processed, what risks arise at each stage, and how those risks are mitigated.

A consolidated DPIA can be effective if it clearly distinguishes between development-stage risks and deployment-stage risks, and explains how mitigation measures carry forward across both contexts.
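One way to keep a consolidated DPIA traceable is to tag each entry in its risk register with the phase or phases in which the risk arises. The following is a minimal Python sketch under that assumption; the `Phase`, `Risk`, `risks_by_phase`, and `unmitigated` names and the sample entries are hypothetical illustrations, not a prescribed schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    DEVELOPMENT = "development"
    DEPLOYMENT = "deployment"


@dataclass
class Risk:
    risk_id: str
    description: str
    phases: set[Phase]                      # phase(s) in which the risk arises
    mitigations: list[str] = field(default_factory=list)


def risks_by_phase(register: list[Risk]) -> dict[Phase, list[str]]:
    """Group risk IDs by phase so each phase can be reviewed on its own."""
    grouped: dict[Phase, list[str]] = {phase: [] for phase in Phase}
    for risk in register:
        for phase in Phase:
            if phase in risk.phases:
                grouped[phase].append(risk.risk_id)
    return grouped


def unmitigated(register: list[Risk]) -> list[str]:
    """Return IDs of risks that have no documented mitigation yet."""
    return [risk.risk_id for risk in register if not risk.mitigations]


# Hypothetical sample entries, for illustration only.
register = [
    Risk("R1", "Over-collection of training data",
         {Phase.DEVELOPMENT}, ["Attribute-level necessity review"]),
    Risk("R2", "Inference of sensitive attributes from outputs",
         {Phase.DEPLOYMENT}),
    Risk("R3", "Re-identification from model outputs",
         {Phase.DEVELOPMENT, Phase.DEPLOYMENT}, ["Output filtering"]),
]
```

A risk tagged with both phases, such as `R3` here, appears in each phase view, which makes it easier to check that its mitigations carry forward from development into deployment.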

The DPIA as a Bridge Between Legal and Technical Teams

One of the most valuable functions of a DPIA in a dual-role AI environment is its ability to force structured dialogue between privacy professionals, engineers, and product leaders.

Technical teams often focus on performance metrics, accuracy, and scalability. Privacy teams focus on lawfulness, proportionality, and individual rights. A well-constructed DPIA creates a shared framework where these priorities can be evaluated together rather than in isolation.

When DPIAs are introduced early, they can influence design decisions such as whether certain data attributes are truly necessary; how long training data should be retained; whether explainability features should be built in rather than bolted on later; and how opt-out or appeal mechanisms can be technically supported.

Key Areas of Risk Unique to Dual-Role AI Providers

Dual-role providers face a set of risks that differ from those faced by organizations that merely license third-party AI tools.

These risks include re-identification or inference risks arising from model outputs; function creep as models are reused across new contexts; accountability gaps when customers rely on AI outputs without understanding limitations; and over-collection of training data justified by speculative future use cases.

These risks are amplified when the same organization controls both model architecture and deployment conditions.

What an Effective DPIA Should Actually Contain

An effective DPIA goes beyond generic descriptions and demonstrates real understanding of how the AI system operates in practice. At a minimum, it should document data sources used for training, validation, and testing; categories of individuals affected, directly or indirectly; intended and reasonably foreseeable uses of AI outputs; identified risks to fairness, transparency, and autonomy; and specific technical and organizational mitigation measures.

Crucially, mitigation measures must be concrete and realistic. Vague commitments to “monitor outcomes” or “apply appropriate safeguards” are unlikely to withstand scrutiny.

The Role of Ongoing Review and Reassessment

For dual-role AI providers, DPIAs should be revisited whenever there is a material change to the system. This includes significant model updates or retraining; introduction of new data sources; expansion into new markets or customer segments; and changes in how outputs are used or relied upon.
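The triggers listed above can be encoded as a simple check in a release process. This is a minimal sketch, assuming each release is described by a list of change types; the `ChangeType` enum, the `MATERIAL_CHANGES` set, and the `dpia_review_reasons` function are hypothetical names, and what counts as "material" remains a judgment for the privacy team.

```python
from enum import Enum


class ChangeType(Enum):
    MODEL_RETRAINING = "model_retraining"
    NEW_DATA_SOURCE = "new_data_source"
    NEW_MARKET_OR_SEGMENT = "new_market_or_segment"
    OUTPUT_USE_CHANGE = "output_use_change"
    UI_COPY_CHANGE = "ui_copy_change"  # example of a change assumed non-material


# Change types assumed here to require a DPIA review, mirroring the list above.
MATERIAL_CHANGES = frozenset({
    ChangeType.MODEL_RETRAINING,
    ChangeType.NEW_DATA_SOURCE,
    ChangeType.NEW_MARKET_OR_SEGMENT,
    ChangeType.OUTPUT_USE_CHANGE,
})


def dpia_review_reasons(changes: list[ChangeType]) -> list[ChangeType]:
    """Return the material changes in a release, sorted by name.

    An empty list means no trigger fired for this release.
    """
    material = {change for change in changes if change in MATERIAL_CHANGES}
    return sorted(material, key=lambda change: change.value)


# Hypothetical release containing one non-material and two material changes.
release = [
    ChangeType.UI_COPY_CHANGE,
    ChangeType.NEW_DATA_SOURCE,
    ChangeType.MODEL_RETRAINING,
]
reasons = dpia_review_reasons(release)
```

Returning the reasons rather than a bare yes/no keeps a record of why a review was opened, which supports the traceability regulators expect.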

Failure to reassess DPIAs over time can create a false sense of compliance while actual risk steadily increases.

Why DPIAs Are Becoming a Competitive Advantage

As regulators increase scrutiny of AI systems, organizations that treat DPIAs as strategic tools rather than compliance burdens gain a practical advantage. Well-maintained DPIAs can support faster regulatory responses, smoother audits, and greater customer trust.

They also help organizations identify problems early, before those problems become enforcement actions, reputational damage, or costly redesigns.

Practical Takeaways for Dual-Role AI Providers

- DPIAs are not optional documentation exercises; they are operational risk tools.

- Dual-role AI providers must assess both development and deployment risks.

- A single DPIA can work, but only if it clearly separates risk phases.

- DPIAs must evolve alongside the AI system itself.

- Early collaboration between legal, technical, and product teams is essential.

Common Mistakes to Avoid

- Treating DPIAs as legal paperwork completed after development

- Failing to reassess DPIAs after model changes

- Assuming customer responsibility eliminates provider accountability

- Ignoring downstream uses of AI outputs

- Over-relying on generic templates that do not reflect actual system behavior

CCPA and CPRA Considerations for Dual-Role AI Providers

Dual-role AI providers that operate in California or process the personal information of California residents must treat privacy impact work as more than a GDPR-oriented exercise. The CCPA/CPRA framework creates practical obligations that map directly onto DPIA-style analysis, even when the term “DPIA” is not the label used internally.

In California, the central compliance questions for AI systems tend to converge on: (1) notice and transparency (what data is collected, how it is used, and whether it is disclosed); (2) purpose limitation in practice (whether the use aligns with what was disclosed and reasonably expected); (3) consumer choice controls (sale/sharing opt-outs, limits on the use of sensitive personal information, and opt-out preference signal handling where applicable); and (4) data minimization and retention governance (not keeping or using data longer than necessary for disclosed purposes).

For dual-role providers, the “two-phase” nature of AI processing matters under CCPA/CPRA. Training and fine-tuning can involve data flows that look very different from deployment. That distinction is operationally important because the compliance posture for training datasets, model telemetry, and inference logs can be different from the compliance posture for customer-facing product data. Treating everything as one undifferentiated “AI processing activity” is a common root cause of disclosure gaps and consumer rights failures.

Practically, a DPIA-style assessment can help California compliance by forcing teams to map and document: what personal information is used at training time; what is used at inference time; what is shared with customers or third parties; what is retained as logs; and what can be deleted, corrected, or de-identified without breaking the product.
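The mapping exercise described above can be kept as a small structured inventory rather than free text. The following is a minimal sketch, assuming one record per data flow; the `DataFlow` fields, the helper functions, and the sample flows are hypothetical and would need to reflect the actual system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DataFlow:
    name: str
    phase: str                          # "training" or "inference"
    shared_with: tuple[str, ...] = ()   # customers or third parties receiving it
    retained_in_logs: bool = False
    deletable: bool = True              # can be deleted without breaking the product


def flows_in_phase(flows: list[DataFlow], phase: str) -> list[str]:
    """List the flows that belong to one processing phase."""
    return [flow.name for flow in flows if flow.phase == phase]


def undisclosed(flows: list[DataFlow], notice_covers: set[str]) -> list[str]:
    """List flows that the privacy notice does not currently cover."""
    return [flow.name for flow in flows if flow.name not in notice_covers]


def deletion_blockers(flows: list[DataFlow]) -> list[str]:
    """List flows where a deletion request cannot currently be honored."""
    return [flow.name for flow in flows if not flow.deletable]


# Hypothetical inventory, for illustration only.
flows = [
    DataFlow("support_tickets", "training", deletable=False),
    DataFlow("user_prompts", "inference",
             shared_with=("customer",), retained_in_logs=True),
    DataFlow("usage_telemetry", "inference", retained_in_logs=True),
]
notice_covers = {"support_tickets", "user_prompts"}
```

Keeping training and inference flows as separate records makes disclosure gaps and consumer rights blockers visible per phase instead of hiding them inside one undifferentiated activity.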

ADMT Risk Assessments and DPIAs

Automated Decision-Making Technology (ADMT) is where privacy impact work often becomes business-critical rather than merely documentary. The moment an AI system is used to meaningfully influence or determine an outcome for an individual, risk escalates across multiple dimensions: transparency, fairness, contestability, accuracy, and over-reliance by human operators.

For dual-role AI providers, ADMT risk assessments should be treated as a specialized extension of the DPIA process. In a dual-role model, the provider may be accountable not only for the model’s design but also for how customers are enabled to use it, what guardrails exist, and what disclosures are realistically feasible. If customers can deploy the provider’s AI in high-impact contexts without sufficient controls, the provider’s risk is rarely limited to contractual language alone.

An ADMT-oriented risk assessment is strongest when it covers both provider-side controls and customer-side enablement: how decisions are explained; how outputs are constrained; how bias and performance are tested; how model changes are communicated; what appeals or human review processes are supported; and what “misuse scenarios” are foreseeable.

As a practical matter, the DPIA can serve as the umbrella document, while the ADMT risk assessment functions as a deep-dive annex that is updated more frequently, particularly as new use cases, integrations, or customer segments emerge.

DPIA Template Tailored to Dual-Role AI Providers

1) Document Control

DPIA Title: [Insert]

System / Product Name: [Insert]

Version / Release Identifier: [Insert]

Owner (Privacy): [Insert]

Owner (Engineering/Product): [Insert]

Date Created: [Insert]

Last Reviewed: [Insert]

Next Scheduled Review: [Insert]

2) Executive Summary

Purpose of the AI system: [Insert plain-language description]

Dual-role description: Explain how the organization acts as both (a) AI developer/trainer and (b) AI provider/deployer.

High-level risk posture: Summarize key risks and whether residual risk remains high, medium, or low after mitigations.

3) Scope and Context

Processing phases in scope: Clearly define what is included for (a) training/fine-tuning and (b) deployment/inference.

Geographies and populations: Identify affected regions and categories of individuals (customers, end users, prospects, minors, employees, etc.).

Use cases: List intended use cases and any reasonably foreseeable secondary uses.

4) Data Mapping and Flow Narrative

Training data sources: [Internal datasets, customer-provided data, public data, licensed data, etc.]

Inference data inputs: [What data is processed when the model is used]

Telemetry and logging: [What is logged, why, retention period, access controls]

Data sharing and disclosures: Identify where data moves to customers, subprocessors, third parties, or affiliates.

Retention schedule: Specify retention by category (training corpora, derived datasets, prompts, outputs, logs, audit artifacts).
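The retention schedule in this section can also be kept in machine-readable form so that stored records can be checked against it. This is a minimal sketch, assuming retention is expressed in days per category; the `RETENTION_DAYS` values are placeholders rather than recommended periods, and `is_past_retention` is a hypothetical helper.

```python
from datetime import date, timedelta

# Placeholder retention periods in days, keyed by the categories named in
# the template. These numbers are illustrative only, not legal guidance.
RETENTION_DAYS = {
    "training_corpora": 730,
    "derived_datasets": 365,
    "prompts": 30,
    "outputs": 30,
    "logs": 90,
    "audit_artifacts": 2190,
}


def is_past_retention(category: str, created: date, today: date) -> bool:
    """Return True if a record has been kept longer than its category allows."""
    if category not in RETENTION_DAYS:
        # An undocumented category is itself a finding worth surfacing.
        raise ValueError(f"No retention period documented for {category!r}")
    return today - created > timedelta(days=RETENTION_DAYS[category])
```

Raising an error for an undocumented category, instead of silently returning `False`, surfaces data that has no defined retention period at all.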

5) Necessity and Proportionality Assessment

Necessity: Explain why each major category of data is necessary for the stated purpose.

Alternatives: Identify less intrusive options considered (feature redesign, smaller dataset, de-identification, on-device processing, etc.).

Proportionality: Explain why the benefits justify the data processing scope.

6) Role Analysis (Provider vs. Customer)

Provider responsibilities: List what the provider controls (model design, guardrails, documentation, default settings, monitoring).

Customer responsibilities: List what customers control (use case selection, downstream disclosures, human review processes).

Boundary risks: Identify where responsibility boundaries can fail in real life (misuse scenarios, over-reliance, missing disclosures).

7) Risk Identification

Document risks separately for each phase:

Training/Fine-tuning risks: Data sensitivity, bias introduced by dataset, re-identification risk, provenance/licensing issues, retention overreach.

Deployment/Inference risks: Inference of sensitive attributes, unjustified profiling, inaccurate outputs, automation bias, lack of contestability.

Cross-cutting risks: Security, insider access, vendor/subprocessor risks, and transparency gaps.

8) ADMT-Specific Assessment (Annex or Embedded Section)

Decision impact: Describe whether outputs influence eligibility, pricing, access, ranking, moderation, or other consequential outcomes.

Explainability: What explanations can be provided to users and customers? What cannot be explained and why?

Human review: Where is human review required, recommended, or technically supported?

Appeals/contestability: What mechanisms exist for users to challenge outcomes or request review?

Performance monitoring: How are drift, bias, and error rates monitored post-deployment?

9) CCPA/CPRA Alignment Check

Notice: Confirm disclosures cover training and inference processing in clear terms.

Choice controls: Confirm that sale/sharing opt-outs, limits on the use of sensitive personal information (where applicable), and consumer request workflows are operational.

Data minimization and retention: Confirm retention is defensible and documented.

Service provider/contractor alignment: Confirm contractual and operational controls match the organization’s data role(s).

Consumer rights feasibility: Document how deletion, correction, and access requests are handled for relevant datasets and logs.

10) Mitigations and Controls

Technical controls: Access control, encryption, segregation of environments, prompt/output filtering, model guardrails.

Organizational controls: Training, approvals, incident response, vendor oversight, audit logging, policy enforcement.

Product controls: Default settings, customer admin tools, explainability UX, and safe deployment patterns.

11) Residual Risk Decision

Residual risk rating: [High / Medium / Low]

Decision rationale: Explain why the residual risk is acceptable or what gating items remain.

Approvals: Privacy, Security, Product, and Legal sign-off fields.

12) Review Triggers

Specify what events automatically trigger an update, such as: model retraining, new data sources, major feature changes, expansion into a new sensitive use case, new integration partners, or material changes in how outputs are used.

Final Thoughts

For organizations that both build and deploy AI, DPIAs are no longer a theoretical requirement discussed in policy forums. They are a practical necessity that shapes how AI systems are designed, governed, and trusted.

As AI regulation continues to evolve globally and domestically, dual-role providers that invest in thoughtful, well-structured DPIAs will be better positioned to adapt. More importantly, they will be better equipped to deploy AI responsibly in ways that respect individual rights while still enabling innovation.